Supervised Metric Learning for Retrieval via Contextual Similarity Optimization

Paper and Code

Oct 04, 2022

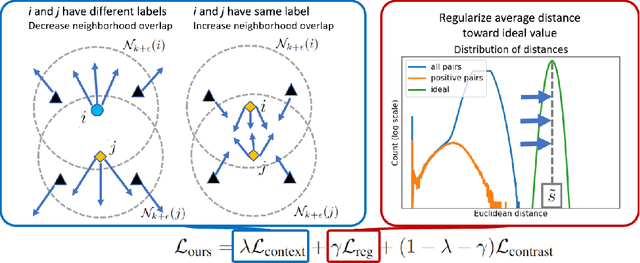

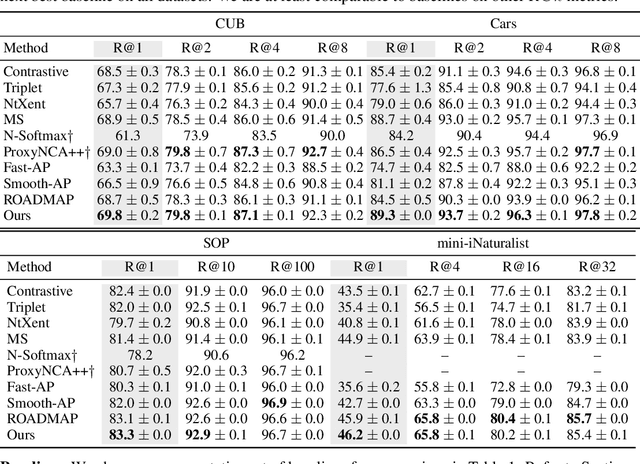

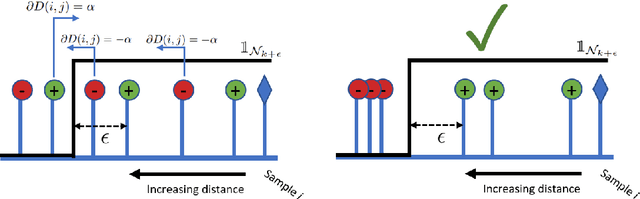

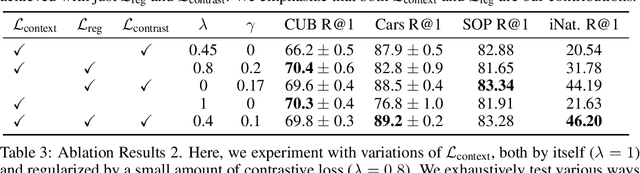

Existing deep metric learning approaches fall into three general categories: contrastive learning, average precision (AP) maximization, and classification. We propose a novel alternative approach, \emph{contextual similarity optimization}, inspired by work in unsupervised metric learning. Contextual similarity is a discrete similarity measure based on relationships between neighborhood sets, and is widely used in the unsupervised setting as pseudo-supervision. Inspired by this success, we propose a framework which optimizes \emph{a combination of contextual and cosine similarities}. Contextual similarity calculation involves several non-differentiable operations, including the heaviside function and intersection of sets. We show how to circumvent non-differentiability to explicitly optimize contextual similarity, and we further incorporate appropriate similarity regularization to yield our novel metric learning loss. The resulting loss function achieves state-of-the-art Recall @ 1 accuracy on standard supervised image retrieval benchmarks when combined with the standard contrastive loss. Code is released here: \url{https://github.com/Chris210634/metric-learning-using-contextual-similarity}

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge