Supervised Contrastive Learning on Blended Images for Long-tailed Recognition


Nov 22, 2022

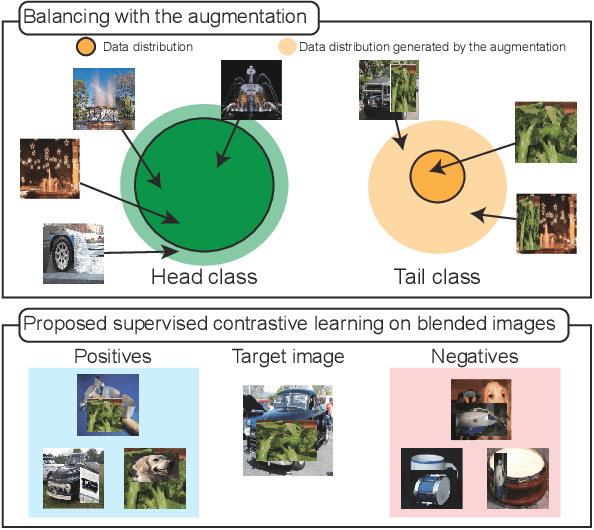

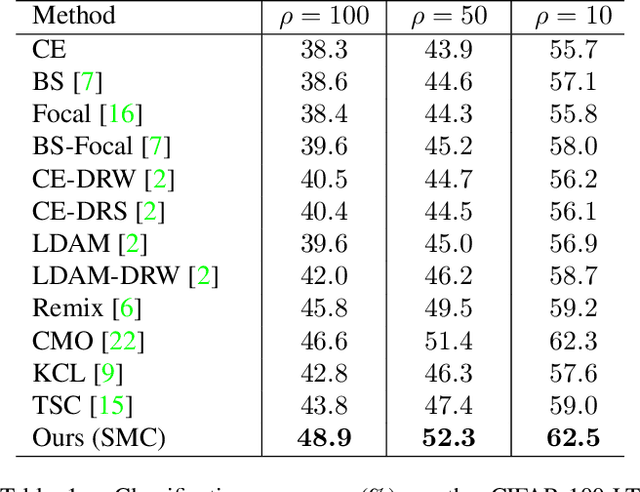

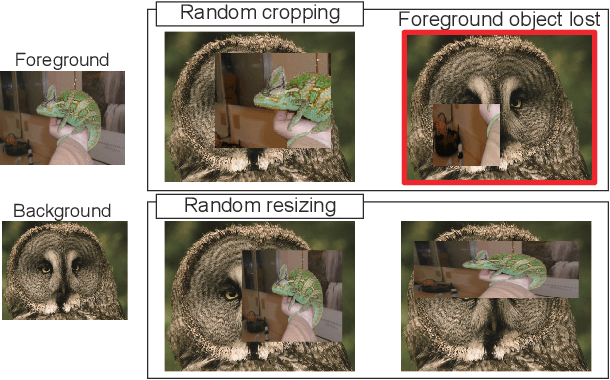

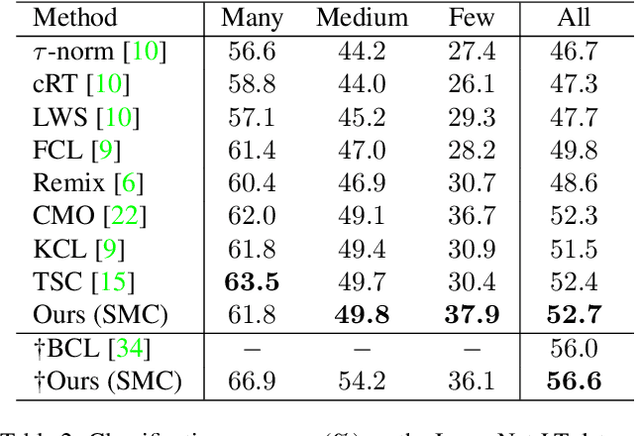

Real-world data often follow a long-tailed distribution, in which the number of samples per class varies widely across the training classes. Such imbalanced data yield a biased feature space, which degrades the performance of the recognition model. In this paper, we propose a novel long-tailed recognition method that balances the latent feature space. First, we introduce a MixUp-based data augmentation technique to reduce the bias of the long-tailed data. Furthermore, we propose a new supervised contrastive learning method for blended images, named Supervised contrastive learning on Mixed Classes (SMC). SMC builds the set of positives from the class labels of the original images, and the combination ratio of the positives determines their weights in the training loss. With this class-mixture-based loss, SMC explores a more diverse data space, enhancing the generalization capability of the model. Extensive experiments on various benchmarks show the effectiveness of our one-stage training method.
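To make the idea of ratio-weighted positives concrete, the following is a minimal NumPy sketch, not the formulation used in the paper. It assumes plain MixUp with a single mixing coefficient `lam` per batch, and it assumes that the weight of a pair of blended images is the dot product of their soft label vectors. The function names `mixup` and `mixed_class_supcon_loss`, the `num_classes` and `temperature` parameters, and the weighting rule are all illustrative choices; the abstract specifies none of them.

```python
import numpy as np

def mixup(x, y, lam, perm):
    """Blend each sample with a partner chosen by `perm` (plain MixUp, assumed).

    Returns the blended samples and the labels of both source images.
    """
    x_mix = lam * x + (1.0 - lam) * x[perm]
    return x_mix, y, y[perm]

def mixed_class_supcon_loss(z, y_a, y_b, lam, num_classes, temperature=0.1):
    """Supervised contrastive loss with positives weighted by class overlap.

    z: (N, D) embeddings of blended images.
    y_a, y_b: (N,) integer labels of the two source images of each blend.
    lam: mixing weight of y_a (1 - lam goes to y_b).
    The pair weight q_i . q_j is an assumption made for this sketch.
    """
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    n = z.shape[0]
    off_diag = ~np.eye(n, dtype=bool)

    # Log-softmax of each anchor's similarities over all *other* samples.
    logits = np.where(off_diag, z @ z.T / temperature, -np.inf)
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    log_prob = np.where(off_diag, log_prob, 0.0)  # drop the self-pair

    # Soft labels of the blended images, then pairwise overlap as weights.
    eye = np.eye(num_classes)
    q = lam * eye[y_a] + (1.0 - lam) * eye[y_b]
    w = (q @ q.T) * off_diag

    denom = w.sum(axis=1)
    valid = denom > 0  # anchors that have at least one positive
    if not valid.any():
        return 0.0
    per_anchor = -(w * log_prob).sum(axis=1)[valid] / denom[valid]
    return float(per_anchor.mean())
```

With `lam = 1.0` no blending occurs and the weights reduce to the usual 0/1 same-class indicator, so the sketch falls back to an ordinary supervised contrastive loss. For intermediate `lam`, a blended image acts as a partial positive for every class it contains.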
