Superpixel Segmentation: A Long-Lasting Ill-Posed Problem

Paper and Code

Nov 10, 2024

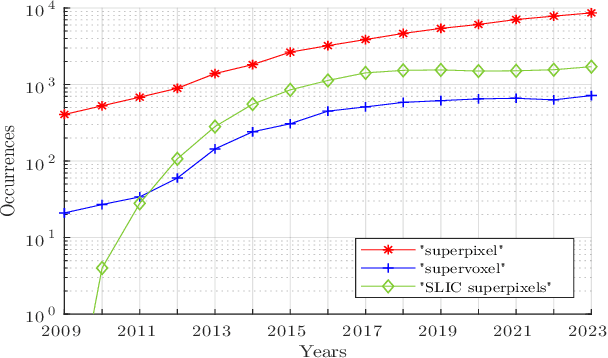

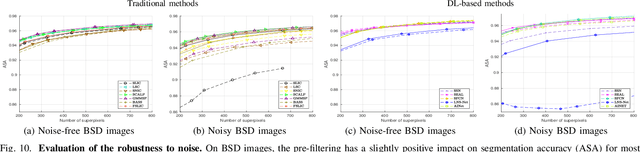

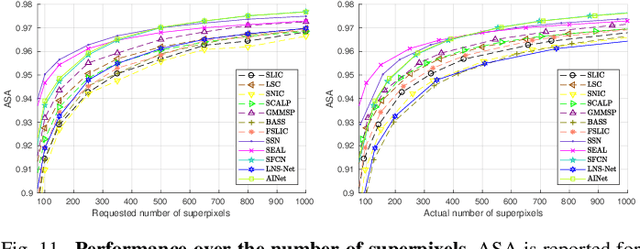

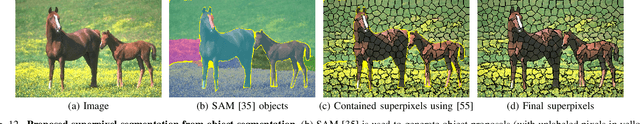

For many years, over-segmenting images into superpixels has been essential to computer vision pipelines, as it creates homogeneous and identifiable regions of similar sizes. Such a constrained segmentation problem would require a clear definition and specific evaluation criteria. However, the validation framework for superpixel methods, typically treated as standard object segmentation, has rarely been studied thoroughly. In this work, we first take a step back to show that superpixel segmentation is fundamentally an ill-posed problem, due to the implicit regularity constraint on the shape and size of superpixels. We also demonstrate through a novel comprehensive study that the literature suffers from evaluating only certain aspects, sometimes incorrectly and with inappropriate metrics. Meanwhile, recent deep learning-based superpixel methods mainly focus on the object segmentation task at the expense of regularity. In this ill-posed context, we show that competitive results can be achieved with a recent architecture such as the Segment Anything Model (SAM), without dedicated training for the superpixel segmentation task. This leads us to rethink superpixel segmentation and the properties it needs depending on the targeted downstream task.
