Style Transfer Generative Adversarial Networks: Learning to Play Chess Differently

Paper and Code

May 07, 2017

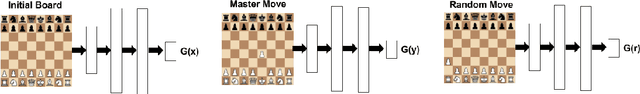

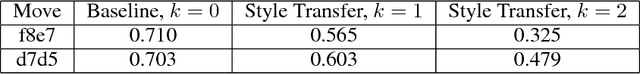

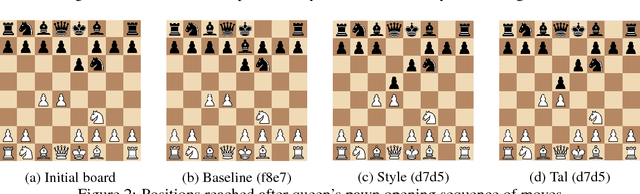

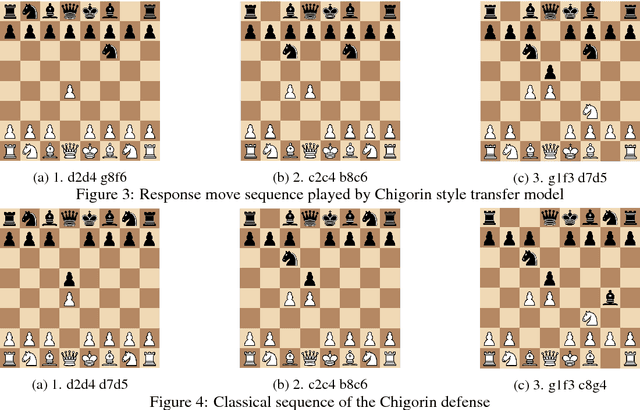

The idea of style transfer has largely only been explored in image-based tasks, which we attribute in part to the specific nature of loss functions used for style transfer. We propose a general formulation of style transfer as an extension of generative adversarial networks, by using a discriminator to regularize a generator with an otherwise separate loss function. We apply our approach to the task of learning to play chess in the style of a specific player, and present empirical evidence for the viability of our approach.

* style transfer, Generative Adversarial Networks

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge