Style-transfer GANs for bridging the domain gap in synthetic pose estimator training


Apr 28, 2020

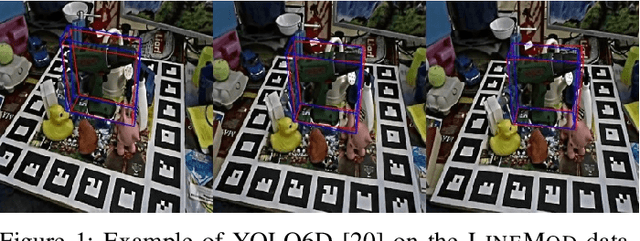

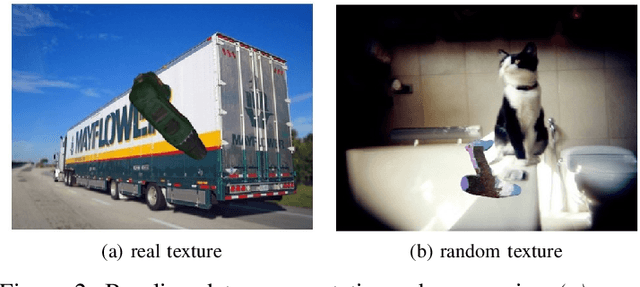

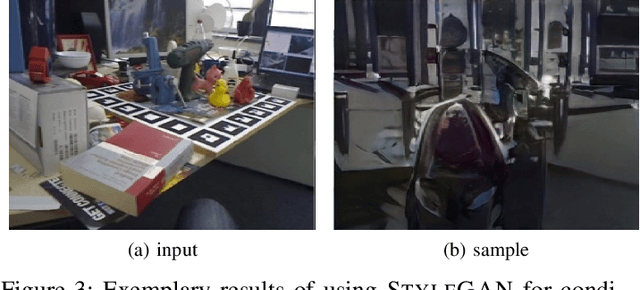

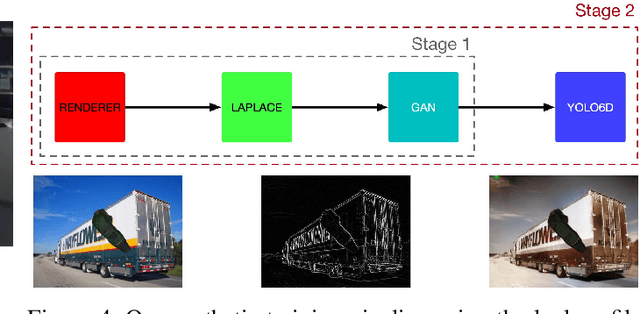

Because current CNN architectures depend on large training sets, synthetic data is alluring: it allows generating a virtually unlimited amount of labeled training data. However, producing such data is non-trivial, as current CNN architectures are sensitive to the domain gap between real and synthetic data. We propose to adopt general-purpose GAN models for pixel-level image translation, which allows the domain gap itself to be formulated as a learning problem. Here, we focus on training the single-stage YOLO6D object pose estimator on synthetic CAD geometry only, where not even approximate surface information is available. Our evaluation shows a considerable improvement in model performance compared to a model trained with the same degree of domain randomization, while requiring very little additional effort.
