Structure Learning in Graphical Models from Indirect Observations

Paper and Code

May 06, 2022

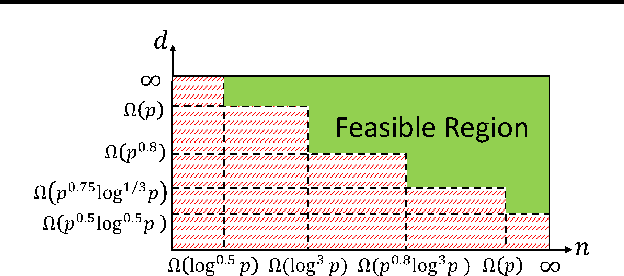

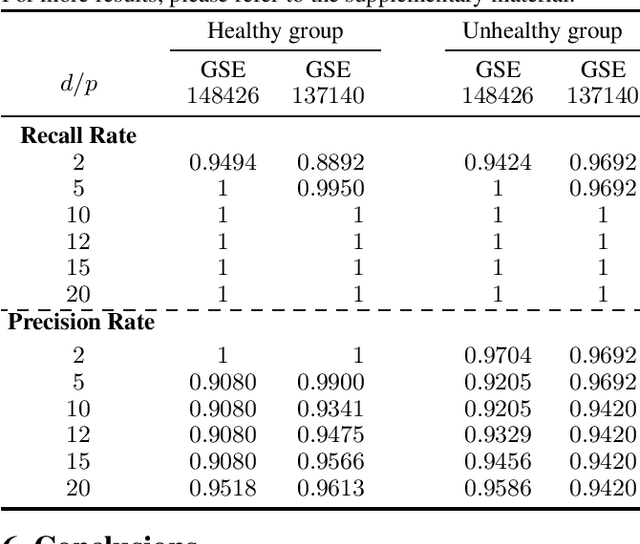

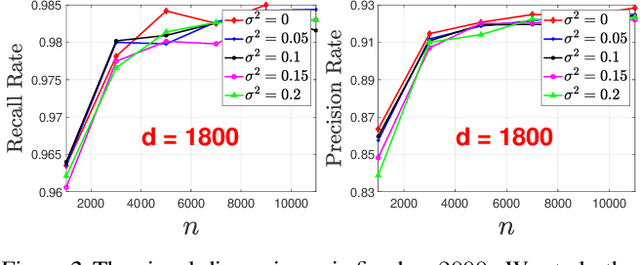

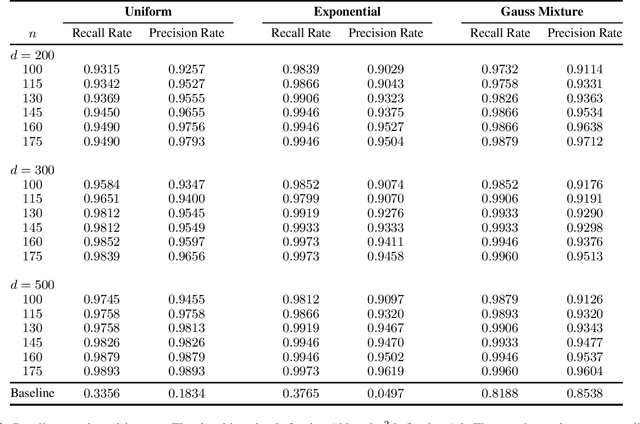

This paper considers learning of the graphical structure of a $p$-dimensional random vector $X \in R^p$ using both parametric and non-parametric methods. Unlike the previous works which observe $x$ directly, we consider the indirect observation scenario in which samples $y$ are collected via a sensing matrix $A \in R^{d\times p}$, and corrupted with some additive noise $w$, i.e, $Y = AX + W$. For the parametric method, we assume $X$ to be Gaussian, i.e., $x\in R^p\sim N(\mu, \Sigma)$ and $\Sigma \in R^{p\times p}$. For the first time, we show that the correct graphical structure can be correctly recovered under the indefinite sensing system ($d < p$) using insufficient samples ($n < p$). In particular, we show that for the exact recovery, we require dimension $d = \Omega(p^{0.8})$ and sample number $n = \Omega(p^{0.8}\log^3 p)$. For the nonparametric method, we assume a nonparanormal distribution for $X$ rather than Gaussian. Under mild conditions, we show that our graph-structure estimator can obtain the correct structure. We derive the minimum sample number $n$ and dimension $d$ as $n\gtrsim (deg)^4 \log^4 n$ and $d \gtrsim p + (deg\cdot\log(d-p))^{\beta/4}$, respectively, where deg is the maximum Markov blanket in the graphical model and $\beta > 0$ is some fixed positive constant. Additionally, we obtain a non-asymptotic uniform bound on the estimation error of the CDF of $X$ from indirect observations with inexact knowledge of the noise distribution. To the best of our knowledge, this bound is derived for the first time and may serve as an independent interest. Numerical experiments on both real-world and synthetic data are provided confirm the theoretical results.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge