Strong Consistency of Prototype Based Clustering in Probabilistic Space


Apr 19, 2010

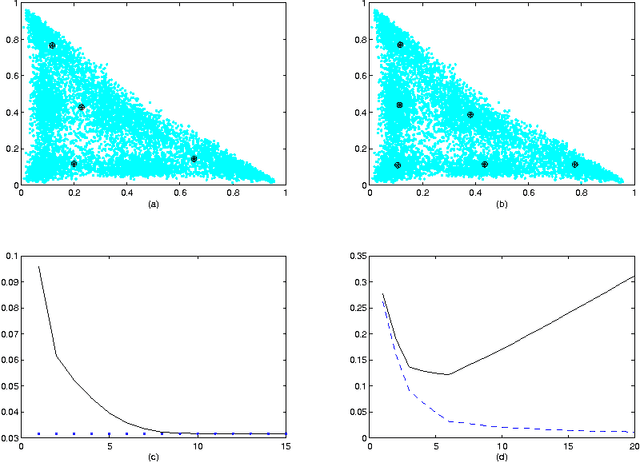

In this paper we formulate, in general terms, an approach to proving strong consistency of the Empirical Risk Minimisation inductive principle applied to prototype-based (or distance-based) clustering. This approach was motivated by the Divisive Information-Theoretic Feature Clustering model in probabilistic space with Kullback-Leibler divergence, which may be regarded as a special case within the Clustering Minimisation framework. We also propose a clustering regularization that restricts the creation of additional clusters that are not significant or not essentially different from existing clusters.
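To make the setting concrete, the following is a minimal sketch of prototype-based clustering of discrete probability distributions under Kullback-Leibler divergence, together with the empirical risk it minimises. It is an illustration under stated assumptions, not the paper's algorithm: the function names (`kl`, `empirical_risk`, `kl_cluster`), the uniform weighting of points, the fixed iteration count, and the toy data are all hypothetical, and the proposed regularization is not modelled.

```python
import math


def kl(p, q, eps=1e-12):
    """Kullback-Leibler divergence KL(p || q) for discrete distributions.

    Terms with p_i == 0 contribute nothing; q_i is floored at eps
    (an assumption of this sketch) to avoid division by zero.
    """
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)


def empirical_risk(points, prototypes):
    """Average divergence from each point to its nearest prototype."""
    return sum(min(kl(p, q) for q in prototypes) for p in points) / len(points)


def kl_cluster(points, prototypes, n_iter=20):
    """Alternate nearest-prototype assignment and prototype re-estimation.

    With uniform weights, the prototype minimising the summed KL(p || q)
    over a cluster is the arithmetic mean of the cluster's members.
    """
    prototypes = [list(q) for q in prototypes]
    for _ in range(n_iter):
        clusters = [[] for _ in prototypes]
        for p in points:
            nearest = min(range(len(prototypes)), key=lambda c: kl(p, prototypes[c]))
            clusters[nearest].append(p)
        for j, members in enumerate(clusters):
            if members:  # keep the old prototype if a cluster is empty
                prototypes[j] = [sum(col) / len(members) for col in zip(*members)]
    return prototypes


# Toy data (hypothetical): four distributions over two outcomes.
points = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]
initial = [[0.6, 0.4], [0.4, 0.6]]
fitted = kl_cluster(points, initial)
```

Each step of the alternation cannot increase the empirical risk, so `empirical_risk(points, fitted)` is no larger than `empirical_risk(points, initial)`. Consistency, in the sense discussed above, concerns how the minimiser of this empirical quantity behaves as the sample grows.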
