String-based Molecule Generation via Multi-decoder VAE

Aug 23, 2022

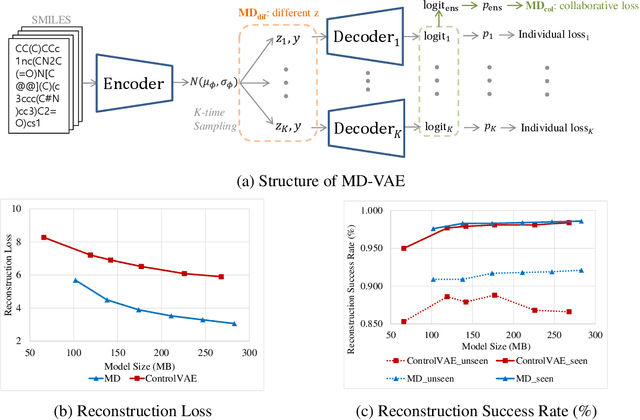

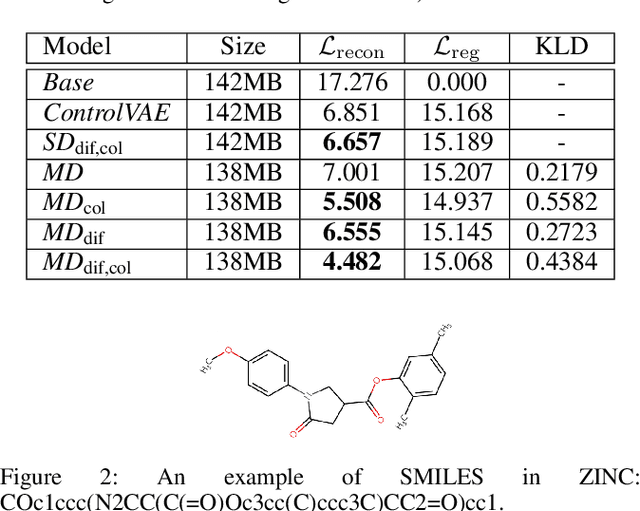

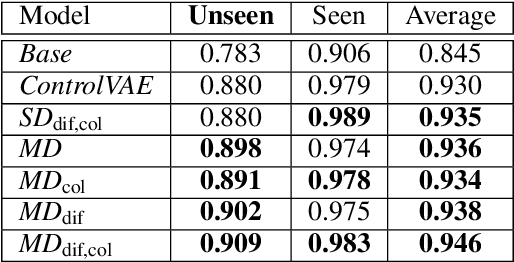

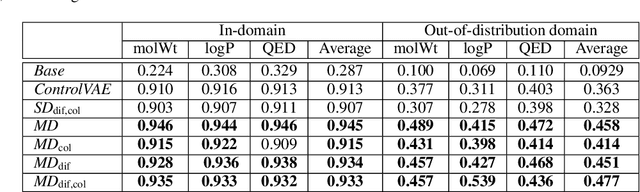

In this paper, we investigate string-based molecular generation with variational autoencoders (VAEs), which have served as a popular generative approach for various tasks in artificial intelligence. We propose a simple yet effective idea to improve the performance of VAEs on this task: maintain multiple decoders that share a single encoder, i.e., a type of ensemble technique. We first found that training each decoder independently may not be effective, because the bias of the ensemble decoder increases severely under auto-regressive inference. To keep both the bias and the variance of the ensemble model small, the proposed technique is two-fold: (a) a different latent variable is sampled for each decoder (from the mean and variance estimated by the shared encoder) to encourage diverse decoder characteristics, and (b) a collaborative loss is used during training to control the aggregated quality of the decoders under their different latent variables. In our experiments, the proposed VAE model performs particularly well at generating samples from out-of-domain distributions.
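The two ingredients described above can be illustrated with a minimal, dependency-free sketch. It is not the paper's implementation: the toy linear decoders, the single decoding step, and the choice to aggregate by averaging token probabilities before taking the negative log-likelihood are all assumptions made for illustration, and every name (`sample_latent`, `decode_step`, `ensemble_step`, `collaborative_nll`) is hypothetical. The KL term of a full VAE objective is omitted.

```python
import math
import random


def sample_latent(mu, logvar, rng):
    """Reparameterized sample z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, logvar)]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decode_step(weights, z):
    """Toy linear decoder (assumption): one weight row per vocabulary token."""
    logits = [sum(w * zi for w, zi in zip(row, z)) for row in weights]
    return softmax(logits)


def ensemble_step(decoders, mu, logvar, rng):
    """Draw a separate latent for each decoder, then average their
    next-token distributions (assumed aggregation rule)."""
    per_decoder = [decode_step(w, sample_latent(mu, logvar, rng))
                   for w in decoders]
    vocab = len(per_decoder[0])
    return [sum(p[t] for p in per_decoder) / len(per_decoder)
            for t in range(vocab)]


def collaborative_nll(decoders, mu, logvar, target, rng):
    """Negative log-likelihood of the target token under the averaged
    distribution, so the loss scores the decoders jointly."""
    return -math.log(ensemble_step(decoders, mu, logvar, rng)[target])


rng = random.Random(0)
latent_dim, vocab_size, num_decoders = 4, 5, 3

# Randomly initialized toy decoders: num_decoders x vocab_size x latent_dim.
decoders = [[[rng.uniform(-1.0, 1.0) for _ in range(latent_dim)]
             for _ in range(vocab_size)]
            for _ in range(num_decoders)]

# Stand-ins for the shared encoder's output for one input string.
mu = [0.1, -0.2, 0.3, 0.0]
logvar = [-1.0] * latent_dim

probs = ensemble_step(decoders, mu, logvar, rng)
loss = collaborative_nll(decoders, mu, logvar, target=2, rng=rng)
```

Because each decoder receives its own draw from the shared posterior, the decoders see different inputs for the same molecule, while the loss on the averaged distribution ties their training together rather than fitting each one in isolation.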
