Stochastic Video Long-term Interpolation

Sep 07, 2018

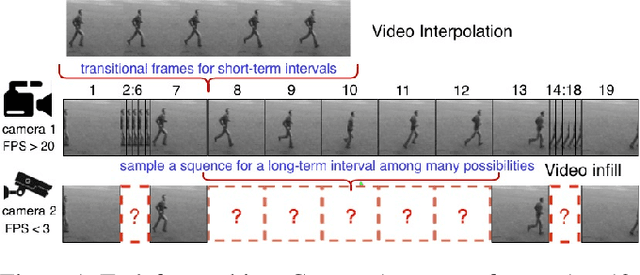

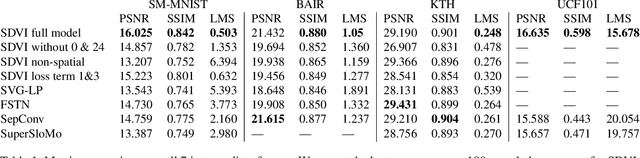

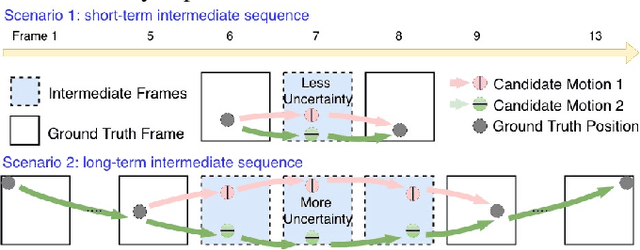

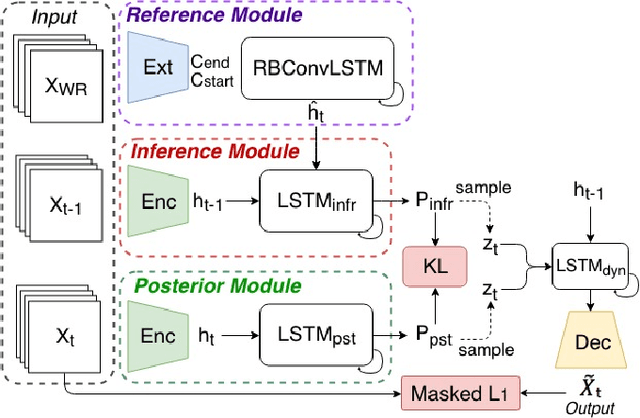

In this paper, we introduce a stochastic learning framework for long-term video interpolation. While most existing interpolation models require two reference frames separated by a short interval, our framework predicts a plausible intermediate sequence across a long interval. Our model consists of two parts: (1) a deterministic estimation that maintains spatial and temporal coherence among frames, and (2) a stochastic sampling process that generates dynamics from inferred distributions. Experimental results show that our model generates sharp, clear, and varied sequences. Moreover, the motion in the generated sequences is realistic and transitions smoothly from the reference start frame to the end frame.
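To make the two-part decomposition concrete, the following is a minimal toy sketch and not the paper's model. It assumes the deterministic part can be stood in for by a linear blend of the two reference frames, and the stochastic part by Gaussian noise that fades to zero at the endpoints. The function name `interpolate_sequence` and the parameters `noise_scale` and `rng` are hypothetical; the actual framework infers its distributions with learned networks.

```python
import numpy as np

def interpolate_sequence(start, end, num_frames, noise_scale=0.05, rng=None):
    """Toy illustration only: deterministic blend plus a sampled perturbation.

    start, end : arrays of identical shape (the two reference frames).
    num_frames : number of intermediate frames to produce.
    noise_scale: hypothetical knob for the strength of the stochastic part.
    """
    rng = np.random.default_rng() if rng is None else rng
    start = np.asarray(start, dtype=float)
    end = np.asarray(end, dtype=float)

    # Blend weights strictly between 0 and 1, one per intermediate frame,
    # shaped so they broadcast over the frame dimensions.
    t = np.linspace(0.0, 1.0, num_frames + 2)[1:-1]
    t = t.reshape(-1, *([1] * start.ndim))

    # (1) Deterministic part (assumption: a plain linear blend) keeps the
    # sequence coherent with both reference frames.
    mean = (1.0 - t) * start + t * end

    # (2) Stochastic part (assumption: isotropic Gaussian noise) adds
    # variation; the envelope shrinks it near the reference frames.
    envelope = 4.0 * t * (1.0 - t)
    noise = rng.standard_normal(mean.shape) * noise_scale * envelope

    return mean + noise
```

Calling the function twice with different random generators on the same pair of reference frames yields two different intermediate sequences, which mirrors the idea of sampling several plausible interpolations for one start and end frame.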
