Stochastic Trust Region Inexact Newton Method for Large-scale Machine Learning


Dec 26, 2018

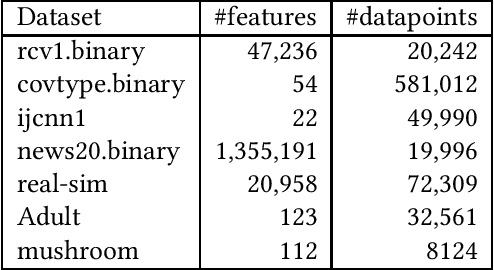

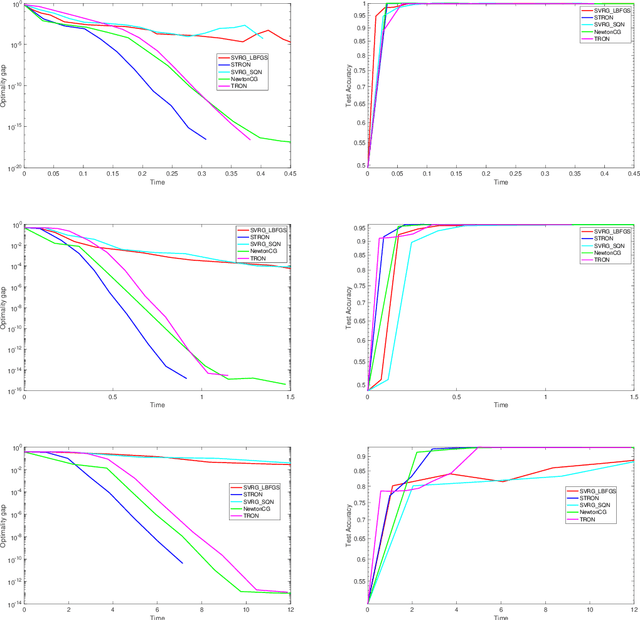

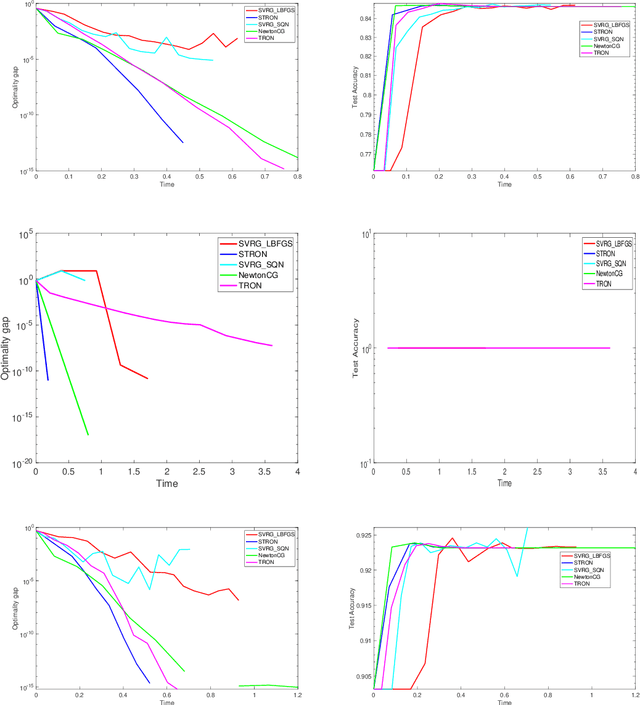

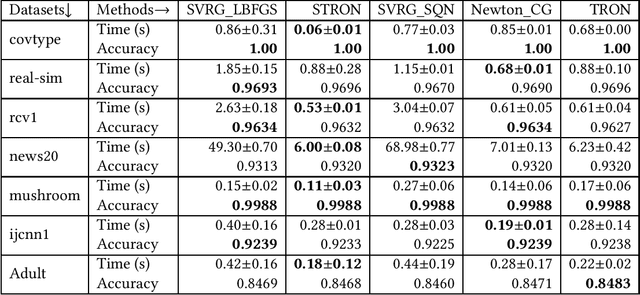

Stochastic approximation methods are one of the major research directions for large-scale machine learning problems. The focus is now shifting from stochastic first-order methods to stochastic second-order methods because of their faster convergence. In this paper, we propose a novel Stochastic Trust RegiOn inexact Newton method, called STRON, which uses conjugate gradient (CG) to solve the trust region subproblem. The method uses progressive subsampling when computing gradient and Hessian values, so that it benefits from both the stochastic approximation and full-batch regimes. We extend STRON with existing variance reduction techniques to deal with noisy gradients, and with preconditioned conjugate gradient (PCG) as the subproblem solver. We further extend STRON to solve SVM. Finally, the theoretical results prove superlinear convergence for STRON, and the empirical results demonstrate the efficacy of the proposed method against existing methods on benchmark datasets.
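To make the role of CG in the trust region subproblem concrete, the following is a minimal sketch of the standard Steihaug-Toint truncated CG solver, which approximately minimizes the quadratic model g^T s + 0.5 s^T H s subject to ||s|| <= radius. It is a generic textbook version, not necessarily the exact variant used in STRON. The names `steihaug_cg`, `hess_vec`, and `_step_to_boundary` are hypothetical, and the sketch assumes `hess_vec` returns a Hessian-vector product, which in a subsampled setting would be computed on the current mini-batch.

```python
import numpy as np

def _step_to_boundary(s, d, radius):
    # Positive root tau of ||s + tau * d|| = radius (quadratic in tau).
    a = d @ d
    b = 2.0 * (s @ d)
    c = s @ s - radius ** 2
    return (-b + np.sqrt(b * b - 4.0 * a * c)) / (2.0 * a)

def steihaug_cg(hess_vec, grad, radius, tol=1e-8, max_iter=50):
    """Approximately solve min_s grad^T s + 0.5 s^T H s with ||s|| <= radius.

    hess_vec: callable v -> H @ v (assumed; may use a subsampled Hessian).
    """
    s = np.zeros_like(grad, dtype=float)
    r = -np.asarray(grad, dtype=float)  # residual of H s = -grad at s = 0
    d = r.copy()                        # first search direction
    if np.linalg.norm(r) < tol:
        return s
    for _ in range(max_iter):
        Hd = hess_vec(d)
        dHd = d @ Hd
        if dHd <= 0.0:
            # Negative or zero curvature: follow d to the boundary.
            return s + _step_to_boundary(s, d, radius) * d
        alpha = (r @ r) / dHd
        s_next = s + alpha * d
        if np.linalg.norm(s_next) >= radius:
            # The CG step leaves the region: stop on the boundary.
            return s + _step_to_boundary(s, d, radius) * d
        r_next = r - alpha * Hd
        if np.linalg.norm(r_next) < tol:
            return s_next
        beta = (r_next @ r_next) / (r @ r)
        d = r_next + beta * d
        s, r = s_next, r_next
    return s

# Usage on a small convex quadratic (illustrative values only).
H = np.diag([2.0, 4.0])
g = np.array([-2.0, -4.0])
step_inside = steihaug_cg(lambda v: H @ v, g, radius=10.0)   # Newton step fits
step_clipped = steihaug_cg(lambda v: H @ v, g, radius=0.5)   # stops on boundary
```

With a large radius the solver returns the (inexact) Newton step; with a small radius it returns a step whose norm equals the radius. A full trust region method would then compare actual and predicted reduction to accept or reject the step and to adjust the radius.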
