Stochastic Modeling for Learnable Human Pose Triangulation


Oct 01, 2021

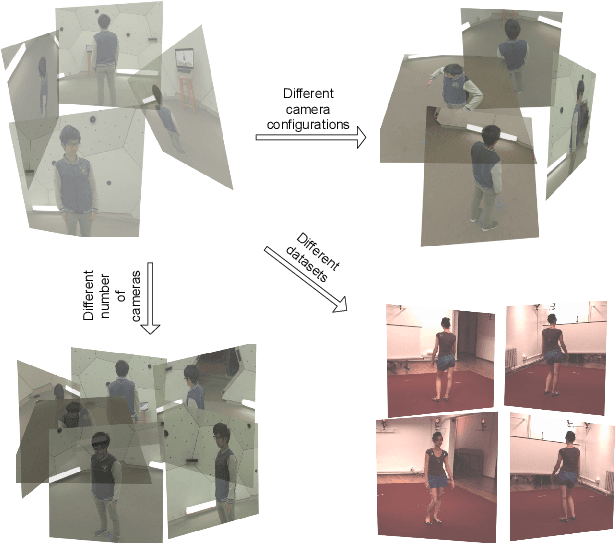

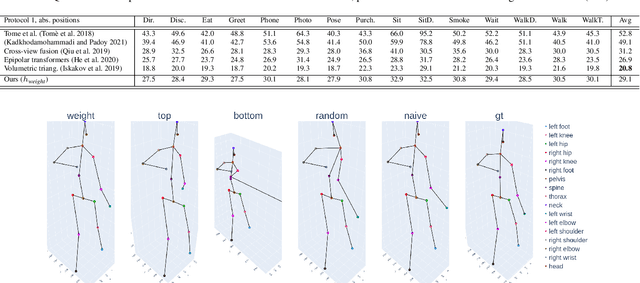

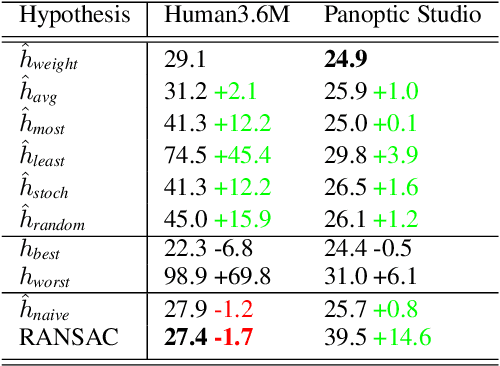

We propose a stochastic modeling framework for 3D human pose triangulation and evaluate its performance across different datasets and spatial camera arrangements. The common approach to 3D pose estimation is to first detect 2D keypoints in images and then triangulate them from multiple views. However, the majority of existing triangulation models are limited to a single dataset, i.e., to one camera arrangement and one number of cameras. Moreover, they require known camera parameters. The proposed stochastic pose triangulation model successfully generalizes to different camera arrangements and between two public datasets. In each step, we generate a set of 3D pose hypotheses, each obtained by triangulation from a random subset of views. The hypotheses are evaluated by a neural network, and the expectation of the triangulation error is minimized. The key novelty is that the network learns to evaluate the poses without taking into account the spatial camera arrangement, thus improving generalization. Additionally, we demonstrate that the proposed stochastic framework can also be used for fundamental matrix estimation, showing promising results towards relative camera pose estimation from noisy keypoint correspondences.
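The hypothesis-generation step described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. It assumes known 3x4 projection matrices, handles a single keypoint rather than a full pose, uses plain linear (DLT) triangulation, and takes the hypothesis scores as an input where the paper uses a neural network. The names `triangulate_dlt`, `sample_hypotheses`, and `expected_error` are hypothetical.

```python
import numpy as np


def triangulate_dlt(points_2d, proj_mats):
    """Linear (DLT) triangulation of one 3D point from two or more views.

    points_2d: (n, 2) pixel coordinates; proj_mats: (n, 3, 4) projection matrices.
    """
    rows = []
    for (u, v), P in zip(points_2d, proj_mats):
        # Each view contributes two linear constraints on the homogeneous point.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    # The solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    X = vt[-1]
    return X[:3] / X[3]


def sample_hypotheses(points_2d, proj_mats, n_hyp, subset_size, rng):
    """Triangulate n_hyp hypotheses, each from a random subset of views."""
    n_views = len(proj_mats)
    hyps = []
    for _ in range(n_hyp):
        idx = rng.choice(n_views, size=subset_size, replace=False)
        hyps.append(triangulate_dlt(points_2d[idx], proj_mats[idx]))
    return np.stack(hyps)


def expected_error(scores, errors):
    """Expectation of per-hypothesis errors under a softmax over the scores.

    In the paper the scores come from a neural network; here they are
    simply passed in (assumption made for this sketch).
    """
    w = np.exp(scores - np.max(scores))  # numerically stable softmax
    p = w / w.sum()
    return float(p @ errors)
```

In training, the errors would be distances between each hypothesis and the ground-truth pose, and the expectation would be minimized with respect to the parameters of the scoring network. Because the scorer sees only the hypothesized poses, not the cameras that produced them, the same scorer can in principle be reused across camera arrangements.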
