Stealing Neural Networks via Timing Side Channels


Dec 31, 2018

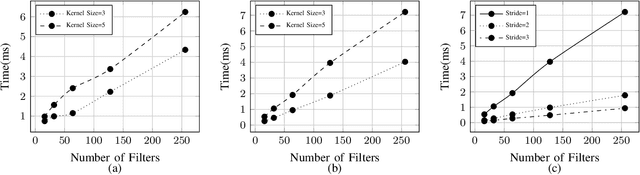

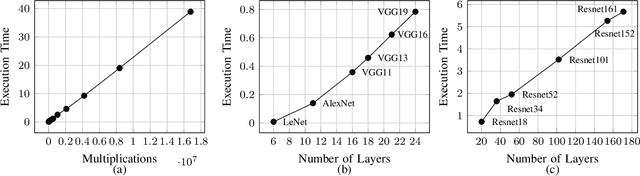

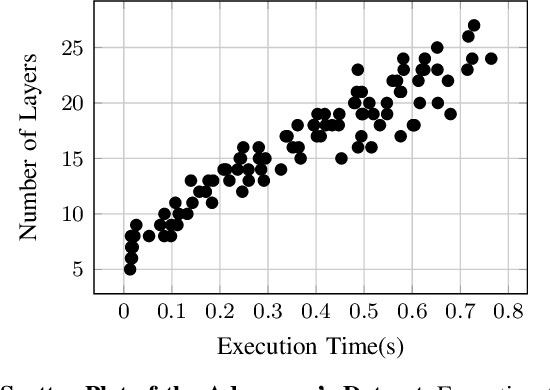

Deep learning is gaining importance in many applications, and cloud infrastructures are being advocated for this computational paradigm. However, one security issue has yet to be addressed: an adversary can extract the neural network architecture for commercial gain. Given the architecture, an adversary can further infer the regularization hyperparameter and the input data, and can generate effective transferable adversarial examples to evade classifiers. We observe that neural networks are vulnerable to timing side channel attacks because the layers are computed sequentially, so the total execution time of the network depends on its depth. In this paper, we propose a black-box neural network extraction attack that exploits timing side channels to infer the depth of the network. The proposed approach is scalable and independent of the neural network architecture. Reconstructing a substitute architecture with functionality similar to the target model is a search problem, and the depth inferred from the timing side channel reduces the search space. Reinforcement learning with knowledge distillation is then used to search efficiently for the optimal substitute architecture in the reduced, yet still complex, search space. We evaluate our attack on VGG architectures on the CIFAR10 dataset and reconstruct substitute models with test accuracy close to that of the target models.
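The core observation, that sequential layer computation makes latency grow with depth, can be illustrated with a small simulation. This is only a sketch under stated assumptions, not the paper's implementation: the linear latency model, its constants (`per_layer_ms`, `overhead_ms`, `jitter_ms`), and the nearest-median lookup in `infer_depth` are all hypothetical stand-ins chosen for illustration, and no real network is timed.

```python
import random
import statistics


def simulated_latency(depth, rng, per_layer_ms=1.5, overhead_ms=4.0, jitter_ms=0.3):
    """Hypothetical latency model: layers run one after another,
    so total time grows linearly with depth, plus Gaussian jitter."""
    return overhead_ms + per_layer_ms * depth + rng.gauss(0.0, jitter_ms)


def profile(depths, rng, queries=200):
    """Adversary's offline profiling step (assumed): record the median
    latency for each candidate depth on comparable hardware."""
    return {
        d: statistics.median(simulated_latency(d, rng) for _ in range(queries))
        for d in depths
    }


def infer_depth(observed_latencies, reference):
    """Return the candidate depth whose profiled median latency is
    closest to the median latency observed from the black-box target."""
    observed = statistics.median(observed_latencies)
    return min(reference, key=lambda d: abs(reference[d] - observed))


rng = random.Random(0)  # fixed seed so the simulation is repeatable
candidate_depths = range(8, 20)
reference = profile(candidate_depths, rng)

# The "target" is queried as a black box; only its response times are seen.
target_depth = 16
observed = [simulated_latency(target_depth, rng) for _ in range(200)]
guess = infer_depth(observed, reference)
print(f"inferred depth: {guess}")
```

Taking the median over many queries suppresses jitter, which is why the lookup stays reliable as long as the per-layer cost is large relative to the noise. A depth estimate of this kind is what narrows the architecture search space described above.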
