Stabilizing Generative Adversarial Network Training: A Survey

Sep 30, 2019

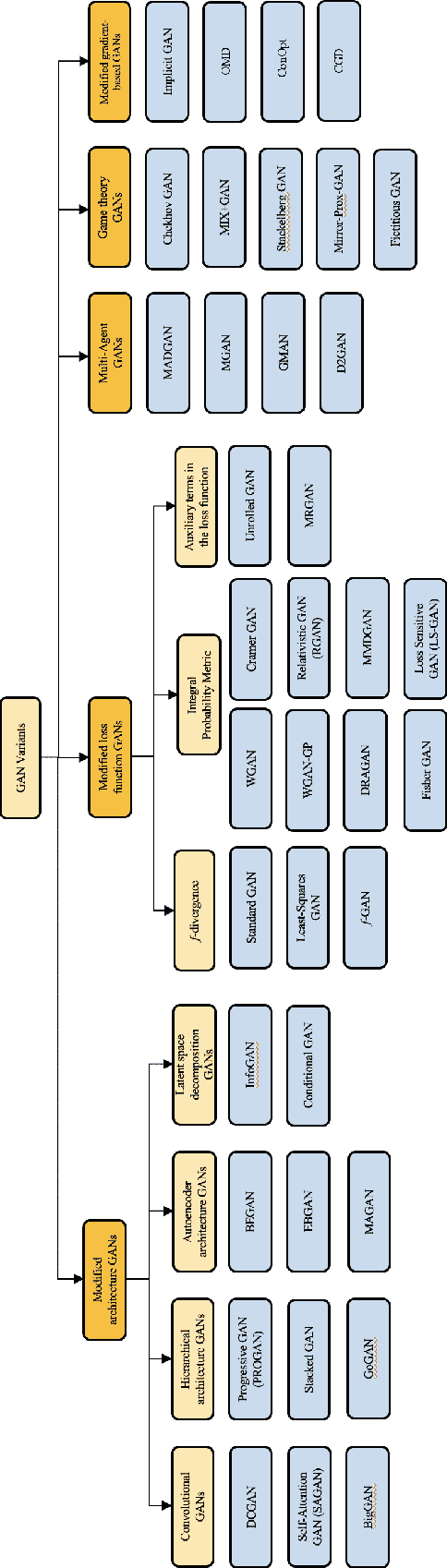

Generative Adversarial Networks (GANs) are a class of generative models that have received wide attention for their ability to model complex real-world data. With a large number of potential applications and a promise to alleviate some of the major issues in the field of Artificial Intelligence, GANs have seen rapid progress in recent years. Despite this relative success, training GANs remains challenging and suffers from instability issues such as mode collapse and vanishing gradients. This survey summarizes the approaches and methods employed to stabilize the GAN training procedure. We provide a brief explanation of how GANs work and categorize the stabilization techniques employed, outlining each approach. Finally, we discuss the advantages and disadvantages of each method, offering a comparative summary of the literature on stabilizing GAN training.
