Stability and Generalization Capabilities of Message Passing Graph Neural Networks


Feb 04, 2022

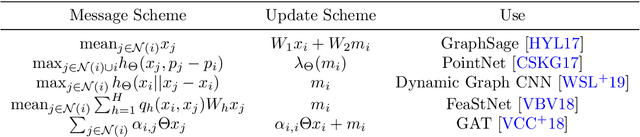

Message passing neural networks (MPNNs) have risen steeply in popularity since their introduction as generalizations of convolutional neural networks to graph-structured data, and are now considered state-of-the-art tools for solving a wide variety of graph-focused problems. We study the generalization capabilities of MPNNs in graph classification. We assume that graphs of different classes are sampled from different random graph models. Based on this data distribution, we derive a non-asymptotic bound on the generalization gap between the empirical and statistical loss that decreases to zero as the graphs become larger. This is proven by showing that an MPNN applied to a graph approximates the MPNN applied to the geometric model that the graph discretizes.
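
The central step of the argument, that an MPNN applied to a sampled graph approximates the same MPNN applied to the underlying random graph model, can be illustrated numerically. The sketch below is not the authors' construction and does not compute their bound. It assumes a one-dimensional latent space [0, 1] with uniformly sampled node positions, a hypothetical graphon `W(x, y) = (x + y) / 2` that gives edge probabilities, a hypothetical node signal `f(x) = x`, a single layer with normalized sum aggregation and hand-picked weights, and a mean readout. All function names and constants are illustrative.

```python
import numpy as np

# Hypothetical layer weights for a single message passing layer.
A_SELF, B_NEIGH = -1.0, 2.0


def graphon(x, y):
    # Hypothetical random graph model: W(x, y) in [0, 1] is the edge probability.
    return (x[:, None] + y[None, :]) / 2.0


def signal(x):
    # Hypothetical node-feature function on the latent space [0, 1].
    return x


def relu(z):
    return np.maximum(z, 0.0)


def mpnn_on_graph(adj, feats):
    """One MPNN layer with normalized sum aggregation, then a mean readout."""
    n = len(feats)
    agg = adj @ feats / n  # (1/n) * sum_j A_ij f_j
    h = relu(A_SELF * feats + B_NEIGH * agg)
    return h.mean()


def mpnn_on_model(grid_size=2000):
    """The same layer on the continuous model, via midpoint quadrature on [0, 1]."""
    x = (np.arange(grid_size) + 0.5) / grid_size
    f = signal(x)
    agg = graphon(x, x) @ f / grid_size  # approximates integral of W(x, y) f(y) dy
    h = relu(A_SELF * f + B_NEIGH * agg)
    return h.mean()  # approximates integral of h(x) dx


def sample_graph(n, rng):
    """Sample an undirected graph without self-loops from the graphon."""
    x = rng.uniform(size=n)
    probs = graphon(x, x)
    upper = np.triu(rng.uniform(size=(n, n)) < probs, k=1)
    adj = (upper | upper.T).astype(float)
    return adj, signal(x)


def mean_error(n, trials, rng):
    """Average |graph output - model output| over independently sampled graphs."""
    target = mpnn_on_model()
    errs = [abs(mpnn_on_graph(*sample_graph(n, rng)) - target) for _ in range(trials)]
    return float(np.mean(errs))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    for n in (25, 100, 400, 1600):
        print(n, mean_error(n, 20, rng))
```

For this particular choice the continuous output works out to 1/9, and the printed average error shrinks as `n` grows, which mirrors (under these toy assumptions only) the "graphs become larger" regime in which the stated bound decreases to zero.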
