SSTN: Self-Supervised Domain Adaptation Thermal Object Detection for Autonomous Driving

Mar 04, 2021

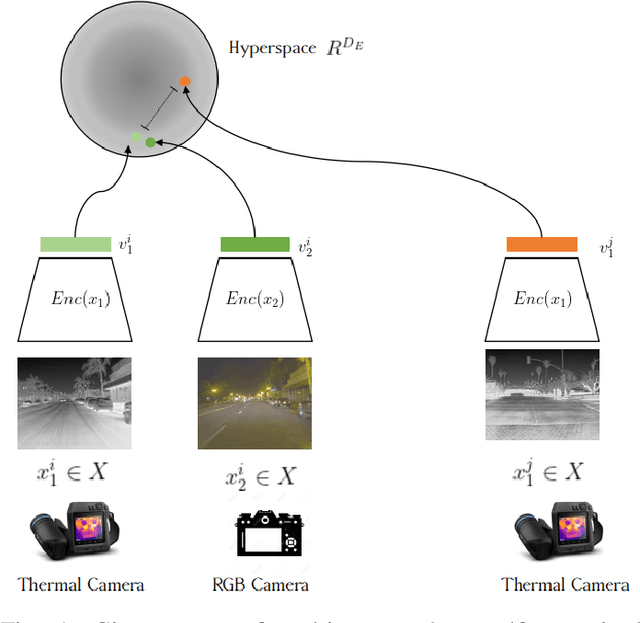

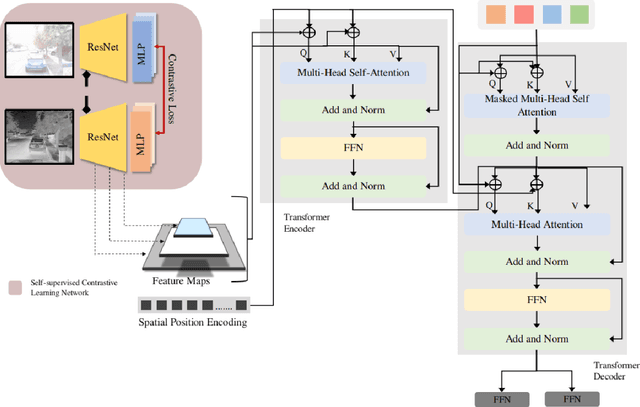

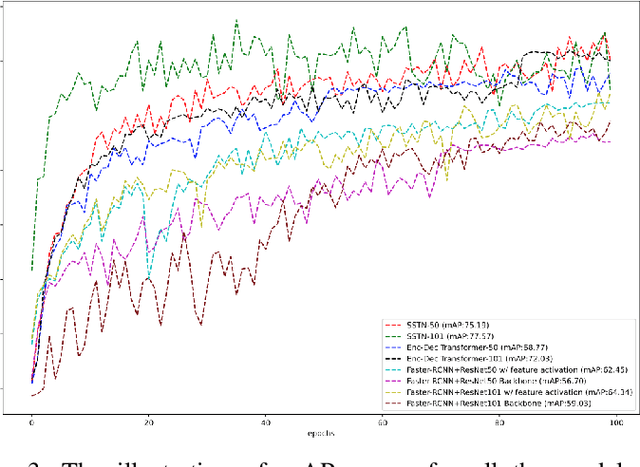

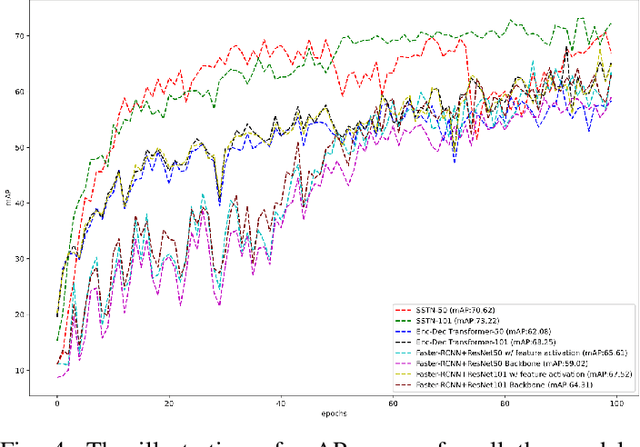

Perception of the environment plays a decisive role in the safe and secure operation of autonomous vehicles. This perception of the surroundings is similar to human visual representation: the human brain perceives the environment through different sensory channels and develops a view-invariant representation model. In this context, different exteroceptive sensors are deployed on the autonomous vehicle to perceive the environment, the most common being camera, lidar, and radar. Although these sensors have demonstrated their benefit in the visible spectrum domain, they have limited operating capability in adverse conditions, for instance at night, which may lead to fatal accidents. In this work, we explore thermal object detection to learn a view-invariant representation by employing a self-supervised contrastive learning approach. For this purpose, we propose a deep neural network, the Self Supervised Thermal Network (SSTN), which learns a feature embedding that maximizes the information between the visible and infrared spectrum domains through contrastive learning, and then uses these learned feature representations for thermal object detection with a multi-scale encoder-decoder transformer network. The proposed method is extensively evaluated on two publicly available datasets: the FLIR-ADAS dataset and the KAIST Multi-Spectral dataset. The experimental results illustrate the efficacy of the proposed method.
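The abstract does not give the exact contrastive objective used in SSTN. As a rough illustration of the general idea only, the sketch below computes a standard InfoNCE-style loss between paired visible and thermal embeddings. The function name `info_nce_loss`, the `temperature` value, and the use of cosine similarity are all assumptions made for this example, not details taken from the paper.

```python
import numpy as np


def info_nce_loss(visible_emb, thermal_emb, temperature=0.1):
    """InfoNCE-style loss for paired embeddings (illustrative, not the paper's loss).

    visible_emb, thermal_emb: arrays of shape (N, D); row i of each array is
    assumed to come from the same scene, so (i, i) is the positive pair and
    every (i, j != i) is a negative pair.
    """
    # L2-normalize so the dot product is cosine similarity.
    v = visible_emb / np.linalg.norm(visible_emb, axis=1, keepdims=True)
    t = thermal_emb / np.linalg.norm(thermal_emb, axis=1, keepdims=True)

    # Similarity of every visible embedding to every thermal embedding.
    logits = (v @ t.T) / temperature  # shape (N, N)

    # Numerically stable row-wise log-softmax.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))

    # The positive pair for row i sits on the diagonal.
    return float(-np.mean(np.diag(log_prob)))
```

Minimizing a loss of this form pulls matching visible and thermal embeddings together and pushes mismatched ones apart, which is one common way to maximize a lower bound on the mutual information between two views. In practice the embeddings would come from trainable encoders, and the loss would be written in a framework with automatic differentiation.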
