Spontaneous Emerging Preference in Two-tower Language Model


Oct 13, 2022

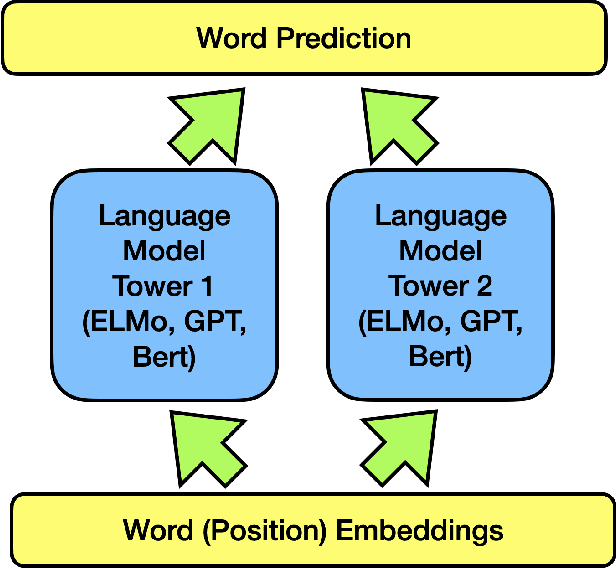

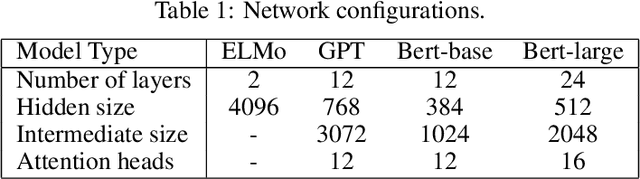

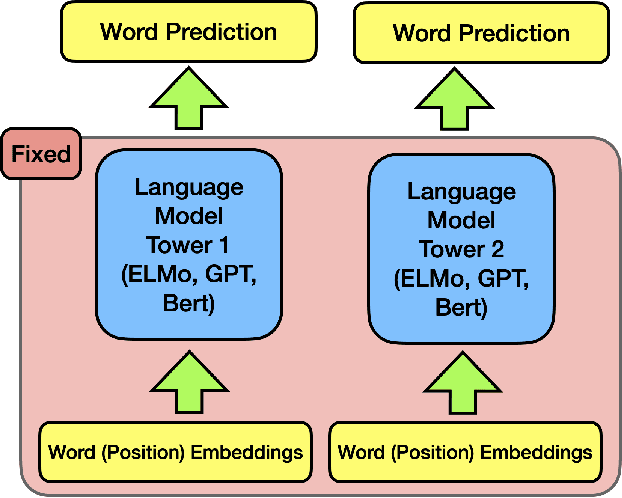

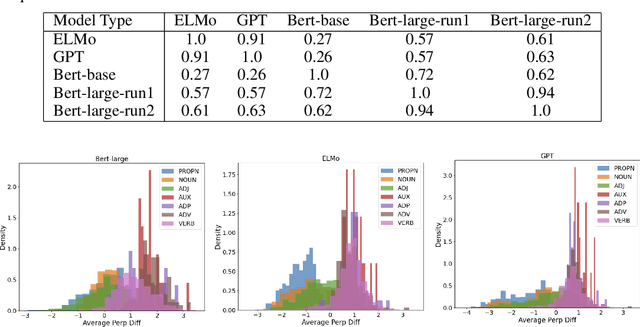

The ever-growing size of foundation language models has brought significant performance gains across many types of downstream tasks. Large size also has side effects, including deployment cost, availability issues, and environmental cost, which has motivated interest in alternative directions such as a divide-and-conquer scheme. In this paper, we ask a basic question: are language processes naturally divisible? We study this question in a simple two-tower language model setting, in which two language models with identical configurations are trained cooperatively side by side. In this setting, we discover the spontaneous emerging preference phenomenon: some tokens are consistently better predicted by one tower, while others are consistently better predicted by the other. The phenomenon is qualitatively stable across model configurations and model types, suggesting that it is an intrinsic property of natural language. This study suggests that interesting properties of natural language remain to be discovered, which may aid the future development of natural language processing techniques.
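As a rough illustration of what "consistently better predicted by one tower" could mean in practice, the sketch below computes, for each token, the fraction of its occurrences on which a first tower has lower loss than a second. This is not the paper's procedure: the function name `tower_preference`, the use of per-token losses as the comparison signal, the tie handling, and the toy numbers are all assumptions made for illustration only.

```python
from collections import defaultdict


def tower_preference(tokens, loss_a, loss_b):
    """Per-token fraction of occurrences where tower A beats tower B.

    Hypothetical helper: `loss_a` and `loss_b` are assumed to hold each
    tower's per-position loss (e.g. negative log-likelihood) for `tokens`.
    A tie counts as half a win for each tower.
    """
    wins = defaultdict(float)
    counts = defaultdict(int)
    for tok, la, lb in zip(tokens, loss_a, loss_b):
        counts[tok] += 1
        if la < lb:
            wins[tok] += 1.0
        elif la == lb:
            wins[tok] += 0.5
    # Values near 1.0 mean tower A is preferred, near 0.0 tower B.
    return {tok: wins[tok] / counts[tok] for tok in counts}


# Toy, made-up losses purely to exercise the function.
tokens = ["the", "cat", "the", "sat", "cat", "the"]
loss_a = [0.2, 1.5, 0.3, 0.9, 1.4, 0.1]
loss_b = [0.6, 0.7, 0.5, 0.9, 0.8, 0.4]
pref = tower_preference(tokens, loss_a, loss_b)
```

Under this reading, a token whose score stays near 0.0 or 1.0 across the corpus would count as having a stable preference for one tower, while scores near 0.5 would indicate no preference.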
