Spiking Inception Module for Multi-layer Unsupervised Spiking Neural Networks

Paper and Code

Feb 14, 2020

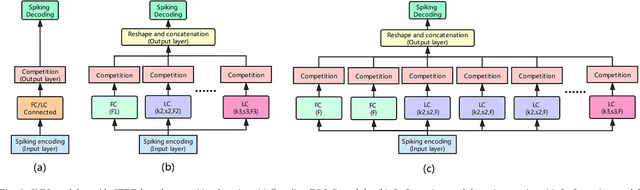

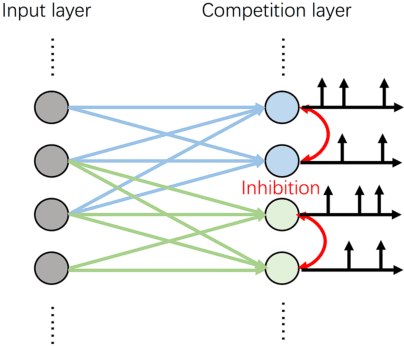

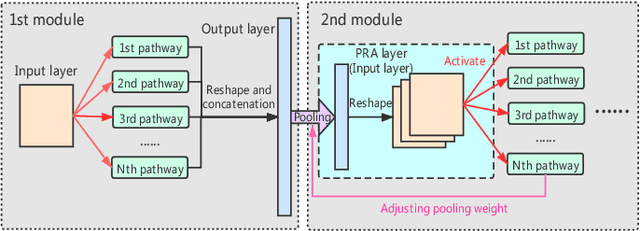

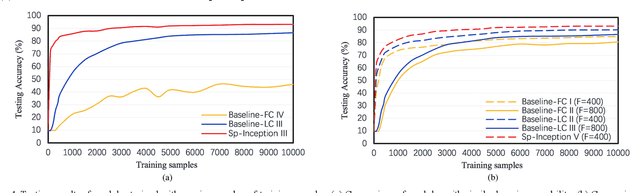

Spiking Neural Networks (SNNs), as a brain-inspired approach, are attracting attention due to their potential to enable hardware with ultra-high energy efficiency. Competitive learning based on Spike-Timing-Dependent Plasticity (STDP) is a popular method for training unsupervised SNNs. However, previous unsupervised SNNs trained through this method are limited to shallow networks with only one learnable layer and cannot achieve satisfactory results when compared with multi-layer SNNs. In this paper, we ease this limitation in three ways: 1) we propose the Spiking Inception (Sp-Inception) module, inspired by the Inception module in the Artificial Neural Network (ANN) literature. This module is trained through STDP-based competitive learning and outperforms baseline modules in learning capability, learning efficiency, and robustness; 2) we propose the Pooling-Reshape-Activate (PRA) layer to make the Sp-Inception module stackable; 3) we stack multiple Sp-Inception modules to construct multi-layer SNNs. Our method greatly exceeds baseline methods on image classification tasks and reaches state-of-the-art results on the MNIST dataset among existing unsupervised SNNs.
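
For readers unfamiliar with STDP-based competitive learning, the general idea can be sketched as follows. This is an illustration only, not the paper's implementation: the function name `wta_stdp_step`, the learning rates `a_plus` and `a_minus`, the binary spike flags, and the use of the largest input drive as a stand-in for "fires first" are all assumptions made for this sketch. Only the winning neuron updates its weights, strengthening synapses from inputs that spiked and weakening the rest.

```python
def wta_stdp_step(weights, pre_spiked, a_plus=0.1, a_minus=0.05):
    """One winner-take-all step with a simplified, assumed STDP rule.

    weights: list of per-neuron weight lists, each value in [0, 1].
    pre_spiked: list of 0/1 flags, 1 if that input spiked before the output spike.
    Updates the winner's weights in place and returns the winner's index.
    """
    # Total input drive per output neuron; the largest drive is used here
    # as a proxy for the neuron that would reach threshold first.
    drives = [sum(w * s for w, s in zip(row, pre_spiked)) for row in weights]
    winner = max(range(len(weights)), key=lambda i: drives[i])

    # Lateral inhibition is modelled by updating only the winner.
    row = weights[winner]
    for j, spiked in enumerate(pre_spiked):
        if spiked:
            # Potentiation: move the weight toward 1.
            row[j] += a_plus * (1.0 - row[j])
        else:
            # Depression: move the weight toward 0.
            row[j] -= a_minus * row[j]
    return winner


# Two output neurons with different initial preferences, one input pattern.
weights = [[0.5, 0.5, 0.1, 0.1], [0.1, 0.1, 0.5, 0.5]]
winner = wta_stdp_step(weights, [1, 1, 0, 0])
```

Repeating such steps over many input patterns tends to make each neuron specialise on a different pattern, which is the behaviour that single-layer STDP networks rely on.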
