Speech Recognition Front End Without Information Loss


Mar 30, 2015

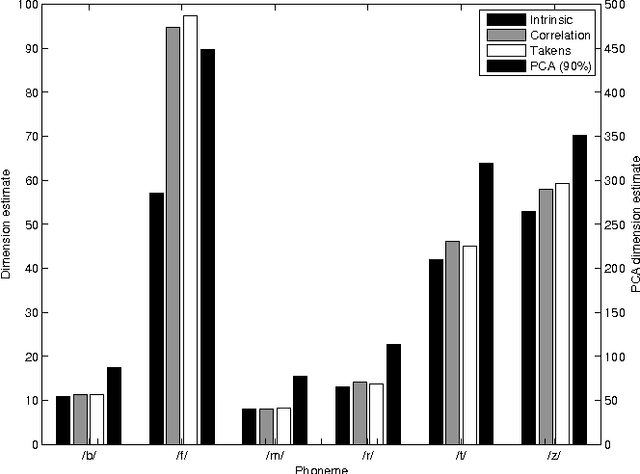

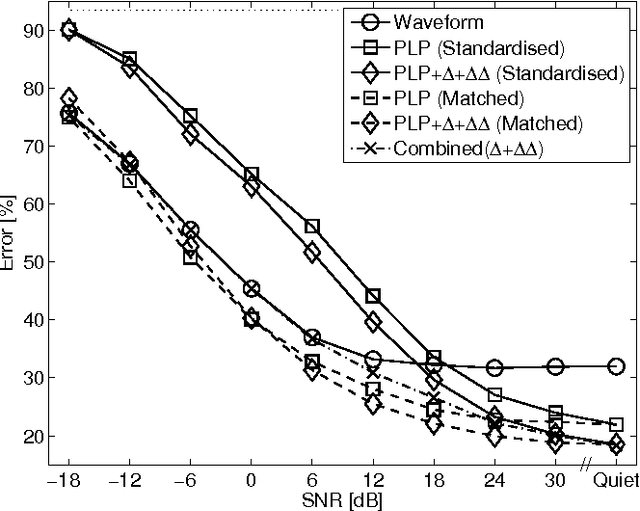

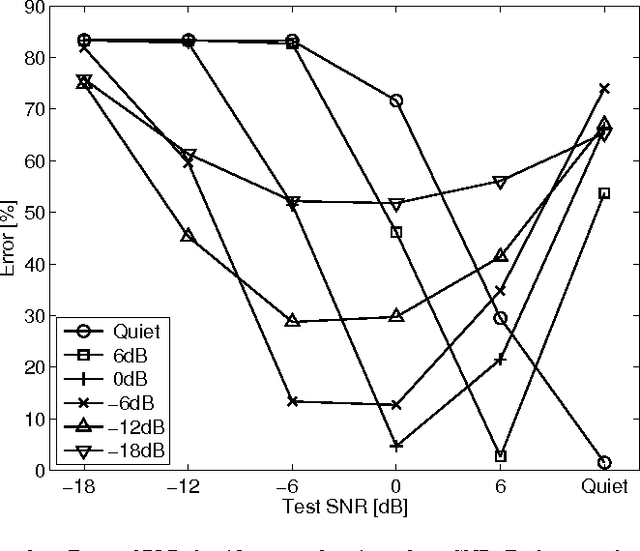

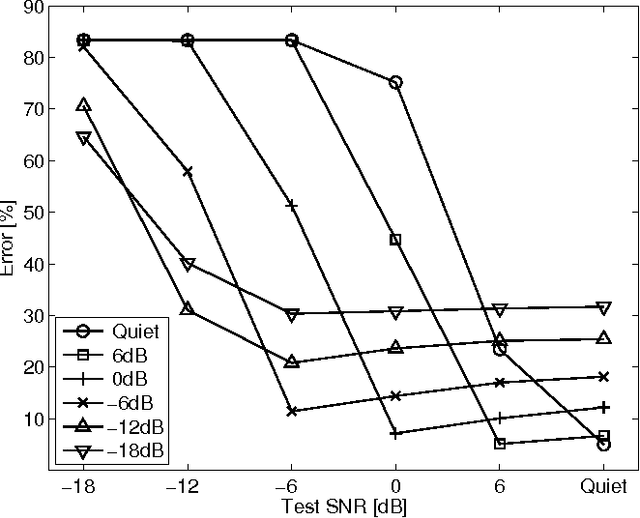

Speech representation and modelling in high-dimensional spaces of acoustic waveforms, or a linear transformation thereof, are investigated with the aim of improving the robustness of automatic speech recognition to additive noise. The motivation behind this approach is twofold: (i) the information in acoustic waveforms that is usually discarded when extracting low-dimensional features might aid robust recognition by virtue of structured redundancy analogous to channel coding; (ii) linear feature domains allow for exact noise adaptation, whereas representations that involve non-linear processing make noise adaptation challenging. We therefore develop a generative framework for phoneme modelling in high-dimensional linear feature domains and use it in phoneme classification and recognition tasks. Results show that classification and recognition in this framework outperform analogous PLP and MFCC classifiers below 18 dB SNR. Combining the high-dimensional and MFCC features at the likelihood level performs uniformly better than either individual representation across all noise levels.
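
To illustrate why a linear feature domain permits exact noise adaptation, here is a minimal sketch under stated assumptions, not the paper's implementation. It assumes each phoneme class is modelled by a single Gaussian over waveform-domain vectors and that the noise is zero-mean white Gaussian, independent of the speech, with known variance. Under those assumptions the noisy observation `y = x + n` is again Gaussian, with the same mean and with the noise covariance added to the clean covariance. The function names (`adapt_gaussian`, `log_gaussian`, `classify`) and the single-Gaussian class model are hypothetical choices made for this example.

```python
import numpy as np

def adapt_gaussian(mean_clean, cov_clean, noise_var):
    """Adapt a clean-speech Gaussian to additive noise y = x + n.

    Assumption: n ~ N(0, noise_var * I), independent of x. The sum of
    independent Gaussians is Gaussian, so the adapted model is exact.
    """
    d = mean_clean.shape[0]
    return mean_clean, cov_clean + noise_var * np.eye(d)

def log_gaussian(y, mean, cov):
    """Log-density of a multivariate Gaussian evaluated at y."""
    d = y.shape[0]
    diff = y - mean
    _, logdet = np.linalg.slogdet(cov)
    maha = diff @ np.linalg.solve(cov, diff)
    return -0.5 * (d * np.log(2.0 * np.pi) + logdet + maha)

def classify(y, class_models, noise_var):
    """Pick the class whose noise-adapted model gives the highest likelihood.

    class_models maps a label to a (mean, covariance) pair for clean speech.
    """
    scores = {}
    for label, (mean, cov) in class_models.items():
        adapted_mean, adapted_cov = adapt_gaussian(mean, cov, noise_var)
        scores[label] = log_gaussian(y, adapted_mean, adapted_cov)
    return max(scores, key=scores.get)
```

With non-linear features such as log-spectral or cepstral coefficients, the noise no longer adds to the features, so the adapted distribution generally has no such closed form. That is the contrast the abstract draws.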
