Spectral Signatures in Backdoor Attacks

Paper and Code

Nov 01, 2018

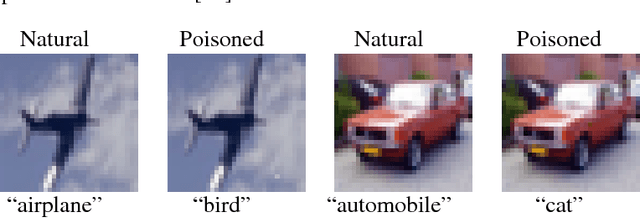

A recent line of work has uncovered a new form of data poisoning: so-called \emph{backdoor} attacks. These attacks are particularly dangerous because they do not affect a network's behavior on typical, benign data. Rather, the network only deviates from its expected output when triggered by a perturbation planted by an adversary. In this paper, we identify a new property of all known backdoor attacks, which we call \emph{spectral signatures}. This property allows us to utilize tools from robust statistics to thwart the attacks. We demonstrate the efficacy of these signatures in detecting and removing poisoned examples on real image sets and state of the art neural network architectures. We believe that understanding spectral signatures is a crucial first step towards designing ML systems secure against such backdoor attacks

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge