Spectral Leakage and Rethinking the Kernel Size in CNNs


Jan 25, 2021

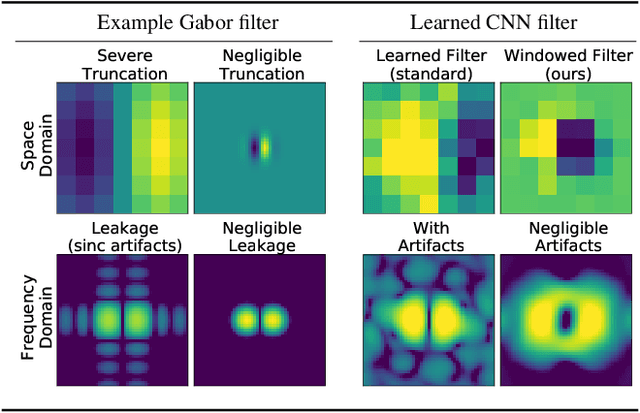

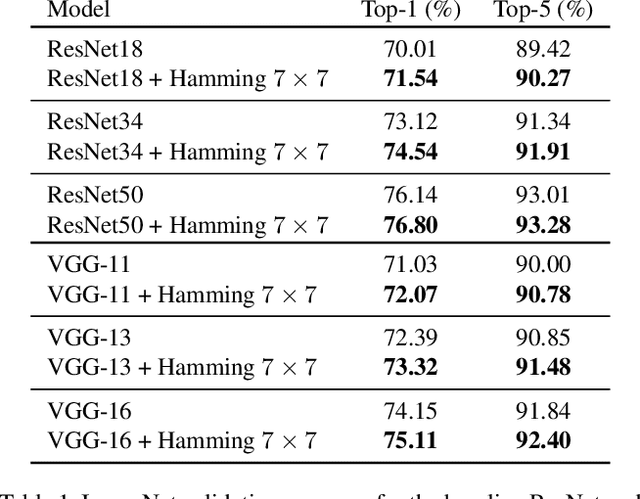

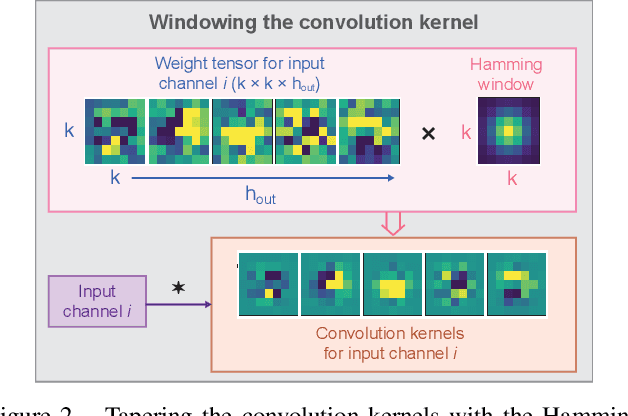

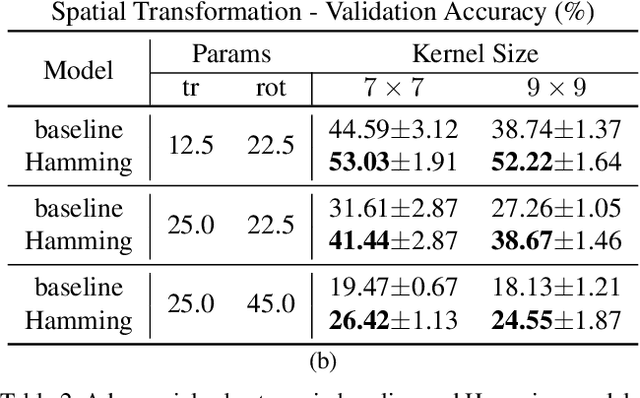

Convolutional layers in CNNs implement linear filters that decompose the input into different frequency bands. However, most modern architectures neglect standard principles of filter design when choosing the size and shape of the convolutional kernel. In this work, we consider the well-known problem of spectral leakage caused by windowing artifacts in filtering operations, in the context of CNNs. We show that the small size of CNN kernels makes them susceptible to spectral leakage, which may induce performance-degrading artifacts. To address this issue, we propose using larger kernel sizes together with the Hamming window function to alleviate leakage in CNN architectures. We demonstrate improved classification accuracy over baselines with conventional $3\times 3$ kernels on multiple benchmark datasets, including Fashion-MNIST, CIFAR-10, CIFAR-100 and ImageNet, through the simple use of a standard window function in convolutional layers. Finally, we show that CNNs employing the Hamming window display increased robustness against certain types of adversarial attacks.
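The following pure-Python code is a minimal sketch of the windowing idea. The abstract does not give these details, so several choices are assumptions:

- The window is a symmetric Hamming window, using the `numpy.hamming` convention.
- The window multiplies the kernel weights element-wise.
- A 2-D kernel uses the outer product of two 1-D windows. The paper may use a different 2-D form.

All helper names (`hamming`, `hamming_2d`, `apply_window`, `peak_sidelobe_ratio`) are hypothetical. To quantify leakage, the sketch measures the peak sidelobe of a 1-D filter's frequency response. It compares untapered taps (plain truncation, i.e. a rectangular window) with Hamming-tapered taps of the same length.

```python
import cmath
import math


def hamming(n: int) -> list[float]:
    """Symmetric length-n Hamming window (same convention as numpy.hamming)."""
    if n == 1:
        return [1.0]
    return [0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def hamming_2d(n: int) -> list[list[float]]:
    """n x n window as the outer product of two 1-D Hamming windows (assumed separable form)."""
    w = hamming(n)
    return [[wi * wj for wj in w] for wi in w]


def apply_window(kernel: list[list[float]], window: list[list[float]]) -> list[list[float]]:
    """Element-wise product of kernel weights and window: the taps the convolution would use."""
    return [[k * w for k, w in zip(k_row, w_row)] for k_row, w_row in zip(kernel, window)]


def peak_sidelobe_ratio(taps: list[float], grid: int = 2048) -> float:
    """Largest sidelobe magnitude relative to the main-lobe peak of a 1-D filter's DTFT.

    |W(omega)| is sampled on grid + 1 points in [0, pi]. The main lobe is taken to end
    at the first local minimum, and everything after it counts as sidelobe (leakage).
    Assumes non-negative taps, so the main-lobe peak is at omega = 0.
    """
    mags = []
    for k in range(grid + 1):
        omega = math.pi * k / grid
        mags.append(abs(sum(t * cmath.exp(-1j * omega * n) for n, t in enumerate(taps))))
    for i in range(1, grid):
        if mags[i] < mags[i - 1] and mags[i] <= mags[i + 1]:
            return max(mags[i:]) / mags[0]
    raise ValueError("no sidelobe found on the sampled grid")


n = 7
box = [1.0] * n  # untapered taps: plain truncation, i.e. a rectangular window
print("rectangular:", round(peak_sidelobe_ratio(box), 3))
print("hamming:    ", round(peak_sidelobe_ratio(hamming(n)), 3))

kernel = [[1.0] * n for _ in range(n)]  # stand-in for learned 7x7 weights
effective = apply_window(kernel, hamming_2d(n))
print(round(effective[3][3], 4), round(effective[0][0], 4))  # 1.0 0.0064
```

The Hamming-tapered taps should show a much lower peak sidelobe than the rectangular ones, meaning less energy leaks into frequencies outside the main lobe. In a CNN, the same multiplication would presumably be applied to the learnable weights on every forward pass, so the taper stays fixed while the weights train. This is an assumption about the implementation, not a detail stated in the abstract.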
