Specification-Driven Neural Network Reduction for Scalable Formal Verification

Paper and Code

May 03, 2023

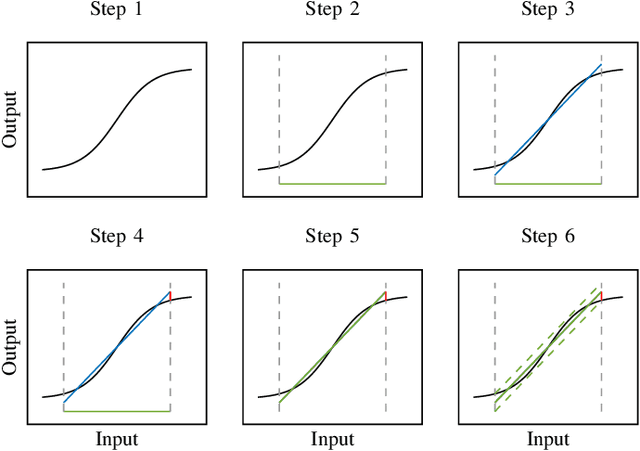

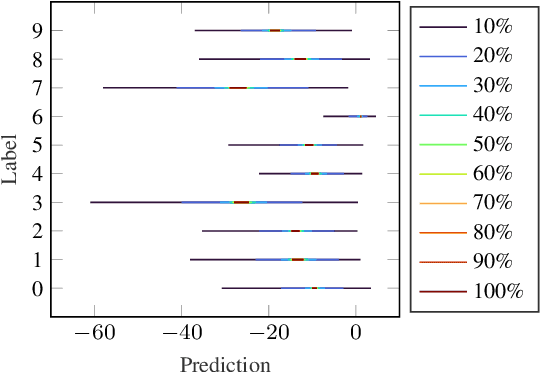

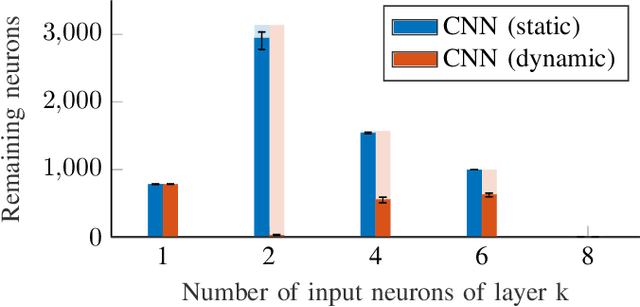

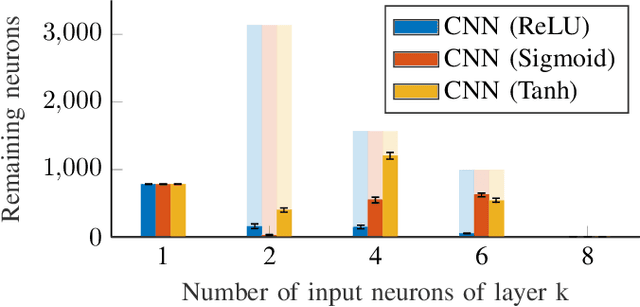

Formal verification of neural networks is essential before their deployment in safety-critical settings. However, existing methods for formally verifying neural networks are not yet scalable enough to handle practical problems that involve a large number of neurons. In this work, we propose a novel approach to address this challenge: A conservative neural network reduction approach that ensures that the verification of the reduced network implies the verification of the original network. Our approach constructs the reduction on-the-fly, while simultaneously verifying the original network and its specifications. The reduction merges all neurons of a nonlinear layer with similar outputs and is applicable to neural networks with any type of activation function such as ReLU, sigmoid, and tanh. Our evaluation shows that our approach can reduce a network to less than 5% of the number of neurons and thus to a similar degree the verification time is reduced.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge