SPCL: A New Framework for Domain Adaptive Semantic Segmentation via Semantic Prototype-based Contrastive Learning


Nov 24, 2021

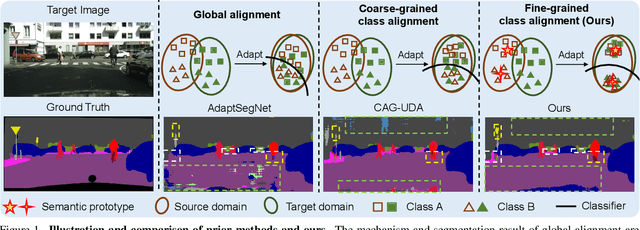

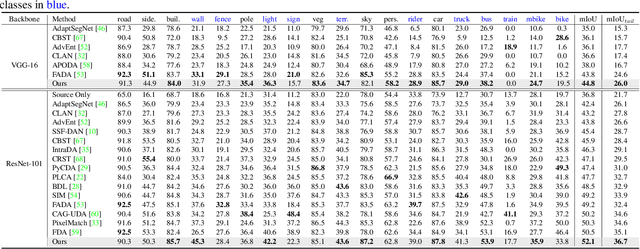

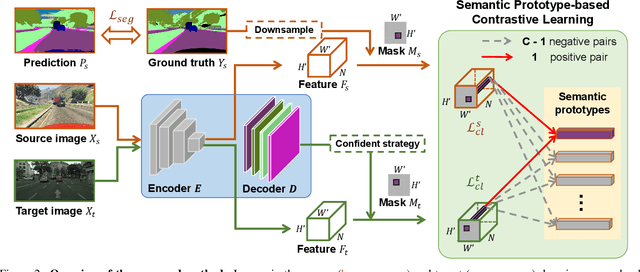

Despite significant progress in supervised semantic segmentation, deploying segmentation models to unseen domains remains challenging because of domain bias. Domain adaptation can help by transferring knowledge from a labeled source domain to an unlabeled target domain. Previous methods typically perform the adaptation on global features; however, they often ignore the local semantic affiliation of each pixel in the feature space, which reduces discriminability. To address this issue, we propose a novel semantic prototype-based contrastive learning framework for fine-grained class alignment. Specifically, the semantic prototypes provide supervisory signals for per-pixel discriminative representation learning, and each pixel of the source and target domains is required, in the feature space, to reflect the content of its corresponding semantic prototype. In this way, our framework explicitly pulls intra-class pixel representations closer together and pushes inter-class pixel representations further apart, which improves the robustness of the segmentation model and alleviates the domain shift problem. Our method is easy to implement and attains superior results compared to state-of-the-art approaches, as demonstrated in a number of experiments. The code is publicly available at [this https URL](https://github.com/BinhuiXie/SPCL).
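The idea of pulling each pixel toward its class prototype and away from the others can be illustrated with a small, dependency-free sketch. This is not the authors' implementation: the abstract does not specify the loss, so the sketch assumes that a prototype is the mean feature of a class, that similarity is cosine similarity, and that the objective is a softmax (InfoNCE-style) loss with a temperature. The function names `class_prototypes` and `prototype_contrastive_loss` and the `temperature` parameter are hypothetical. For target pixels, which have no labels, pseudo-labels would have to stand in for `labels`; that is also an assumption.

```python
import math


def normalize(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def class_prototypes(features, labels, num_classes):
    """Assumed prototype definition: the mean feature vector of each class."""
    dim = len(features[0])
    sums = [[0.0] * dim for _ in range(num_classes)]
    counts = [0] * num_classes
    for f, y in zip(features, labels):
        counts[y] += 1
        for i, x in enumerate(f):
            sums[y][i] += x
    # Classes with no pixels keep an all-zero prototype.
    return [[s / max(c, 1) for s in row] for row, c in zip(sums, counts)]


def prototype_contrastive_loss(features, labels, prototypes, temperature=0.1):
    """Assumed loss: cross-entropy over pixel-to-prototype cosine similarities.

    The prototype of a pixel's own class is the positive; all other
    prototypes act as negatives.
    """
    protos = [normalize(p) for p in prototypes]
    total = 0.0
    for f, y in zip(features, labels):
        f = normalize(f)
        logits = [sum(a * b for a, b in zip(f, p)) / temperature for p in protos]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_sum - logits[y]
    return total / len(features)


# Toy example: four "pixel" features in 2-D, two classes.
features = [[1.0, 0.1], [0.9, 0.0], [0.0, 1.0], [0.1, 0.8]]
labels = [0, 0, 1, 1]
protos = class_prototypes(features, labels, num_classes=2)

loss_correct = prototype_contrastive_loss(features, labels, protos)
loss_swapped = prototype_contrastive_loss(features, [1, 1, 0, 0], protos)
```

In this toy setup, `loss_correct` is lower than `loss_swapped`, because pixels assigned to the prototype they actually resemble incur a smaller penalty. Minimizing such a loss over the feature extractor would tighten each class cluster around its prototype, which is the behavior the abstract describes.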
