Spatial Contrastive Learning for Few-Shot Classification

Paper and Code

Dec 26, 2020

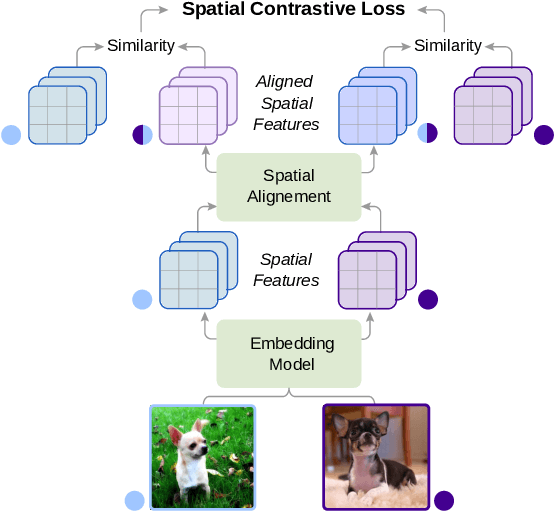

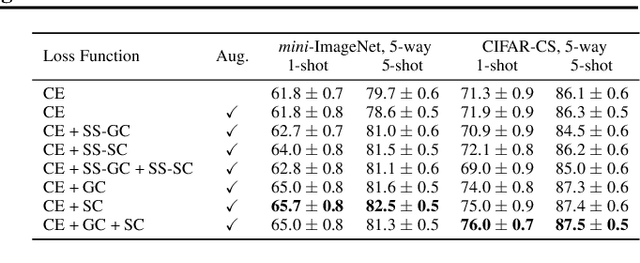

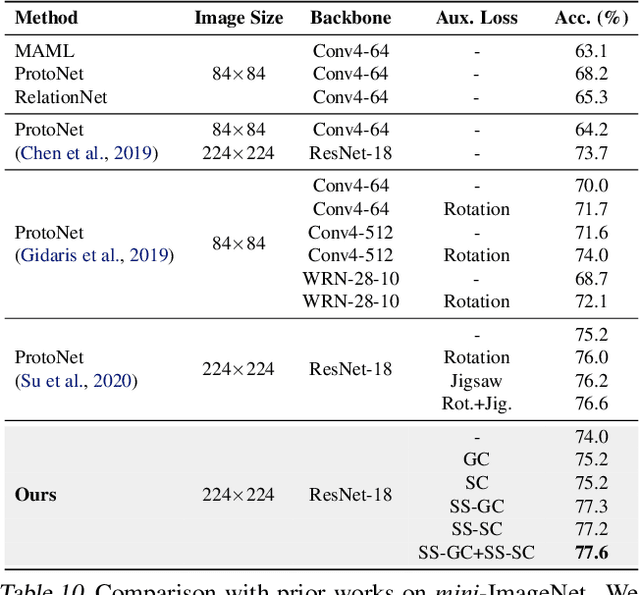

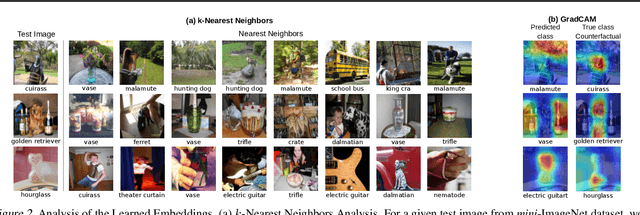

Existing few-shot classification methods rely to some degree on the cross-entropy (CE) loss to learn transferable representations that facilitate test-time adaptation to unseen classes with limited data. However, the CE loss has several shortcomings, e.g., it induces representations that are excessively discriminative towards seen classes, which reduces their transferability to unseen classes and results in sub-optimal generalization. In this work, we explore contrastive learning as an auxiliary training objective that acts as a data-dependent regularizer to promote more general and transferable features. Instead of using the standard contrastive objective, which suppresses local discriminative features, we propose a novel attention-based spatial contrastive objective to learn locally discriminative and class-agnostic features. Through extensive experiments, we show that the proposed method outperforms state-of-the-art approaches, confirming the importance of learning good and transferable embeddings for few-shot learning.
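To make the idea of an attention-based spatial contrastive objective more concrete, here is a minimal NumPy sketch. It is an illustration under assumptions, not the authors' implementation: the function names (`spatial_similarity`, `spatial_contrastive_loss`), the dot-product attention without learned projections, the supervised-contrastive form of the loss, and the temperature value are all hypothetical choices made for this example. Each image is assumed to be represented by a grid of local features flattened to shape `(HW, d)`.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-8):
    """Scale vectors along `axis` to (approximately) unit length."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def spatial_similarity(feat_i, feat_j):
    """Similarity between two local feature maps of shape (HW, d).

    Assumption: feat_j is aligned to feat_i with plain dot-product
    attention (no learned query/key/value projections), then the cosine
    similarity is averaged over the spatial locations of feat_i.
    """
    d = feat_i.shape[-1]
    attn = softmax(feat_i @ feat_j.T / np.sqrt(d), axis=-1)  # (HW, HW)
    aligned_j = attn @ feat_j                                # (HW, d)
    cos = np.sum(l2_normalize(feat_i) * l2_normalize(aligned_j), axis=-1)
    return cos.mean()


def spatial_contrastive_loss(feats, labels, temperature=0.1):
    """Supervised contrastive loss over spatial similarities.

    feats:  (N, HW, d) local features for a batch of N images.
    labels: (N,) integer class labels; same-label pairs are positives.
    The temperature of 0.1 is an illustrative default, not a reported value.
    """
    n = feats.shape[0]
    sim = np.array([[spatial_similarity(feats[a], feats[b])
                     for b in range(n)] for a in range(n)])
    not_self = ~np.eye(n, dtype=bool)
    positives = (labels[:, None] == labels[None, :]) & not_self

    # Exclude each anchor's similarity to itself from the denominator.
    logits = np.where(not_self, sim / temperature, -np.inf)
    row_max = logits.max(axis=1, keepdims=True)
    log_denom = row_max + np.log(
        np.exp(logits - row_max).sum(axis=1, keepdims=True))
    log_prob = logits - log_denom

    pos_count = positives.sum(axis=1)
    has_pos = pos_count > 0
    if not has_pos.any():
        return 0.0  # no positive pairs in this batch
    pos_log_prob = np.where(positives, log_prob, 0.0).sum(axis=1)
    return float(-(pos_log_prob[has_pos] / pos_count[has_pos]).mean())


# Usage: 4 images, a 3x3 grid of 8-dimensional local features each.
rng = np.random.default_rng(0)
feats = rng.normal(size=(4, 9, 8))
labels = np.array([0, 0, 1, 1])
loss = spatial_contrastive_loss(feats, labels)
```

In a training setup of the kind the abstract describes, a term like this would be added to the CE loss with some weighting, so that it acts as a regularizer rather than the sole objective; the weighting scheme is left out here because the abstract does not specify it.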
