Sparsified Privacy-Masking for Communication-Efficient and Privacy-Preserving Federated Learning

Paper and Code

Aug 01, 2020

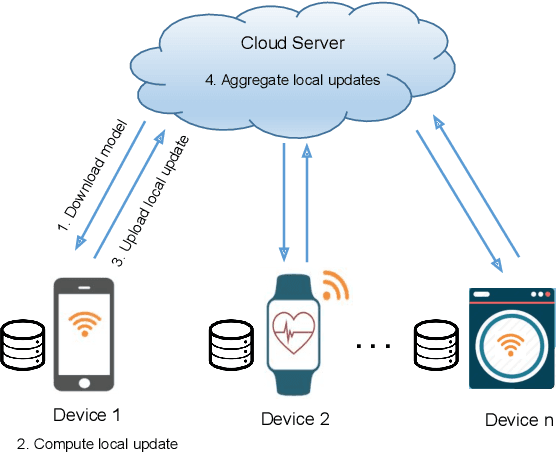

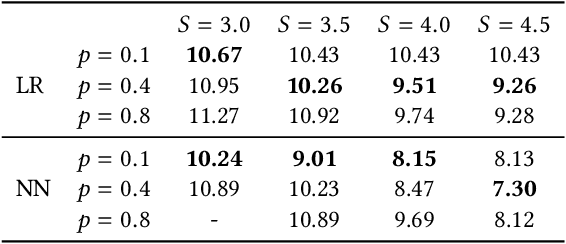

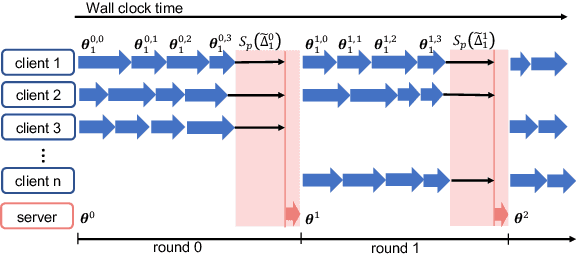

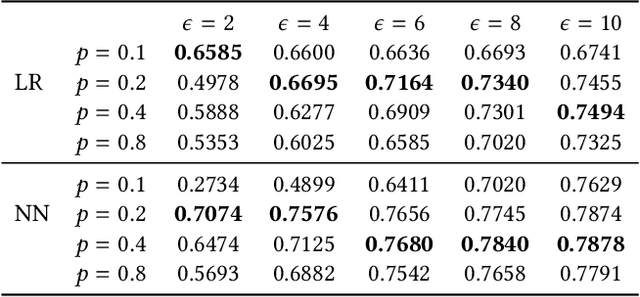

Federated learning has received significant interest recently due to its capability of learning a shared machine learning model across smart devices without accessing their private data in the era of the Internet of Things. This paper jointly considers two critical issues of federated learning -- data privacy and communication efficiency -- and develops a communication-efficient and differentially private federated learning scheme called CPFed. The main challenge in addressing both issues together is that data compression techniques introduce compression errors, which often increase the number of training iterations required to reach a desired training loss, while the differential privacy guarantee usually deteriorates as the number of training iterations grows. To reconcile this dilemma, we propose sparsified privacy-masking, which first adds random noise to the model update and then applies an unbiased random sparsifier before each device uploads its model update. By using sparsified privacy-masking, our proposed CPFed scheme can achieve high communication efficiency and a strong data privacy guarantee at the same time while preserving model accuracy. We provide an explicit end-to-end privacy guarantee of CPFed using zero-concentrated differential privacy and give its theoretical convergence rates for both convex and non-convex models. Through extensive numerical experiments on real-world datasets, we demonstrate the effectiveness and efficiency of our proposed method.
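The two-step masking described in the abstract (add noise, then sparsify without bias) can be illustrated with a minimal sketch. This is not the paper's implementation: the Gaussian noise distribution, the independent per-coordinate keep rule, and the names `sparsified_privacy_mask`, `densify`, `sigma`, and `keep_prob` are all assumptions made for illustration. The abstract only states that random noise is added first and an unbiased random sparsifier is applied second.

```python
import random


def sparsified_privacy_mask(update, sigma, keep_prob, rng):
    """Noise a model update, then sparsify it without introducing bias.

    update    -- list of floats (the local model update); hypothetical format
    sigma     -- noise standard deviation (Gaussian noise is an assumption)
    keep_prob -- probability of keeping each coordinate, in (0, 1]
    rng       -- a random.Random instance, passed in for reproducibility
    """
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")

    # Step 1: privacy masking. Add zero-mean noise to every coordinate.
    noisy = [x + rng.gauss(0.0, sigma) for x in update]

    # Step 2: random sparsification. Keep each coordinate independently with
    # probability keep_prob and rescale by 1 / keep_prob, so the expected
    # value of the sparse vector equals the noisy vector (unbiasedness).
    sparse = {}
    for i, value in enumerate(noisy):
        if rng.random() < keep_prob:
            sparse[i] = value / keep_prob
    return sparse  # only the kept (index, value) pairs would be uploaded


def densify(sparse, dim):
    """Rebuild a dense vector from the uploaded (index, value) pairs."""
    dense = [0.0] * dim
    for i, value in sparse.items():
        dense[i] = value
    return dense


# Usage: a device masks its update and uploads only the surviving entries.
rng = random.Random(0)
local_update = [0.5, -1.0, 2.0, 0.0]
message = sparsified_privacy_mask(local_update, sigma=0.1, keep_prob=0.5, rng=rng)
received = densify(message, dim=len(local_update))
```

Applying the sparsifier after the noise means the uploaded message is a function of the already-noised update only. With `keep_prob` below 1, a device sends roughly `keep_prob` times as many coordinates per round, at the cost of extra variance from the rescaling.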
