Sparse Normal Means Estimation with Sublinear Communication


Feb 05, 2021

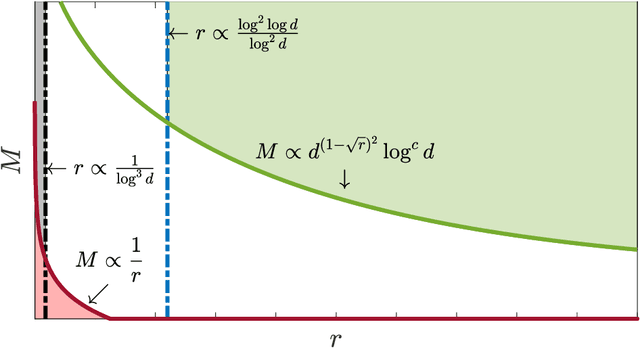

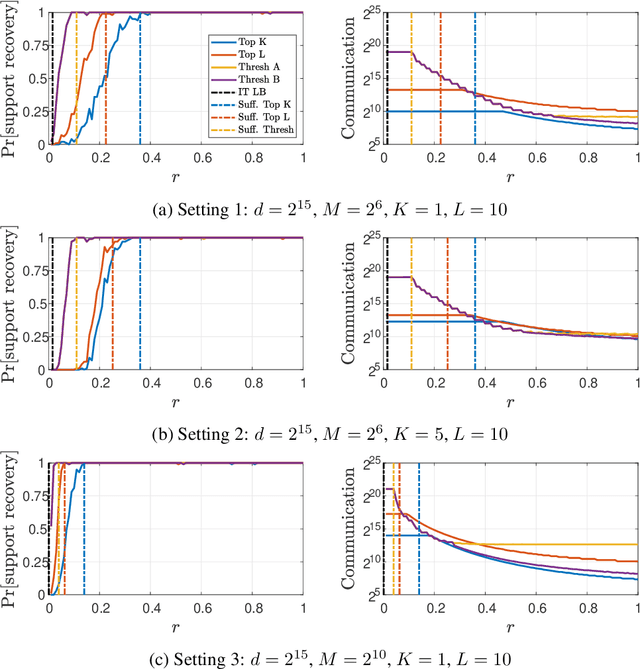

We consider the problem of sparse normal means estimation in a distributed setting with communication constraints. We assume there are $M$ machines, each holding a $d$-dimensional observation of a $K$-sparse vector $\mu$ corrupted by additive Gaussian noise. A central fusion machine is connected to the $M$ machines in a star topology, and its goal is to estimate the vector $\mu$ with a low communication budget. Previous works have shown that to achieve the centralized minimax rate for the $\ell_2$ risk, the total communication must be high: at least linear in the dimension $d$. This phenomenon occurs, however, at very weak signals. We show that once the signal-to-noise ratio (SNR) is slightly higher, the support of $\mu$ can be correctly recovered with much less communication. Specifically, we present two algorithms for the distributed sparse normal means problem, and prove that above a certain SNR threshold, with high probability, they recover the correct support with total communication that is sublinear in the dimension $d$. Furthermore, the communication decreases exponentially as a function of signal strength. If in addition $KM\ll d$, then with an additional round of sublinear communication, our algorithms achieve the centralized rate for the $\ell_2$ risk. Finally, we present simulations that illustrate the performance of our algorithms in different parameter regimes.
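To make the setting concrete, here is a minimal simulation sketch of the star-topology model described above. It is not the paper's algorithms. It assumes a simple illustrative scheme: each machine sends the fusion center only the indices whose observed value exceeds a fixed threshold, and the center keeps the $K$ most frequently reported indices. The function name `simulate`, the unit noise variance, the equal-magnitude nonzero entries, and all parameter values are assumptions chosen for illustration.

```python
import math
import random


def simulate(d=2000, K=5, M=20, signal=5.0, threshold=4.0, seed=0):
    """Toy distributed support recovery by thresholding and voting.

    Hypothetical illustration only: every nonzero entry of mu equals
    `signal`, the noise is standard Gaussian, and `threshold` is an
    arbitrary choice rather than a tuned or theoretically derived value.
    """
    rng = random.Random(seed)
    support = set(rng.sample(range(d), K))  # true support of the K-sparse mu

    votes = {}      # index -> number of machines that reported it
    messages = 0    # total number of indices sent to the fusion center
    for _ in range(M):
        for j in range(d):
            mean = signal if j in support else 0.0
            x = mean + rng.gauss(0.0, 1.0)  # this machine's noisy observation
            if x > threshold:               # report only large coordinates
                votes[j] = votes.get(j, 0) + 1
                messages += 1

    # Fusion center: keep the K indices reported by the most machines.
    ranked = sorted(votes, key=lambda j: (-votes[j], j))
    estimate = set(ranked[:K])

    # Each reported index costs about ceil(log2 d) bits.
    bits = messages * math.ceil(math.log2(d))
    return support, estimate, bits


if __name__ == "__main__":
    support, estimate, bits = simulate()
    print("support recovered:", estimate == support)
    print("total bits sent:", bits, "vs. dimension d = 2000")
```

In this toy setup, raising `signal` relative to the noise lets `threshold` be set higher, so fewer null coordinates cross it and fewer indices are transmitted. This is the qualitative trade-off between signal strength and communication that the abstract describes, though the sketch carries none of the paper's guarantees.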
