Source-free unsupervised domain adaptation for cross-modality abdominal multi-organ segmentation


Nov 30, 2021

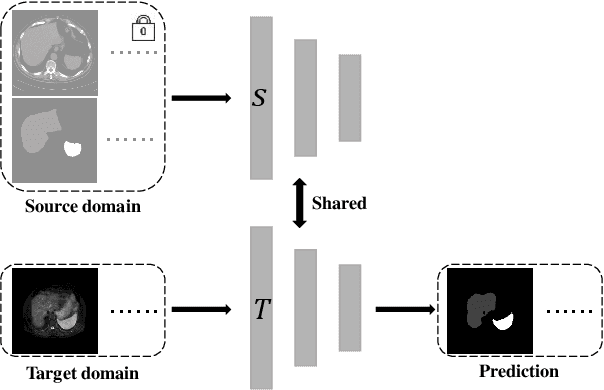

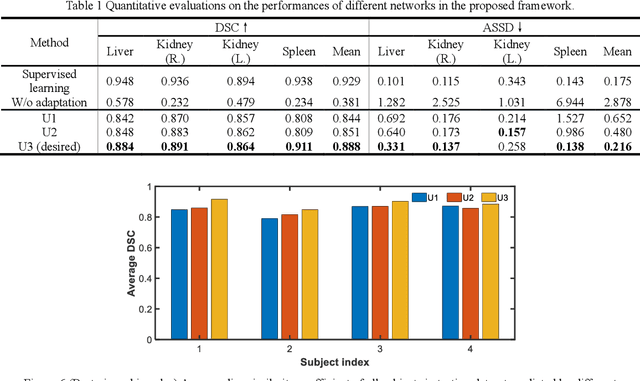

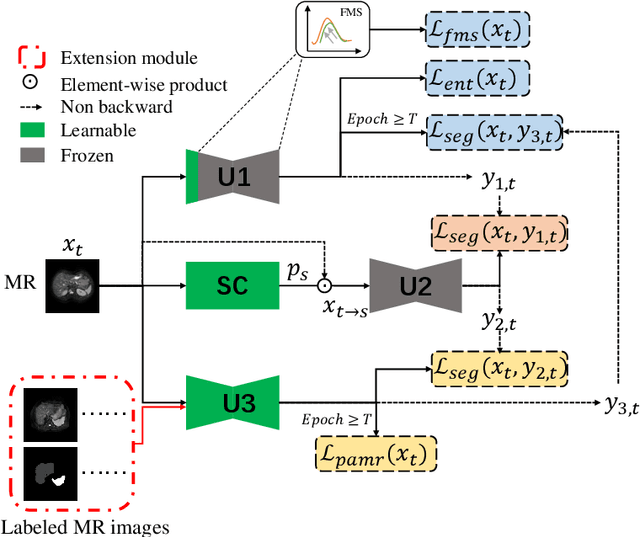

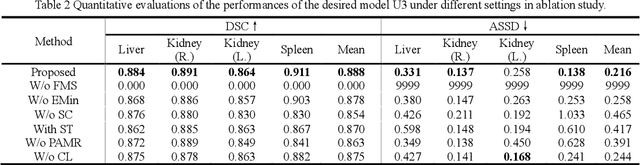

Domain adaptation is valuable for abdominal multi-organ segmentation because it transfers knowledge learned from a labeled source CT dataset to an unlabeled target MR dataset. At the same time, it is highly desirable to avoid the high annotation cost of the target dataset and to protect the privacy of the source dataset. We therefore propose a source-free unsupervised domain adaptation method for cross-modality abdominal multi-organ segmentation that does not access the source dataset. The proposed framework has two stages. In the first stage, a feature map statistics loss aligns the distributions of the source and target features in the top segmentation network, and an entropy minimization loss encourages high-confidence segmentations. The pseudo-labels output by the top segmentation network guide the style compensation network to generate source-like images. The pseudo-labels output by the middle segmentation network supervise the learning of the desired model (the bottom segmentation network). In the second stage, circular learning and pixel-adaptive mask refinement further improve the performance of the desired model. With this approach, we achieve Dice similarity coefficients of 0.884, 0.891, 0.864, and 0.911 for the liver, right kidney, left kidney, and spleen, respectively. In addition, the proposed approach extends easily to the setting where annotated target data are available: with only one labeled MR volume, the average Dice similarity coefficient improves from 0.888 to 0.922, close to that of supervised learning (0.929).
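To make two of the quantities named in the abstract concrete, the following is a minimal sketch, in plain Python, of an entropy minimization objective and of the Dice similarity coefficient. It is not the authors' implementation: the function names `mean_entropy` and `dice` are hypothetical, the inputs are flat lists rather than network tensors, and the exact form of the loss used in the paper is an assumption here (mean per-pixel Shannon entropy of the softmax output).

```python
import math

def mean_entropy(probs):
    """Mean Shannon entropy over pixels.

    probs: list of per-pixel class-probability lists (each sums to 1).
    Minimizing this value pushes predictions toward high confidence.
    (Assumed form of the entropy loss; the paper may weight or normalize it.)
    """
    eps = 1e-12  # avoids log(0) for zero-probability classes
    total = 0.0
    for p in probs:
        total += -sum(q * math.log(q + eps) for q in p)
    return total / len(probs)

def dice(pred, target, label):
    """Dice similarity coefficient for one organ label.

    pred, target: flat lists of integer labels of equal length.
    Returns 2|P ∩ T| / (|P| + |T|); defined as 1.0 when both are empty.
    """
    p = [x == label for x in pred]
    t = [x == label for x in target]
    inter = sum(a and b for a, b in zip(p, t))
    denom = sum(p) + sum(t)
    return 2.0 * inter / denom if denom else 1.0

# A confident pixel has near-zero entropy; an uncertain one has entropy log(2).
confident = mean_entropy([[1.0, 0.0]])
uncertain = mean_entropy([[0.5, 0.5]])

# Toy prediction: one of two predicted pixels overlaps the single true pixel.
toy_dice = dice([1, 1, 0, 0], [1, 0, 0, 0], label=1)

# Mean of the four per-organ scores reported in the abstract.
reported = [0.884, 0.891, 0.864, 0.911]
average_dice = sum(reported) / len(reported)
```

The mean of the four reported per-organ scores is 0.8875, which is consistent with the 0.888 average quoted as the unsupervised baseline in the abstract.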
