Source Camera Attribution from Strongly Stabilized Videos


Nov 26, 2019

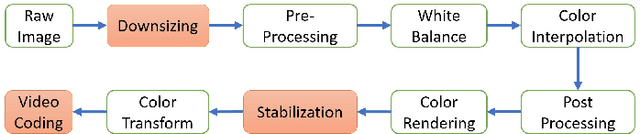

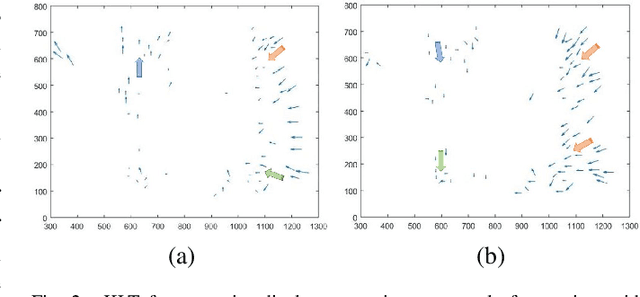

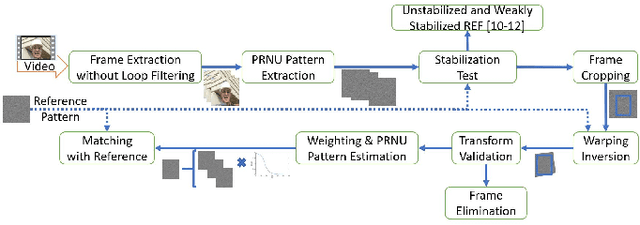

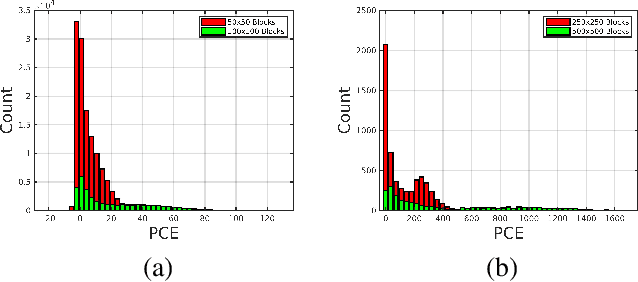

In-camera image stabilization, deployed by most cameras today, poses one of the most significant challenges to photo-response non-uniformity (PRNU) based source camera attribution from videos. When performed digitally, stabilization involves cropping, warping, and inpainting of video frames to eliminate unwanted camera motion. Hence, successful attribution requires inverting these transformations blindly. To address this challenge, we introduce a source camera verification method for videos that takes into account the spatially variant nature of stabilization transformations. Our method identifies transformations at a sub-frame level and incorporates a number of constraints to validate their correctness. The method also adopts a holistic approach to countering the disruptive effects of other video generation steps, such as video coding and downsizing, for more reliable attribution. Tests performed on a public dataset of stabilized videos show that the proposed method improves the attribution rate over existing methods by 17-19% without a significant impact on the false attribution rate.
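To make the idea of sub-frame matching concrete, the following is a minimal sketch and not the authors' implementation. It assumes a noise residual has already been extracted from a frame and that a camera fingerprint of matching scale is available. It only searches small integer translations for a single block, whereas the method described above handles more general warping and adds validation constraints. The names `normalized_correlation`, `best_block_shift`, and `max_shift` are hypothetical.

```python
import numpy as np


def normalized_correlation(a, b):
    """Zero-mean normalized correlation between two equally sized arrays."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def best_block_shift(residual_block, fingerprint, top, left, max_shift=3):
    """Search integer shifts of one block against the fingerprint.

    Assumption for illustration: the block nominally sits at (top, left)
    and stabilization moved it by at most max_shift pixels per axis.
    Returns (best_score, (dy, dx)).
    """
    h, w = residual_block.shape
    best_score, best_shift = -1.0, (0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            y, x = top + dy, left + dx
            # Skip candidate positions that fall outside the fingerprint.
            if y < 0 or x < 0:
                continue
            if y + h > fingerprint.shape[0] or x + w > fingerprint.shape[1]:
                continue
            candidate = fingerprint[y:y + h, x:x + w]
            score = normalized_correlation(residual_block, candidate)
            if score > best_score:
                best_score, best_shift = score, (dy, dx)
    return best_score, best_shift
```

Running this per block gives a local shift estimate for each sub-frame region. A fuller pipeline would check that neighbouring blocks agree before accepting a match, in the spirit of the correctness constraints mentioned in the abstract.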
