Solving Non-Convex Non-Differentiable Min-Max Games using Proximal Gradient Method

Paper and Code

Mar 18, 2020

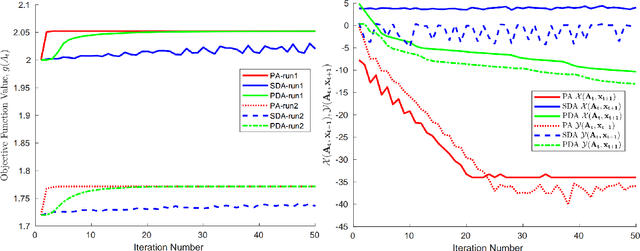

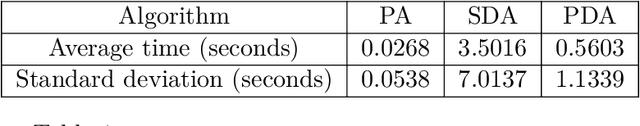

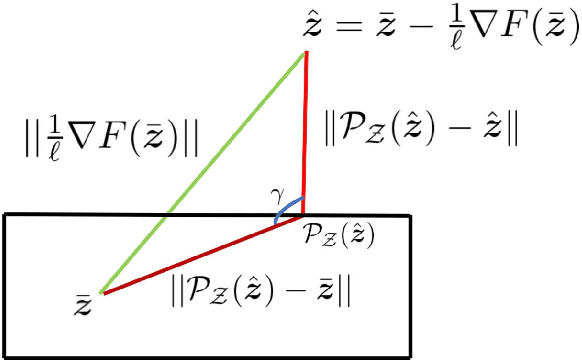

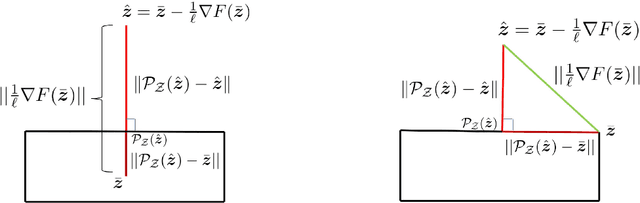

Min-max saddle point games appear in a wide range of applications in machine leaning and signal processing. Despite their wide applicability, theoretical studies are mostly limited to the special convex-concave structure. While some recent works generalized these results to special smooth non-convex cases, our understanding of non-smooth scenarios is still limited. In this work, we study special form of non-smooth min-max games when the objective function is (strongly) convex with respect to one of the player's decision variable. We show that a simple multi-step proximal gradient descent-ascent algorithm converges to $\epsilon$-first-order Nash equilibrium of the min-max game with the number of gradient evaluations being polynomial in $1/\epsilon$. We will also show that our notion of stationarity is stronger than existing ones in the literature. Finally, we evaluate the performance of the proposed algorithm through adversarial attack on a LASSO estimator.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge