Solving Forward and Inverse Problems Using Autoencoders

Jan 06, 2020

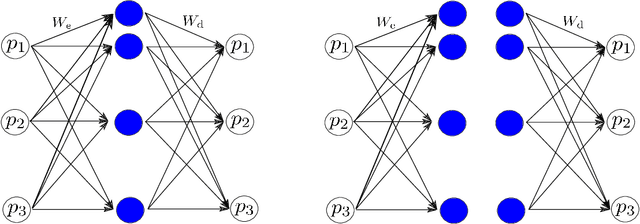

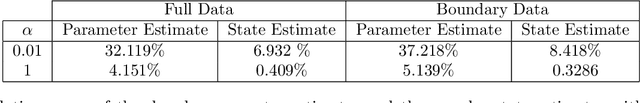

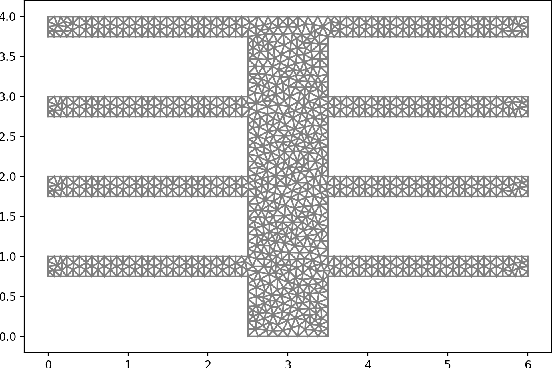

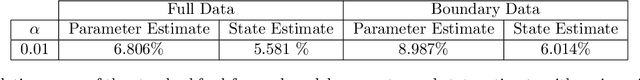

This work develops model-aware autoencoder networks as a new method for solving scientific forward and inverse problems. Autoencoders are unsupervised neural networks that learn new representations of data through appropriately selected architecture and regularization. The resulting mappings to and from the latent representation can be used to encode and decode the data. In our work, we set the data space to be the space of the parameter of interest that we wish to invert for. Further, to encode the underlying physical model into the autoencoder, we enforce the latent space of the autoencoder to be the space of observations of physically governed phenomena. In doing so, we leverage the well-known capability of deep neural networks as universal function approximators to simultaneously obtain both the parameter-to-observation and observation-to-parameter maps. The results suggest that learning the two maps simultaneously is synergistic and improves the inversion capability of the autoencoder.
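The idea of tying the latent space to the observation space can be illustrated with a small sketch. This is not the authors' implementation. It assumes a hypothetical linear forward model `A`, noise-free synthetic data, and linear encoder and decoder maps in place of deep networks. The loss used here (parameter reconstruction error plus a weighted latent-to-observation mismatch, with weight `lam`) is also an assumption chosen for illustration. Under these assumptions, the encoder plays the role of the parameter-to-observation map and the decoder plays the role of the observation-to-parameter map.

```python
import numpy as np


def train_model_aware_autoencoder(A, n_samples=256, lam=1.0, lr=0.02,
                                  steps=5000, seed=0):
    """Toy linear autoencoder whose latent space is tied to observations.

    A   : hypothetical linear forward model (observations = A @ parameters)
    lam : assumed weight on the latent-vs-observation mismatch term
    Returns (W_enc, W_dec): learned forward and inverse maps.
    """
    rng = np.random.default_rng(seed)
    n_obs, n_par = A.shape

    # Synthetic training pairs: parameters P and noise-free observations Y.
    P = rng.normal(size=(n_samples, n_par))
    Y = P @ A.T

    # Encoder (parameter -> latent) and decoder (latent -> parameter).
    W_enc = rng.normal(scale=0.1, size=(n_obs, n_par))
    W_dec = rng.normal(scale=0.1, size=(n_par, n_obs))

    for _ in range(steps):
        Z = P @ W_enc.T          # latent codes, meant to match observations
        P_hat = Z @ W_dec.T      # parameters reconstructed from latent codes

        r_rec = P_hat - P        # reconstruction residual (data space)
        r_lat = Z - Y            # latent residual (observation space)

        # Gradients of mean(||r_rec||^2 + lam * ||r_lat||^2) over samples.
        g_dec = 2.0 * r_rec.T @ Z / n_samples
        g_Z = 2.0 * (r_rec @ W_dec + lam * r_lat) / n_samples
        g_enc = g_Z.T @ P

        W_enc -= lr * g_enc
        W_dec -= lr * g_dec

    return W_enc, W_dec


# Hypothetical 2x2 invertible forward model, used only for this demo.
A = np.array([[2.0, 1.0],
              [0.0, 1.0]])
W_enc, W_dec = train_model_aware_autoencoder(A)

# The encoder should approximate A and the decoder should approximate
# its inverse, so decoding an observation recovers the parameter.
p_true = np.array([0.5, -1.0])
p_recovered = W_dec @ (A @ p_true)
```

In this toy setting both maps are learned in a single training loop: the latent penalty pulls the encoder toward the forward model, and the reconstruction term then fits the decoder as its inverse. A realistic inverse problem would typically be nonlinear, noisy, or ill-posed, and would need nonlinear networks and regularization that this sketch omits.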
