SoftEdge: Regularizing Graph Classification with Random Soft Edges


Apr 21, 2022

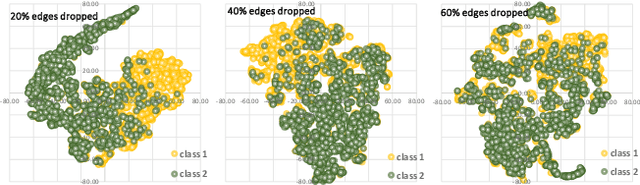

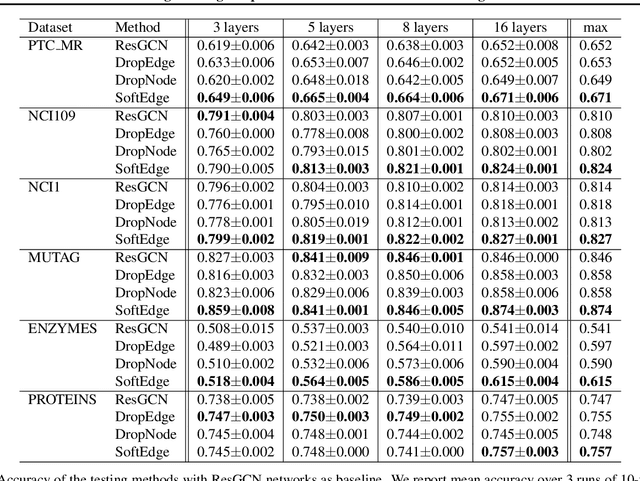

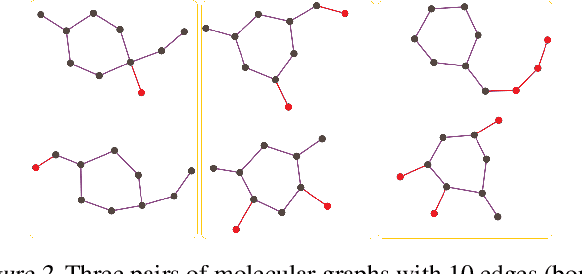

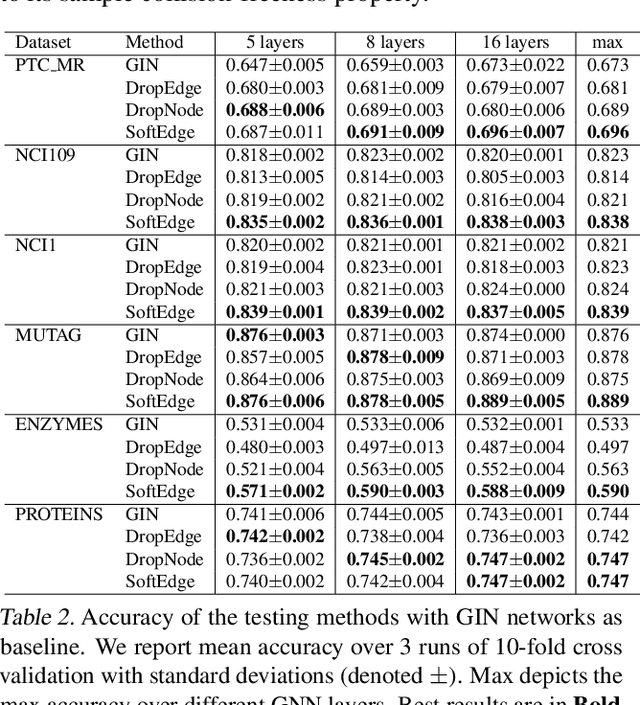

Graph data augmentation plays a vital role in regularizing Graph Neural Networks (GNNs), which learn by exchanging information along the edges of a graph in the form of message passing. Because they are effective, simple edge and node manipulations (e.g., addition and deletion) have been widely used in graph augmentation. In this paper, we identify a limitation of these common augmentation techniques: simple edge and node manipulations can create graphs whose structures are identical, or indistinguishable to message-passing GNNs, but whose labels conflict. This leads to the sample collision issue and thus degrades model performance. To address this problem, we propose SoftEdge, which assigns random weights to a portion of the edges of a given graph to construct dynamic neighborhoods over the graph. We prove that SoftEdge creates collision-free augmented graphs. We also show that this simple method achieves higher accuracy than popular node and edge manipulation approaches and is notably resilient to the accuracy degradation that comes with increasing GNN depth.
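The core idea of assigning random weights to a portion of the edges can be illustrated with a minimal sketch. This is not the authors' implementation. The function names `soft_edge_weights` and `weighted_sum_aggregate`, the `portion` parameter, the uniform sampling of weights from [0, 1), and the plain sum aggregation are all assumptions made for illustration.

```python
import random


def soft_edge_weights(edges, portion=0.2, seed=None):
    """Return one weight per edge.

    A randomly chosen `portion` of the edges gets a weight drawn uniformly
    from [0, 1) (an assumed distribution); all other edges keep weight 1.0.
    """
    rng = random.Random(seed)
    num_soft = int(round(portion * len(edges)))
    soft_ids = set(rng.sample(range(len(edges)), num_soft))
    return [rng.random() if i in soft_ids else 1.0 for i in range(len(edges))]


def weighted_sum_aggregate(num_nodes, edges, weights, features):
    """One sum-aggregation message-passing step over directed edges (src, dst).

    Each message is the source node's feature vector scaled by the edge weight.
    """
    dim = len(features[0])
    out = [[0.0] * dim for _ in range(num_nodes)]
    for (src, dst), w in zip(edges, weights):
        for k in range(dim):
            out[dst][k] += w * features[src][k]
    return out


# A 3-node path graph 0 - 1 - 2, stored as directed edges in both directions.
edges = [(0, 1), (1, 0), (1, 2), (2, 1)]
features = [[1.0], [2.0], [3.0]]

# Resample the weights at every training step so each pass sees a
# different "soft" neighborhood of the same underlying graph.
weights = soft_edge_weights(edges, portion=0.5, seed=0)
aggregated = weighted_sum_aggregate(3, edges, weights, features)
```

Because no edge is added or removed, the connectivity of the graph stays the same in this sketch and only the strength of individual messages varies between passes.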
