SOCIALGYM: A Framework for Benchmarking Social Robot Navigation


Sep 22, 2021

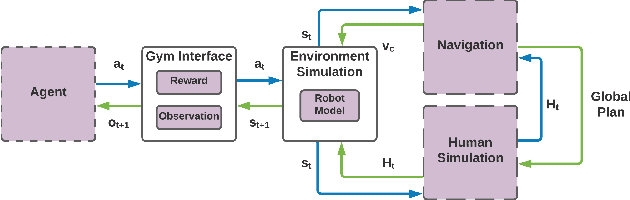

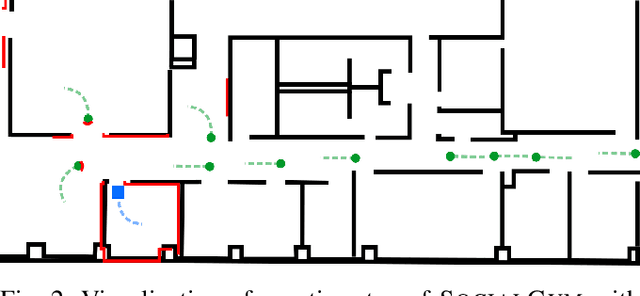

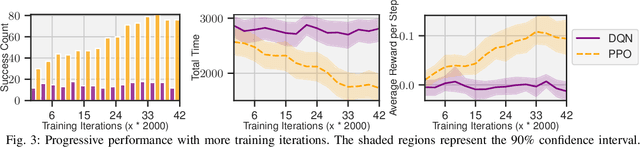

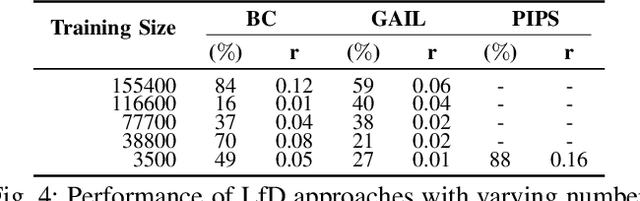

Safe, socially compliant robot navigation in dynamic human environments is an essential benchmark for long-term robot autonomy. However, it is not feasible to learn and benchmark social navigation behaviors entirely in the real world: learning is data-intensive, and it is difficult to guarantee safety during training. Simulation-based benchmarks that provide abstractions for social navigation are therefore required. A framework for these benchmarks needs to support a wide variety of learning approaches, be extensible to a broad range of social navigation scenarios, and abstract away the perception problem to focus explicitly on social navigation. While many solutions have been proposed, including high-fidelity 3D simulators and grid-world approximations, no existing solution satisfies all of these properties for learning and evaluating social navigation behaviors. In this work, we propose SOCIALGYM, a lightweight 2D simulation environment for robot social navigation designed with extensibility in mind, and a benchmark scenario built on SOCIALGYM. Further, we present benchmark results that compare human-engineered and model-based learning approaches with a suite of off-the-shelf Learning from Demonstration (LfD) and Reinforcement Learning (RL) approaches applied to social robot navigation. These results demonstrate the data efficiency, task performance, social compliance, and environment transfer capabilities of each evaluated policy, providing a solid grounding for future social navigation research.
