Smooth-Swap: A Simple Enhancement for Face-Swapping with Smoothness


Dec 11, 2021

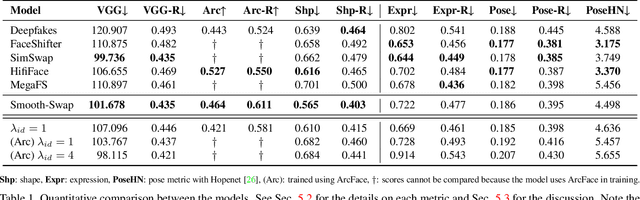

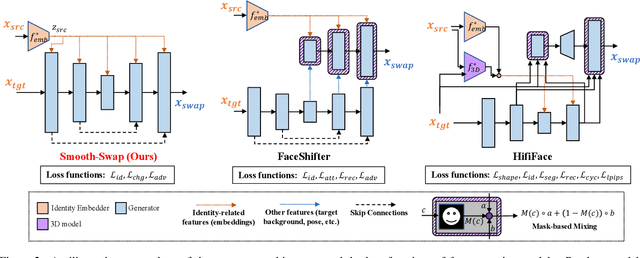

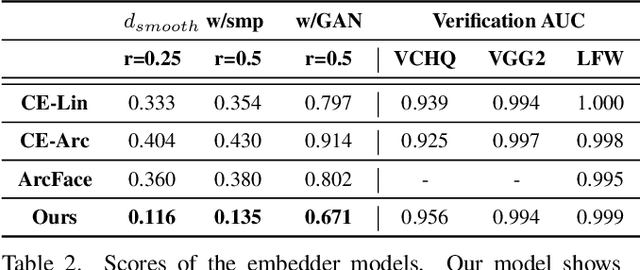

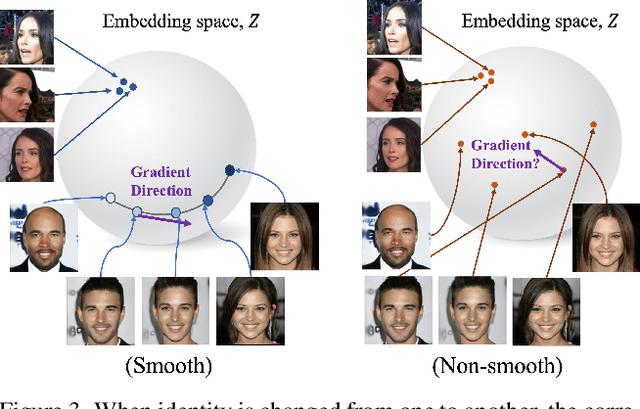

In recent years, face-swapping models have progressed in generation quality and drawn attention for their applications in privacy protection and entertainment. However, their complex architectures and loss functions often require careful tuning for successful training. In this paper, we propose a new face-swapping model called "Smooth-Swap", which focuses on deriving the smoothness of the identity embedding instead of employing complex handcrafted designs. We postulate that the core difficulty in face-swapping is unstable gradients, and that a smooth identity embedder can resolve it. Smooth-Swap adopts an embedder trained using supervised contrastive learning, and we find that its improved smoothness allows faster and more stable training, even with a simple U-Net-based generator and three basic loss functions. Extensive experiments on face-swapping benchmarks (FFHQ, FaceForensics++) and face images in the wild show that our model is also quantitatively and qualitatively comparable or even superior to existing methods in terms of identity change.
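As a rough illustration of the supervised contrastive objective mentioned in the abstract, the sketch below computes a generic supervised contrastive loss over a batch of identity embeddings with NumPy. It is not the paper's implementation: the function name `supervised_contrastive_loss`, the `temperature` value, and the toy embeddings are assumptions made for illustration, and the actual embedder training may differ in its details.

```python
import numpy as np


def supervised_contrastive_loss(embeddings, labels, temperature=0.1):
    """Generic supervised contrastive loss (illustrative sketch only).

    embeddings: (n, d) array of identity embeddings, n >= 2.
    labels:     (n,) array of identity labels.
    Samples that share a label are treated as positives for each other.
    """
    z = np.asarray(embeddings, dtype=np.float64)
    labels = np.asarray(labels)

    # Project embeddings onto the unit sphere so dot products are cosine similarities.
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    n = z.shape[0]

    # Pairwise similarities scaled by the temperature; self-pairs are excluded.
    not_self = ~np.eye(n, dtype=bool)
    logits = np.where(not_self, z @ z.T / temperature, -np.inf)

    # Numerically stable log-softmax over all other samples in the batch.
    row_max = logits.max(axis=1, keepdims=True)
    log_denominator = row_max + np.log(
        np.exp(logits - row_max).sum(axis=1, keepdims=True)
    )
    log_prob = logits - log_denominator

    # Positives: same identity label, excluding the anchor itself.
    positives = (labels[:, None] == labels[None, :]) & not_self
    n_pos = positives.sum(axis=1)
    has_pos = n_pos > 0
    if not has_pos.any():
        return 0.0  # no anchor has a positive in this batch

    # Average log-probability of the positives, per anchor, then over anchors.
    sum_log_prob = np.where(positives, log_prob, 0.0).sum(axis=1)
    per_anchor = -sum_log_prob[has_pos] / n_pos[has_pos]
    return float(per_anchor.mean())


# Toy batch: two identities, each with two nearby embeddings (made-up values).
toy_embeddings = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
loss_matching = supervised_contrastive_loss(toy_embeddings, [0, 0, 1, 1])
loss_mismatched = supervised_contrastive_loss(toy_embeddings, [0, 1, 0, 1])
```

In this toy batch the loss is lower when the labels agree with the geometric clusters than when they cut across them. Minimizing it therefore pulls same-identity embeddings together and pushes different identities apart.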
