Slow Learners are Fast

Paper and Code

Nov 03, 2009

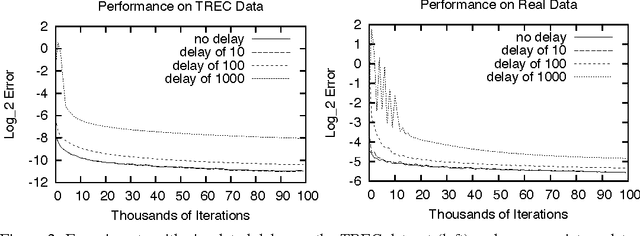

Online learning algorithms have impressive convergence properties when it comes to risk minimization and convex games on very large problems. However, they are inherently sequential in their design, which prevents them from taking advantage of modern multi-core architectures. In this paper we prove that online learning with delayed updates converges well, thereby facilitating parallel online learning.

* Extended version of the NIPS 2009 conference paper
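To make "delayed updates" concrete, here is a minimal sketch, not the paper's code. It runs online gradient descent on a squared loss, but the gradient computed at step t is only applied at step t + τ. This mimics parallel workers that compute gradients on slightly stale parameters, so the update becomes w_{t+1} = w_t − η_t g_{t−τ}. The names `delayed_sgd` and `make_data`, the squared loss, the noise-free synthetic data, and the η_0/√t step size are all illustrative assumptions. The paper's analysis is more general and lets the step size depend on the delay.

```python
import random
from collections import deque


def make_data(n, w_true, seed=0):
    """Hypothetical helper: noise-free linear data y = w_true . x, x ~ U[-1, 1]^d."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = [rng.uniform(-1.0, 1.0) for _ in w_true]
        y = sum(wi * xi for wi, xi in zip(w_true, x))
        data.append((x, y))
    return data


def delayed_sgd(samples, dim, tau, eta0=0.2):
    """Online gradient descent on the squared loss 0.5 * (w . x - y)^2 where
    the gradient computed at step t is applied at step t + tau.

    This serially simulates the staleness that parallel workers introduce;
    it is an illustrative sketch, not the paper's implementation.
    """
    w = [0.0] * dim
    pending = deque()  # gradients computed but not yet applied (FIFO)
    for t, (x, y) in enumerate(samples, start=1):
        # Gradient of the loss at the current parameters w_t.
        err = sum(wi * xi for wi, xi in zip(w, x)) - y
        pending.append([err * xi for xi in x])
        # Once tau newer gradients are queued, apply the oldest one:
        # w_{t+1} = w_t - eta_t * g_{t - tau}.
        if len(pending) > tau:
            g = pending.popleft()
            eta = eta0 / t ** 0.5  # assumed 1/sqrt(t) schedule
            w = [wi - eta * gi for wi, gi in zip(w, g)]
    # Gradients still queued at the end are never applied.
    return w


if __name__ == "__main__":
    w_true = [2.0, -1.0]
    data = make_data(5000, w_true)
    print("no delay:   ", delayed_sgd(data, dim=2, tau=0))
    print("delay tau=10:", delayed_sgd(data, dim=2, tau=10))
```

With τ = 0 this reduces to ordinary sequential online gradient descent. In a real multi-core setting τ would roughly correspond to how many updates are in flight at once. The FIFO queue is just a convenient way to reproduce that staleness deterministically on one core.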
