SinReQ: Generalized Sinusoidal Regularization for Automatic Low-Bitwidth Deep Quantized Training


May 04, 2019

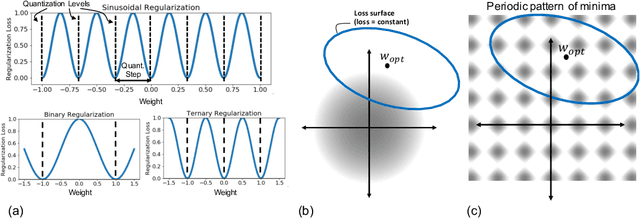

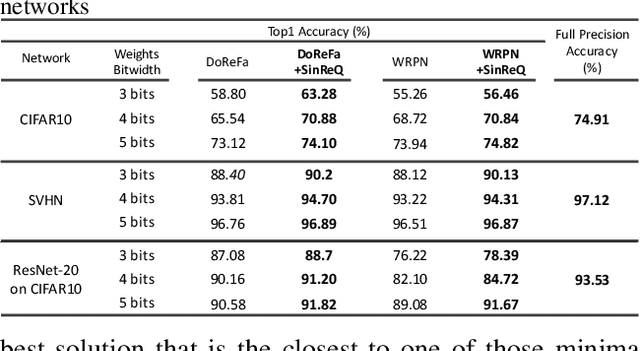

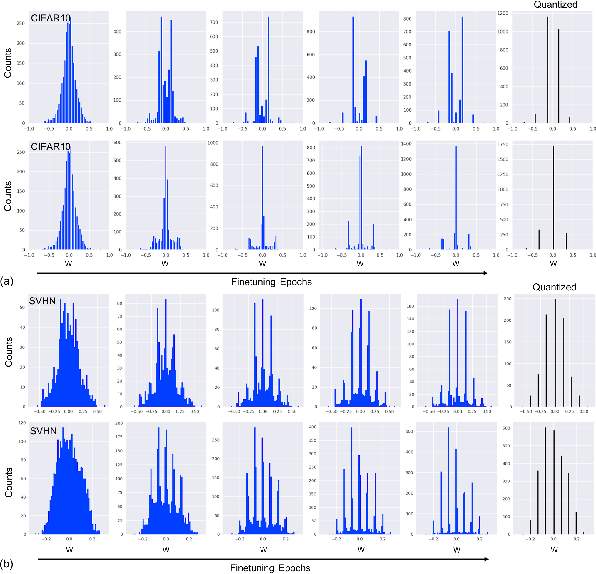

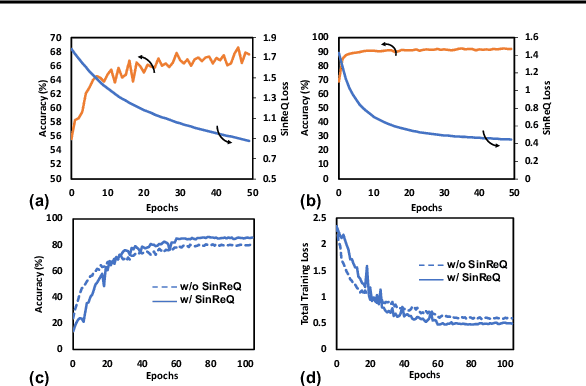

Quantization of neural networks offers significant promise in reducing their compute and storage cost. Albeit alluring, deep quantization (below 8 bits) results in an unrecoverable accuracy gap between the quantized model and its full-precision counterpart unless domain experts devise special handcrafted optimization techniques or ad-hoc manipulations of the original network architecture. We propose a novel sinusoidal regularization, dubbed SinReQ, for low-precision deep quantized training. The proposed method aims to automatically yield semi-quantized weights at pre-defined target bitwidths during conventional training. The regularization is realized by adding a periodic function (the sinusoidal regularizer) to the original objective function. We exploit the inherent periodicity and desirable convexity profile of sinusoidal functions to automatically propel weights towards the target quantization levels during conventional training. Our method combines generality, by providing the flexibility for arbitrary-bit quantization, with customization, by optimizing different layer-wise regularizers simultaneously. Preliminary experiments on CIFAR10 and SVHN show that integrating SinReQ into the training algorithm yields accuracy improvements of 2.82% and 2.11% for DoReFa (Zhou et al., 2016) and WRPN (Mishra et al., 2018), respectively.
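The abstract describes the regularizer only at a high level. As a minimal sketch, assuming weights lie in [0, 1] and a DoReFa-style uniform grid with 2^k − 1 steps for k bits, a penalty of the form λ·sin²(π·w·(2^k − 1)) vanishes exactly at the grid points and peaks midway between them, so adding its gradient to the task gradient pulls each weight toward its nearest level. The names `sinreq_penalty`, `sinreq_grad`, `quantize`, and `train`, the toy quadratic task loss, and the values of λ, the learning rate, and the step count are hypothetical illustrations, not the paper's code; the paper's exact regularizer, scaling, and schedule may differ.

```python
import math

# Hypothetical sketch, not the authors' implementation.
# Assumed grid: {0, 1/L, 2/L, ..., 1} with L = 2**bits - 1 (DoReFa-style).

def sinreq_penalty(w: float, bits: int) -> float:
    # sin^2(pi * w * L) is zero exactly on the assumed grid and 1 midway between levels.
    levels = 2 ** bits - 1
    return math.sin(math.pi * w * levels) ** 2

def sinreq_grad(w: float, bits: int) -> float:
    # d/dw sin^2(a*w) = a * sin(2*a*w), with a = pi * L.
    a = math.pi * (2 ** bits - 1)
    return a * math.sin(2 * a * w)

def quantize(w: float, bits: int) -> float:
    # Nearest grid level; used only to check how close training got.
    levels = 2 ** bits - 1
    return round(w * levels) / levels

def train(layers, targets, lam=1.0, lr=1e-3, steps=500):
    """Plain gradient descent on task_loss + lam * sum of per-layer regularizers.

    layers:  {name: (list_of_weights, bits)}; each layer has its own bitwidth.
    targets: {name: list_of_targets} for a toy task loss 0.5 * (w - t)**2.
    """
    for _ in range(steps):
        for name, (ws, bits) in layers.items():
            for i, (w, t) in enumerate(zip(ws, targets[name])):
                # Task-loss gradient plus the layer-specific regularizer gradient.
                g = (w - t) + lam * sinreq_grad(w, bits)
                ws[i] = w - lr * g
    return layers

# Usage: a 2-bit layer and a 3-bit layer trained side by side (hypothetical values).
layers = {"conv1": ([0.30, 0.62], 2), "fc": ([0.30], 3)}
targets = {"conv1": [0.30, 0.62], "fc": [0.30]}
train(layers, targets)
for name, (ws, bits) in layers.items():
    print(name, bits, [round(w, 4) for w in ws], [round(quantize(w, bits), 4) for w in ws])
```

Because each layer carries its own `bits`, the same loop applies a regularizer with a different period per layer, mirroring the layer-wise customization the abstract mentions. λ trades the task loss against proximity to the grid, so with λ = 1 the weights settle close to, but not exactly on, the levels; this matches the "semi-quantized" wording, with a final rounding step assumed to finish the job.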
