Simultaneous Learning of the Inputs and Parameters in Neural Collaborative Filtering


Mar 14, 2022

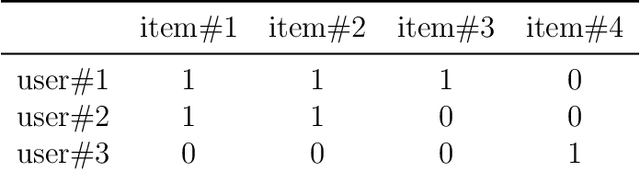

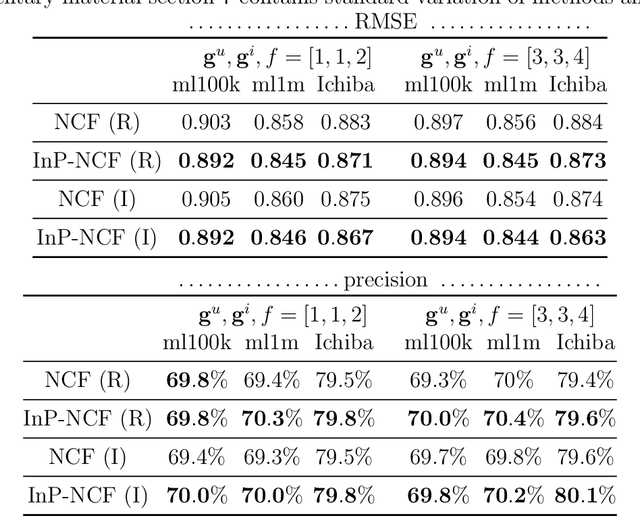

Neural network-based collaborative filtering systems focus on designing network architectures that learn better representations, while keeping the input fixed to the user/item interaction vectors and/or IDs. In this paper, we first show that the non-zero elements of the inputs are learnable parameters that determine the weights used to combine the user/item embeddings, and that fixing them limits the models' capacity to learn representations. We then propose to learn the values of the non-zero input elements jointly with the neural network parameters. We analyze the model complexity and the empirical risk of our approach and prove that learning the input leads to a better generalization bound. Our experiments on several real-world datasets show that our method outperforms state-of-the-art methods, even when using shallow network structures with fewer layers and parameters.
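
To illustrate the central observation, the following is a minimal NumPy sketch, not the authors' implementation. It assumes a single linear first layer whose rows act as item embeddings, a toy squared-error loss against a random target, and plain gradient descent; all names (`W`, `nonzero_idx`, `values`, `first_layer`) are hypothetical. It shows that a binary interaction vector amounts to summing the selected embeddings with weight 1, and that treating the non-zero entries as parameters lets them be updated together with the layer weights.

```python
import numpy as np

rng = np.random.default_rng(0)
n_items, dim = 6, 3

# Hypothetical first-layer weights: row j plays the role of item j's embedding.
W = rng.normal(size=(n_items, dim))

# Non-zero positions of one user's interaction vector (items the user touched).
nonzero_idx = np.array([0, 2, 5])


def first_layer(values, W, idx):
    """Combine the embeddings at idx, weighted by the non-zero input values."""
    return values @ W[idx]


# Fixed binary input: every interacted item is combined with weight 1.
fixed_values = np.ones(len(nonzero_idx))
h_fixed = first_layer(fixed_values, W, nonzero_idx)

# The same result from the dense multi-hot vector, for comparison.
x_dense = np.zeros(n_items)
x_dense[nonzero_idx] = 1.0
h_dense = x_dense @ W

# Toy objective (an assumption for illustration): match a random target vector.
target = rng.normal(size=dim)


def loss(values, W):
    diff = first_layer(values, W, nonzero_idx) - target
    return 0.5 * float(diff @ diff)


# Learnable input: start from the binary values and update them jointly with W.
values = fixed_values.copy()
initial_loss = loss(values, W)
lr = 0.01
for _ in range(500):
    err = first_layer(values, W, nonzero_idx) - target  # dLoss / d(hidden)
    grad_values = W[nonzero_idx] @ err                  # gradient w.r.t. input values
    grad_W = np.outer(values, err)                      # gradient w.r.t. selected rows
    values -= lr * grad_values
    W[nonzero_idx] -= lr * grad_W
final_loss = loss(values, W)

print("learned input values:", values)
print("loss before/after:", initial_loss, final_loss)
```

In this sketch the learned `values` generally drift away from 1, so the interacted items no longer contribute equally to the user representation, which is the extra degree of freedom the abstract describes.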
