Simulating Liquids with Graph Networks


Mar 14, 2022

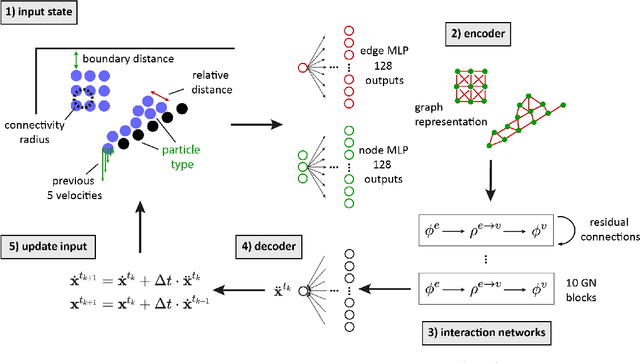

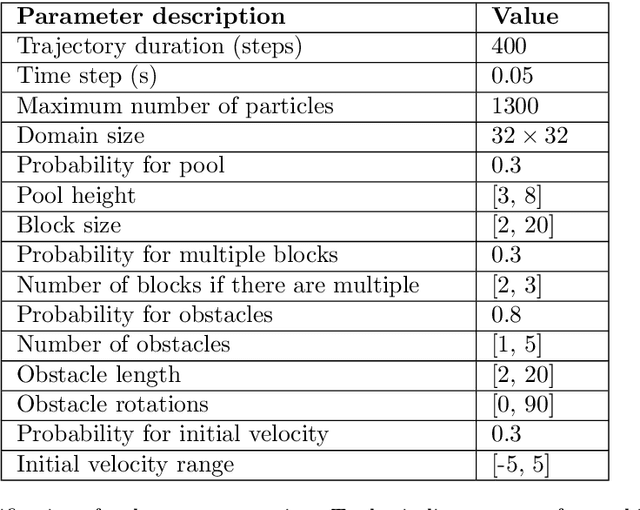

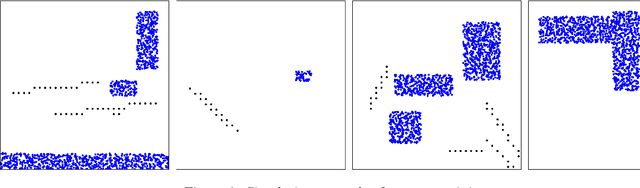

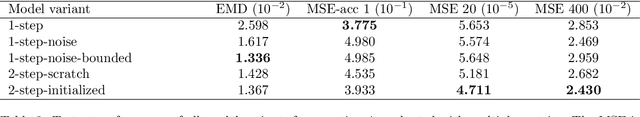

Simulating complex dynamics such as fluids with traditional simulators is computationally challenging. Deep learning models have been proposed as an efficient alternative that extends or replaces parts of traditional simulators. We investigate graph neural networks (GNNs) for learning fluid dynamics and find that their generalization capability is more limited than previous work suggests. We also challenge the current practice of adding random noise to the network inputs to improve generalization and simulation stability. We find that exposing the model to the real data distribution, e.g. by unrolling multiple simulation steps, improves accuracy, and that hiding all domain-specific features from the learning model improves generalization. Our results indicate that learned models such as GNNs fail to learn the exact underlying dynamics unless the training set is devoid of any other problem-specific correlations that could be used as shortcuts.
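To make the contrast between the two training strategies concrete, here is a minimal sketch on a toy problem. It is not the paper's GNN or its dataset: the scalar decay dynamics in `true_step`, the one-parameter `model_step`, and both loss functions are hypothetical stand-ins chosen only to show where the training inputs come from in each case.

```python
import random


def true_step(x, dt=0.1):
    # Toy ground-truth dynamics (assumption): one explicit Euler step of x' = -x.
    return x - dt * x


def model_step(x, w):
    # Hypothetical one-parameter learned simulator: x_next = w * x.
    return w * x


def noisy_one_step_loss(x0, w, noise_std, rng):
    # Noise injection: corrupt the input with Gaussian noise, then compare the
    # one-step prediction against the clean ground-truth next state.
    x_noisy = x0 + rng.gauss(0.0, noise_std)
    return (model_step(x_noisy, w) - true_step(x0)) ** 2


def unrolled_loss(x0, w, num_steps):
    # Multi-step unrolling: feed the model its own predictions, so the inputs
    # it sees come from its own rollout rather than from synthetic noise.
    pred, target, total = x0, x0, 0.0
    for _ in range(num_steps):
        pred = model_step(pred, w)
        target = true_step(target)
        total += (pred - target) ** 2
    return total / num_steps


rng = random.Random(0)
print(noisy_one_step_loss(1.0, 0.9, 0.05, rng))
print(unrolled_loss(1.0, 0.8, 5))
```

In the noise-injection loss the perturbation is drawn from a fixed Gaussian, whereas in the unrolled loss the deviation from the ground-truth trajectory is whatever error the model itself accumulates over `num_steps`.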
