Simple and Near-Optimal MAP Inference for Nonsymmetric DPPs

Paper and Code

Feb 10, 2021

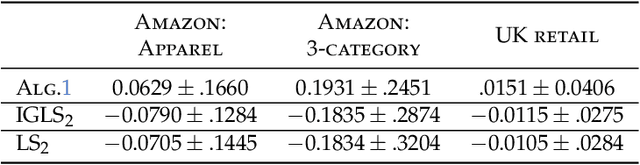

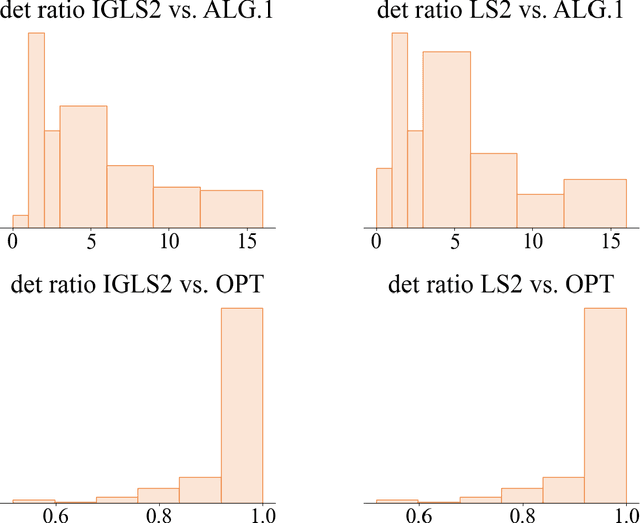

Determinantal point processes (DPPs) are widely popular probabilistic models used in machine learning to capture diversity in random subsets of items. While traditional DPPs are defined by a symmetric kernel matrix, recent work has shown a significant increase in the modeling power and applicability of models defined by nonsymmetric kernels, where the model can capture interactions that go beyond diversity. We study the problem of maximum a posteriori (MAP) inference for determinantal point processes defined by a nonsymmetric positive semidefinite matrix (NDPPs), where the goal is to find the maximum $k\times k$ principal minor of the kernel matrix $L$. We obtain the first multiplicative approximation guarantee for this problem using local search, a method that has been previously applied to symmetric DPPs. Our approximation factor of $k^{O(k)}$ is nearly tight, and we show theoretically and experimentally that it compares favorably to the state-of-the-art methods for this problem that are based on greedy maximization. The main new insight enabling our improved approximation factor is that we allow local search to update up to two elements of the solution in each iteration, and we show this is necessary to have any multiplicative approximation guarantee.
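To make the local search idea concrete, here is a minimal sketch in Python with NumPy. It is not the authors' implementation: the function names (`principal_minor`, `local_search_map`), the first-improvement rule, the default starting set, and the tolerance are all assumptions chosen for illustration. The objective is $\max_{|S|=k} \det(L_S)$, and each iteration tries to exchange one or two elements of the current set $S$ for elements outside it, accepting the first exchange that increases the determinant. The toy kernel at the end is also an assumption, built so that no single-element exchange improves the starting set while a two-element exchange does.

```python
import itertools
import numpy as np


def principal_minor(L, S):
    """Determinant of the principal submatrix of L indexed by the set S."""
    idx = sorted(S)
    return float(np.linalg.det(L[np.ix_(idx, idx)]))


def _first_improving_neighbor(L, S, best, max_swap, tol):
    """Return (T, det(L_T)) for the first neighbor T that beats `best`, else None."""
    n = L.shape[0]
    outside = [i for i in range(n) if i not in S]
    for r in range(1, max_swap + 1):  # exchange r elements at a time
        for out in itertools.combinations(sorted(S), r):
            for inn in itertools.combinations(outside, r):
                T = (S - set(out)) | set(inn)
                val = principal_minor(L, T)
                if val > best + tol:
                    return T, val
    return None


def local_search_map(L, k, init=None, max_swap=2, tol=1e-9, max_iters=1000):
    """Local search for a size-k subset with a large principal minor.

    Hypothetical sketch: starts from `init` (default: the first k indices)
    and repeatedly applies the first improving exchange of up to `max_swap`
    elements until none exists or `max_iters` is reached.
    """
    S = set(range(k)) if init is None else set(init)
    best = principal_minor(L, S)
    for _ in range(max_iters):
        step = _first_improving_neighbor(L, S, best, max_swap, tol)
        if step is None:
            break  # S is a local optimum for this neighborhood
        S, best = step
    return sorted(S), best


# Toy nonsymmetric kernel (illustrative assumption): two skew-symmetric
# 2x2 blocks, so L + L^T = 0 is positive semidefinite.
L_toy = np.array([
    [0.0, 1.0, 0.0, 0.0],
    [-1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 2.0],
    [0.0, 0.0, -2.0, 0.0],
])

single = local_search_map(L_toy, k=2, max_swap=1)  # stays at [0, 1]
double = local_search_map(L_toy, k=2, max_swap=2)  # moves to [2, 3]
```

In this toy kernel, every set that mixes the two blocks has determinant 0, so single-element exchanges cannot leave the starting set `[0, 1]` (determinant 1), whereas one two-element exchange reaches `[2, 3]` (determinant 4). Scaling the second block up makes the gap arbitrarily large, which is consistent with the abstract's claim that two-element updates are needed for a multiplicative guarantee. The exhaustive neighborhood scan here recomputes each determinant from scratch and is meant for clarity, not efficiency.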
