Sign language segmentation with temporal convolutional networks

Nov 25, 2020

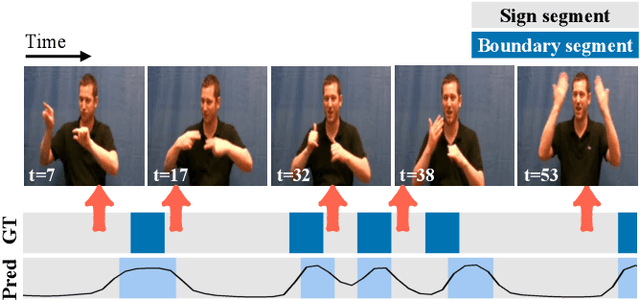

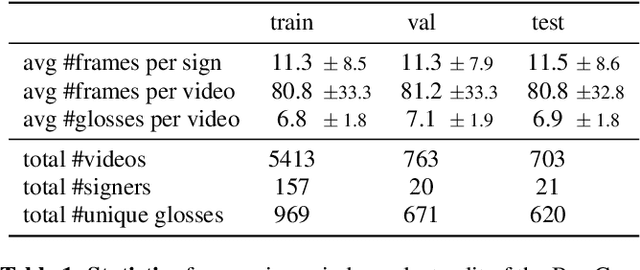

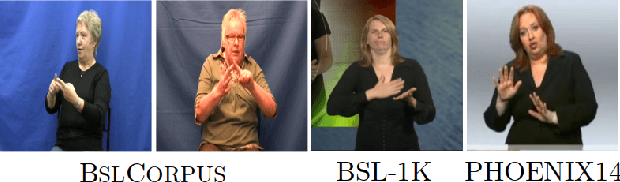

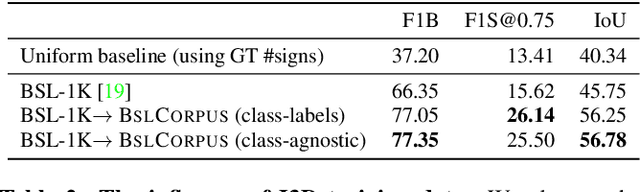

The objective of this work is to determine the location of temporal boundaries between signs in continuous sign language videos. Our approach employs 3D convolutional neural network representations with iterative temporal segment refinement to resolve ambiguities between sign boundary cues. We demonstrate the effectiveness of our approach on the BSLCORPUS, PHOENIX14 and BSL-1K datasets, showing considerable improvement over the prior state of the art and the ability to generalise to new signers, languages and domains.
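To make the task concrete, here is a minimal sketch of how boundary locations could be read off once a model has produced one boundary score per frame. It is not the method described above, which relies on 3D convolutional features and iterative refinement. The per-frame scores, the 0.5 threshold, and the function names `boundary_runs` and `sign_segments` are all assumptions made for illustration.

```python
from typing import List, Tuple

Span = Tuple[int, int]  # (first_frame, last_frame), both inclusive


def boundary_runs(scores: List[float], threshold: float = 0.5) -> List[Span]:
    """Group consecutive frames whose score is >= threshold into boundary runs.

    `scores` is a hypothetical per-frame boundary score in [0, 1];
    the threshold value is an assumption, not taken from the paper.
    """
    runs: List[Span] = []
    start = None
    for i, score in enumerate(scores):
        if score >= threshold:
            if start is None:
                start = i  # a new boundary run begins here
        elif start is not None:
            runs.append((start, i - 1))  # the run ended on the previous frame
            start = None
    if start is not None:
        runs.append((start, len(scores) - 1))  # run reaches the last frame
    return runs


def sign_segments(num_frames: int, runs: List[Span]) -> List[Span]:
    """Treat the frames lying between boundary runs as candidate sign segments."""
    segments: List[Span] = []
    cursor = 0  # first frame not yet assigned to a boundary or a segment
    for run_start, run_end in runs:
        if run_start > cursor:
            segments.append((cursor, run_start - 1))
        cursor = run_end + 1
    if cursor < num_frames:
        segments.append((cursor, num_frames - 1))
    return segments


# Illustrative scores for an 8-frame clip (made-up values).
example_scores = [0.1, 0.2, 0.9, 0.8, 0.1, 0.1, 0.7, 0.2]
example_runs = boundary_runs(example_scores)
example_segments = sign_segments(len(example_scores), example_runs)
```

With these made-up scores, frames 2 to 3 and frame 6 form the boundary runs, and the remaining frames fall into three candidate sign segments.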
