Shape Bias and Robustness Evaluation via Cue Decomposition for Image Classification and Segmentation

Paper and Code

Mar 16, 2025

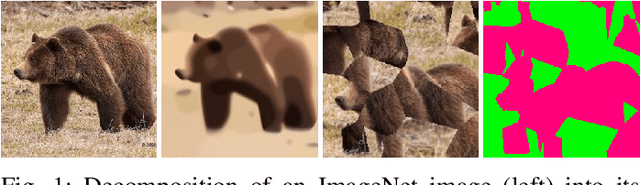

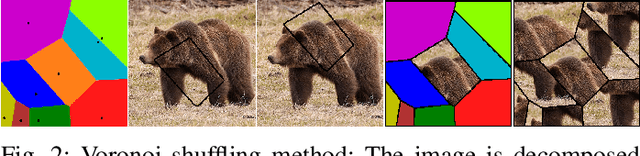

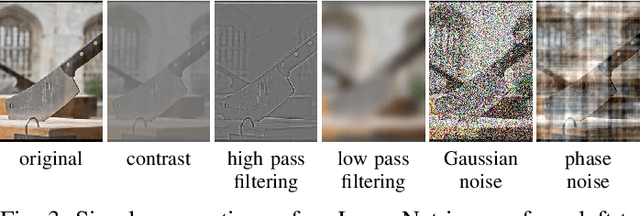

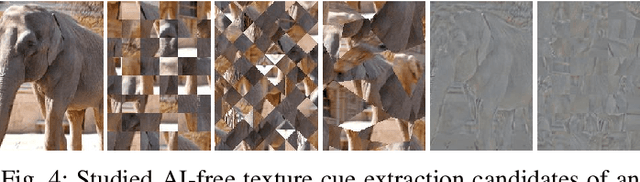

Previous work has studied how deep neural networks (DNNs) perceive image content in terms of their biases towards different image cues, such as texture and shape. Existing methods for measuring shape and texture biases are typically based on style transfer and limited to DNNs for image classification. In this work, we provide a new evaluation procedure consisting of 1) a cue-decomposition method comprising two AI-free data pre-processing methods that extract shape and texture cues, respectively, and 2) a novel cue-decomposition shape bias evaluation metric that leverages the cue-decomposition data. For application purposes, we introduce a corresponding cue-decomposition robustness metric that allows the robustness of a DNN w.r.t. image corruptions to be estimated. In our numerical experiments, our findings on biases in image classification DNNs align with those of previous evaluation metrics. However, our cue-decomposition robustness metric shows superior results in terms of estimating the robustness of DNNs. Furthermore, our results for DNNs on the semantic segmentation datasets Cityscapes and ADE20K shed light, for the first time, on the biases of semantic segmentation DNNs.
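The abstract does not state the formula behind the cue-decomposition shape bias metric. As a purely illustrative sketch, and not the authors' definition, one simple way to turn two per-cue scores into a single bias value is a normalized ratio: the score obtained on shape-only data divided by the sum of the scores on shape-only and texture-only data. The function name `cue_shape_bias` and both parameters are hypothetical; the scores could be, for example, top-1 accuracy for classification or mIoU for segmentation.

```python
def cue_shape_bias(shape_score: float, texture_score: float) -> float:
    """Hypothetical shape-bias ratio in [0, 1].

    shape_score:   model performance on shape-only inputs (assumed >= 0)
    texture_score: model performance on texture-only inputs (assumed >= 0)

    This normalized ratio is an assumption made for illustration; it is
    not taken from the paper.
    """
    if shape_score < 0 or texture_score < 0:
        raise ValueError("scores must be non-negative")
    total = shape_score + texture_score
    if total == 0:
        # Neither cue yields any performance, so the ratio is undefined.
        raise ValueError("at least one score must be positive")
    return shape_score / total


if __name__ == "__main__":
    # Made-up scores, only to show the call; they are not results from the paper.
    print(cue_shape_bias(shape_score=0.6, texture_score=0.2))
```

Under this assumed form, a value above 0.5 would indicate that the model relies more on shape cues than on texture cues, and a value below 0.5 the opposite.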
