Semi-Synchronous Federated Learning

Paper and Code

Feb 04, 2021

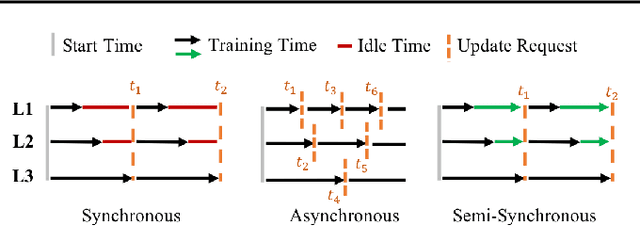

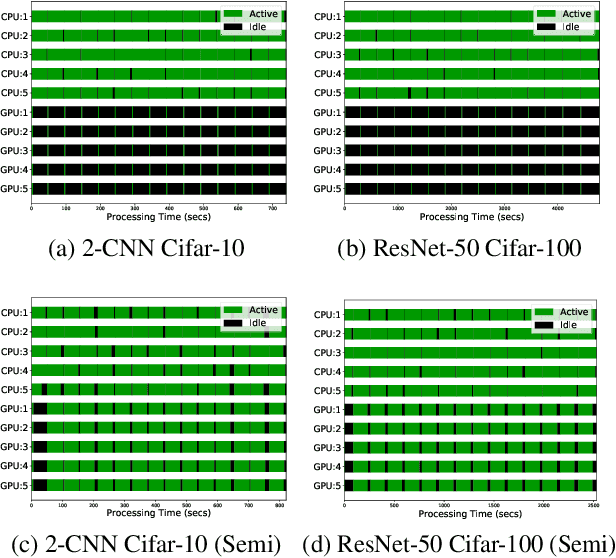

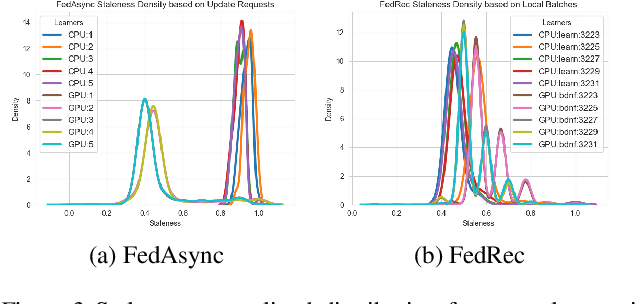

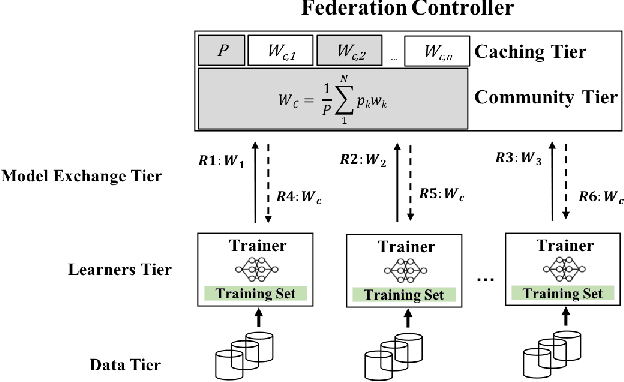

There are situations where data relevant to a machine learning problem are distributed across multiple locations that cannot share the data for regulatory, competitive, or privacy reasons. Examples include data stored on users' cellphones, manufacturing data held by companies in a given industrial sector, and medical records located at different hospitals. Federated Learning (FL) provides an approach to learning a joint model over all the available data across silos. In many cases, participating sites have different data distributions and computational capabilities. In these heterogeneous environments, previous approaches exhibit poor performance: synchronous FL protocols are communication efficient but converge slowly; conversely, asynchronous FL protocols converge faster but at a higher communication cost. Here we introduce a novel Semi-Synchronous Federated Learning protocol that mixes local models periodically with minimal idle time and fast convergence. We show through extensive experiments that our approach significantly outperforms previous work in data and computationally heterogeneous environments.
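To make the idea of periodic mixing with minimal idle time concrete, the following is a minimal toy sketch and not the paper's implementation. It assumes that every learner trains for the same wall-clock budget per round, so faster learners simply take more local steps instead of waiting, and that the server mixes the local models with a sample-weighted average. The abstract does not specify the actual mixing rule or schedule; the names `Learner`, `semi_sync_round`, and `run`, the scalar model, and the quadratic local loss are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Learner:
    """Toy learner; all fields are illustrative assumptions."""
    target: float            # minimizer of the local loss (stands in for local data)
    num_samples: int         # local dataset size, used as the mixing weight
    steps_per_second: float  # computational speed of this site

    def train(self, w: float, time_budget: float, lr: float) -> float:
        # Every learner trains for the same wall-clock budget, so a fast
        # learner takes more local steps rather than idling.
        steps = int(time_budget * self.steps_per_second)
        for _ in range(steps):
            grad = 2.0 * (w - self.target)  # gradient of (w - target)^2
            w -= lr * grad
        return w


def semi_sync_round(global_w: float, learners: List[Learner],
                    time_budget: float, lr: float = 0.1) -> float:
    """One round: local training for a fixed time, then weighted mixing."""
    local_models = [l.train(global_w, time_budget, lr) for l in learners]
    total = sum(l.num_samples for l in learners)
    # Assumed mixing rule: average weighted by local sample count.
    return sum(l.num_samples * w for l, w in zip(learners, local_models)) / total


def run(learners: List[Learner], rounds: int, time_budget: float,
        w0: float = 0.0) -> float:
    """Repeat semi-synchronous rounds starting from the model w0."""
    w = w0
    for _ in range(rounds):
        w = semi_sync_round(w, learners, time_budget)
    return w


if __name__ == "__main__":
    # Two sites with different data (targets) and different speeds.
    sites = [Learner(target=1.0, num_samples=100, steps_per_second=10.0),
             Learner(target=3.0, num_samples=300, steps_per_second=2.0)]
    print(run(sites, rounds=50, time_budget=1.0))
```

In this sketch the time budget is the only synchronization point: a fully synchronous scheme would instead fix the number of local steps and leave the fast site idle, while a fully asynchronous one would send an update after every local step.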
