Semi-Supervised Learning via Swapped Prediction for Communication Signal Recognition

Paper and Code

Nov 14, 2023

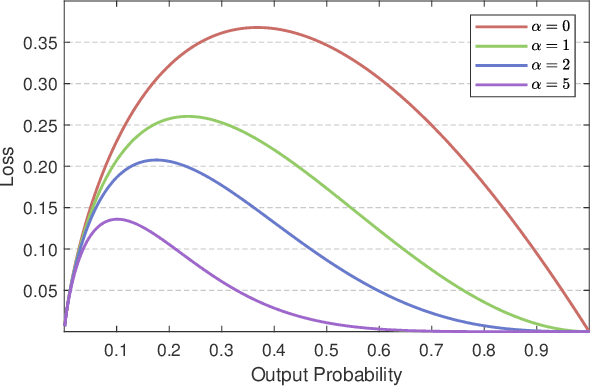

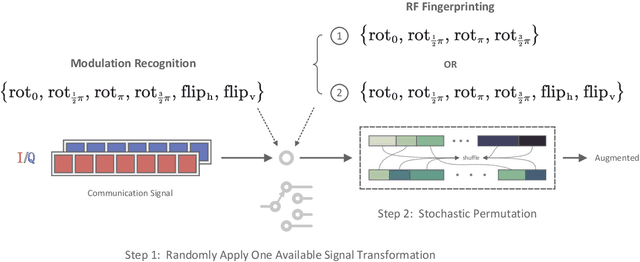

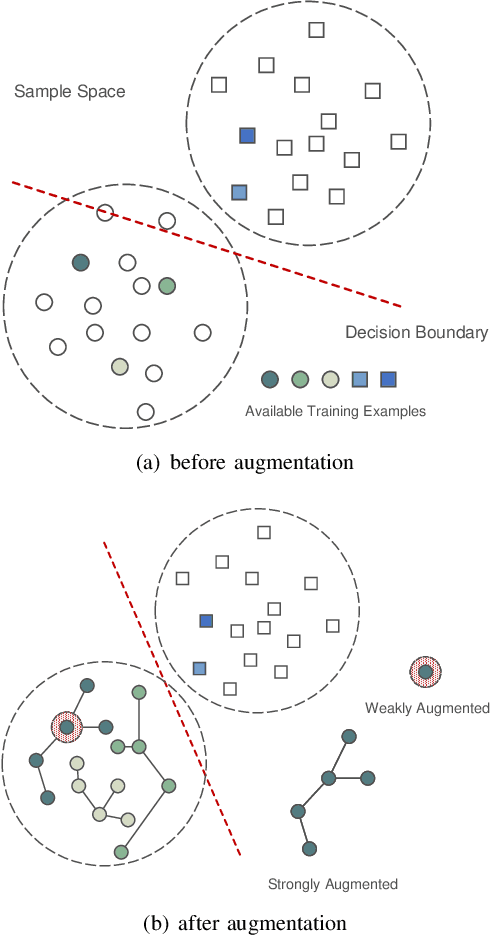

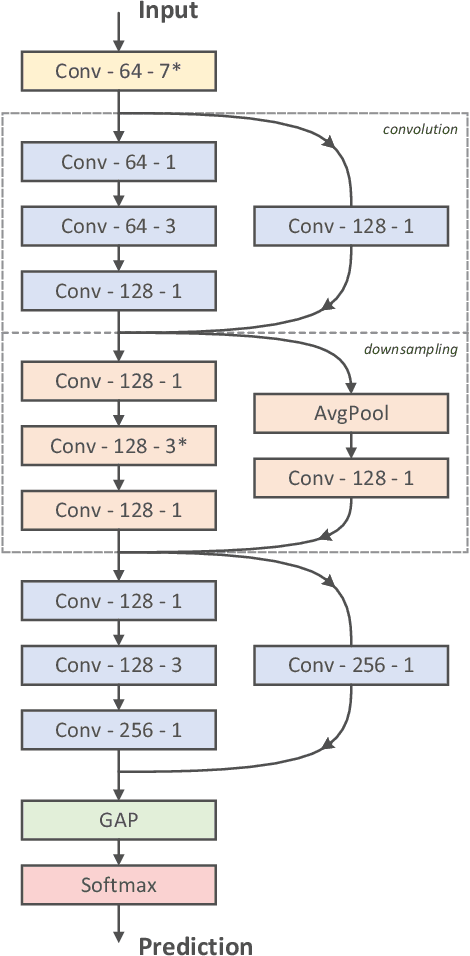

Deep neural networks have been widely used in communication signal recognition and have achieved remarkable performance. This performance, however, typically depends on massive numbers of labeled examples for supervised learning; training a deep neural network on a small dataset with few labels generally leads to overfitting and degraded performance. To address this, we develop a semi-supervised learning (SSL) method that uses a large collection of more readily available unlabeled signal data to improve generalization. The proposed method relies largely on a novel implementation of consistency-based regularization, termed Swapped Prediction, which applies strong data augmentation to perturb an unlabeled sample and then encourages the model's prediction for the perturbed sample to stay close to its prediction for the original sample. The consistency term is optimized with a scaled cross-entropy loss with swapped symmetry. Extensive experiments indicate that our proposed method achieves promising results for deep SSL of communication signal recognition.
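The abstract does not give the exact form of the loss, so the following is only a minimal sketch of one plausible reading of "scaled cross-entropy loss with swapped symmetry", not the authors' implementation. It assumes the model produces class logits for the original and the strongly augmented view of one unlabeled signal, that each view's temperature-sharpened prediction serves as the target for the other view, and that the two cross-entropy terms are averaged and multiplied by a constant. The names `swapped_prediction_loss`, `temperature`, and `scale` are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target, pred, eps=1e-12):
    """Cross-entropy H(target, pred) between two probability vectors."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, pred))


def swapped_prediction_loss(logits_orig, logits_aug, temperature=0.5, scale=1.0):
    """Symmetric consistency loss between two views of one unlabeled sample.

    Assumption (not stated in the abstract): each view's sharpened
    prediction is the target for the other view's prediction. In a real
    training loop the targets would be treated as constants (no gradient).
    """
    p_orig = softmax(logits_orig)              # prediction on the original sample
    p_aug = softmax(logits_aug)                # prediction on the augmented sample
    t_orig = softmax(logits_orig, temperature)  # sharpened target from the original
    t_aug = softmax(logits_aug, temperature)    # sharpened target from the augmented view
    swapped = cross_entropy(t_orig, p_aug) + cross_entropy(t_aug, p_orig)
    return scale * 0.5 * swapped


if __name__ == "__main__":
    original = [2.0, 0.5, -1.0]      # hypothetical logits, 3 signal classes
    consistent = [1.8, 0.4, -0.9]    # augmented view that agrees with the original
    inconsistent = [-1.0, 0.5, 2.0]  # augmented view that disagrees
    print(swapped_prediction_loss(original, consistent))
    print(swapped_prediction_loss(original, inconsistent))
```

Under these assumptions the loss is unchanged when the two views are exchanged, and it is smaller when the predictions for the two views agree, which is the behavior a consistency regularizer is meant to reward.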
