Semi-supervised Image Attribute Editing using Generative Adversarial Networks

Jul 03, 2019

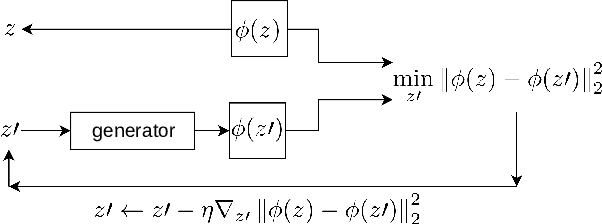

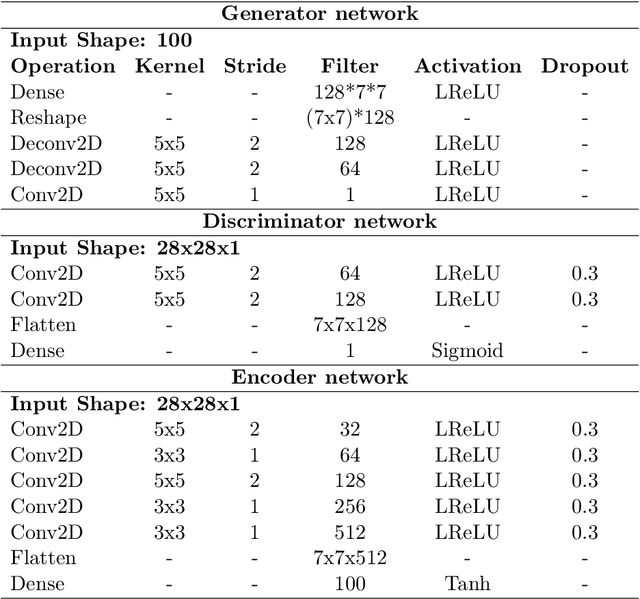

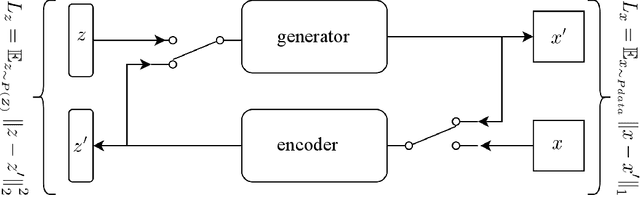

Image attribute editing is a challenging problem that many researchers have recently studied using generative networks. The challenge lies in manipulating selected attributes of an image while preserving its other details. This is typically achieved by finding an accurate latent vector representation of the image and a latent direction corresponding to the attribute. Almost all existing works use labeled datasets in a supervised setting for this purpose. In this study, we introduce an architecture called the Cyclic Reverse Generator (CRG), which learns the inverse function of the generator accurately via an encoder in an unsupervised setting by utilizing cyclic cost minimization. Attribute editing is then performed by using the CRG models to find the desired attribute representations in the latent space. Specifically, we use two arbitrary reference images, one with and one without the desired attributes, to compute an attribute direction for editing. We show that the proposed approach performs better in image reconstruction than existing end-to-end generative models, both quantitatively and qualitatively. We demonstrate state-of-the-art results on both real and generated images from the MNIST and CelebA datasets.
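
The editing step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the linear `generator`, its pseudo-inverse `encoder`, the function names, the `strength` parameter, and the squared-error form of `cyclic_cost` are all assumptions chosen so that the example is self-contained. A real CRG would use trained neural networks in place of these stand-ins.

```python
import numpy as np

rng = np.random.default_rng(0)
LATENT_DIM, IMAGE_DIM = 8, 64

# Hypothetical stand-ins: a linear "generator" G and its pseudo-inverse as the
# "encoder" E. In the paper both are learned networks; this is only a toy.
W = rng.standard_normal((IMAGE_DIM, LATENT_DIM))
W_pinv = np.linalg.pinv(W)


def generator(z):
    """Map a latent vector to a flattened 'image'."""
    return W @ z


def encoder(x):
    """Map a flattened 'image' back to a latent vector."""
    return W_pinv @ x


def cyclic_cost(z):
    """Assumed latent-cycle error ||E(G(z)) - z||^2 (illustrative form only)."""
    return float(np.sum((encoder(generator(z)) - z) ** 2))


def attribute_direction(x_with, x_without):
    """Latent direction from two reference images, with and without the attribute."""
    return encoder(x_with) - encoder(x_without)


def edit(x, direction, strength=1.0):
    """Move the image's latent code along the direction, then decode."""
    return generator(encoder(x) + strength * direction)


# Toy data: the "attribute" is a fixed latent offset added to a base code.
true_offset = rng.standard_normal(LATENT_DIM)
z_ref = rng.standard_normal(LATENT_DIM)
x_without = generator(z_ref)
x_with = generator(z_ref + true_offset)

direction = attribute_direction(x_with, x_without)

# Apply the attribute to a different image.
z_target = rng.standard_normal(LATENT_DIM)
x_target = generator(z_target)
x_edited = edit(x_target, direction)
```

In this toy setting the encoder inverts the generator exactly, so the recovered direction matches the latent offset and the edit changes only that offset. With learned networks the inversion is approximate, which is why the accuracy of the encoder matters for editing quality.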
