Semantic Segmentation via Highly Fused Convolutional Network with Multiple Soft Cost Functions

Jan 04, 2018

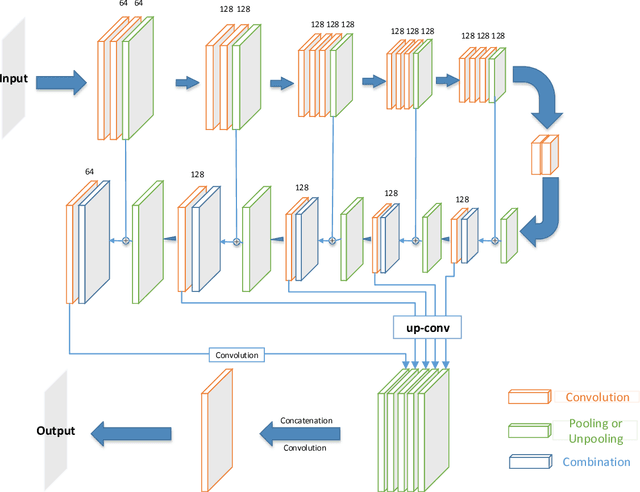

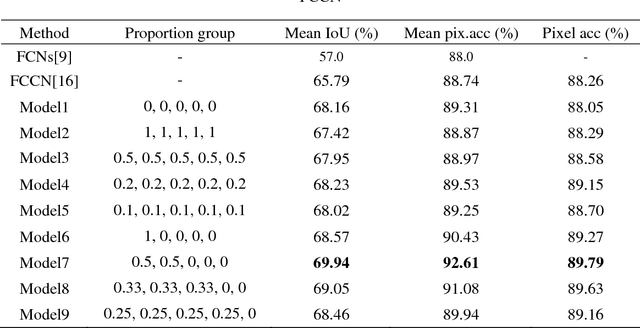

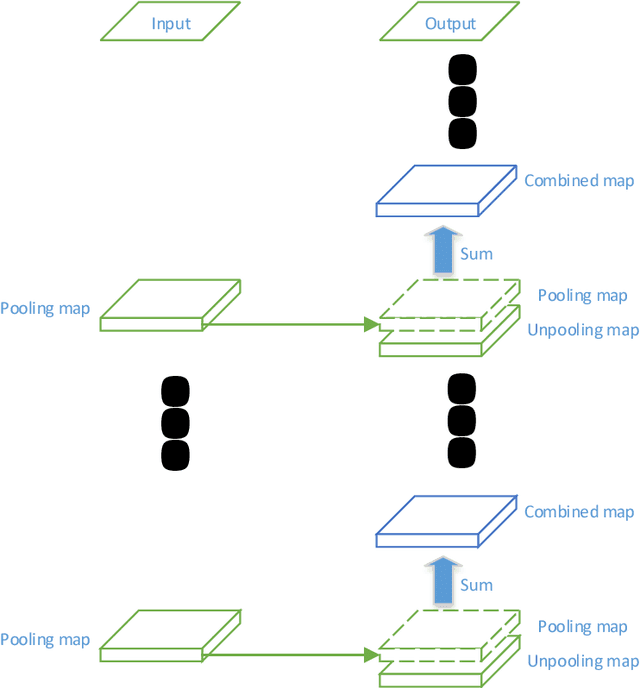

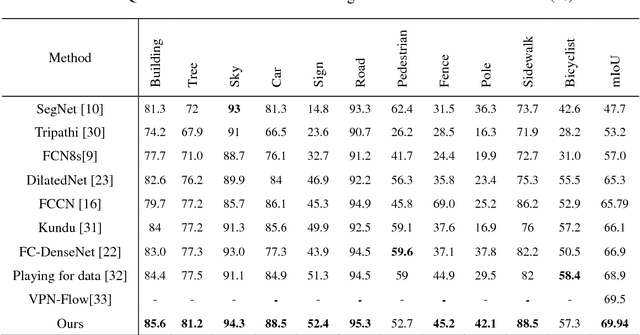

Semantic image segmentation is one of the most challenging tasks in computer vision. In this paper, we propose a highly fused convolutional network that consists of three parts: feature downsampling, combined feature upsampling, and multiple predictions. We upsample in multiple steps and combine the feature maps of each pooling layer with those of its corresponding unpooling layer. We then produce multiple pre-outputs, each generated from an unpooling layer by one-step upsampling. Finally, we concatenate these pre-outputs to obtain the final output. As a result, the proposed network makes extensive use of feature information by fusing and reusing feature maps. In addition, when training the model, we attach multiple soft cost functions to the pre-outputs and the final output. In this way, we reduce the attenuation of the loss as it is back-propagated. We evaluate our model on three major segmentation datasets: CamVid, PASCAL VOC, and ADE20K. We achieve state-of-the-art performance on CamVid, as well as considerable improvements on PASCAL VOC and ADE20K.
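The training objective described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the abstract does not define the "soft" cost function, so plain per-pixel cross-entropy stands in for it here, and the 1x1 mixing step after concatenation, the function names (`fuse_pre_outputs`, `multi_loss`), and the `aux_weight` parameter are all assumptions made for illustration.

```python
import numpy as np


def softmax(logits, axis=0):
    """Numerically stable softmax along the class axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def pixel_cross_entropy(logits, labels):
    """Mean per-pixel cross-entropy.

    logits: float array of shape (C, H, W); labels: int array of shape (H, W).
    Assumption: stands in for the paper's soft cost function, which the
    abstract does not specify.
    """
    probs = softmax(logits, axis=0)
    rows, cols = np.indices(labels.shape)
    picked = probs[labels, rows, cols]  # probability of the true class per pixel
    return float(-np.log(picked + 1e-12).mean())


def fuse_pre_outputs(pre_outputs, fuse_weights):
    """Concatenate pre-outputs along channels, then mix them per pixel.

    Assumption: the 1x1 mixing (fuse_weights of shape (C, K*C)) is a
    hypothetical way to map the concatenation back to C class scores.
    """
    stacked = np.concatenate(pre_outputs, axis=0)  # (K*C, H, W)
    return np.einsum("oc,chw->ohw", fuse_weights, stacked)  # (C, H, W)


def multi_loss(pre_outputs, final_output, labels, aux_weight=1.0):
    """Loss on the final output plus a weighted loss on every pre-output."""
    aux = sum(pixel_cross_entropy(p, labels) for p in pre_outputs)
    return pixel_cross_entropy(final_output, labels) + aux_weight * aux


# Toy usage: 3 pre-outputs, 4 classes, 8x8 image (all values are random).
rng = np.random.default_rng(0)
labels = rng.integers(0, 4, size=(8, 8))
pre_outputs = [rng.normal(size=(4, 8, 8)) for _ in range(3)]
fuse_weights = rng.normal(size=(4, 12))
final_output = fuse_pre_outputs(pre_outputs, fuse_weights)
total = multi_loss(pre_outputs, final_output, labels)
```

Because each pre-output has its own loss term, every unpooling stage receives a gradient signal directly rather than only through the final output, which is the intuition the abstract gives for adding multiple cost functions.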
