Semantic-aware plant traversability estimation in plant-rich environments for agricultural mobile robots

Paper and Code

Aug 02, 2021

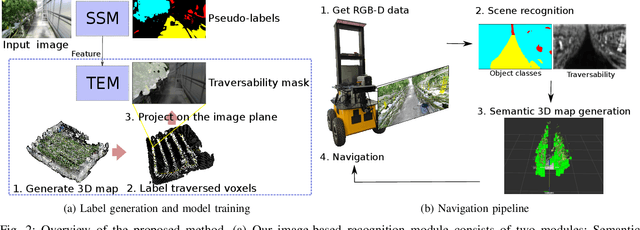

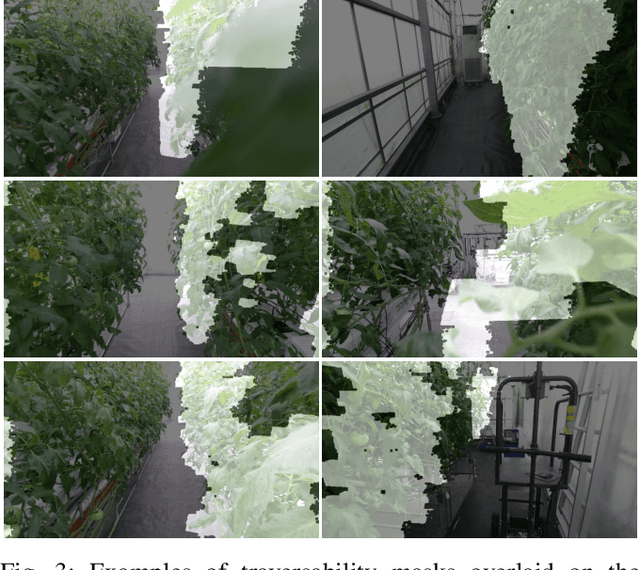

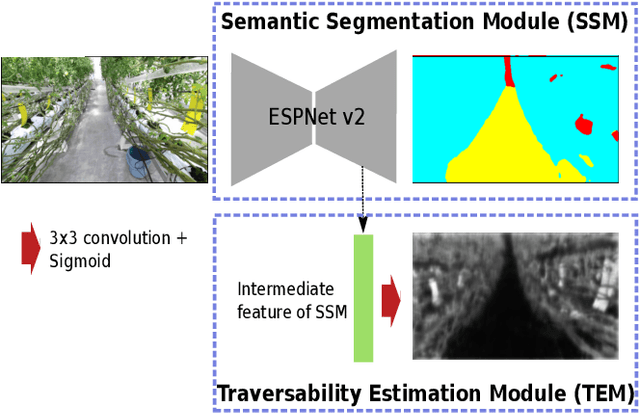

This paper describes a method of estimating the traversability of plant parts covering a path and navigating through them in greenhouses for agricultural mobile robots. Conventional mobile robots rely on scene recognition methods that consider only the presence of objects. Those methods, therefore, cannot recognize paths covered by flexible plants as traversable. In this paper, we present a novel framework of the scene recognition based on image-based semantic segmentation for robot navigation that takes into account the traversable plants covering the paths. In addition, for easily creating training data of the traversability estimation model, we propose a method of generating labels of traversable regions in the images, which we call Traversability masks, based on the robot's traversal experience during the data acquisition phase. It is often difficult for humans to distinguish the traversable plant parts on the images. Our method enables consistent and automatic labeling of those image regions based on the fact of the traversals. We conducted a real world experiment and confirmed that the robot with the proposed recognition method successfully navigated in plant-rich environments.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge