Semantic Adversarial Perturbations using Learnt Representations

Jan 29, 2020

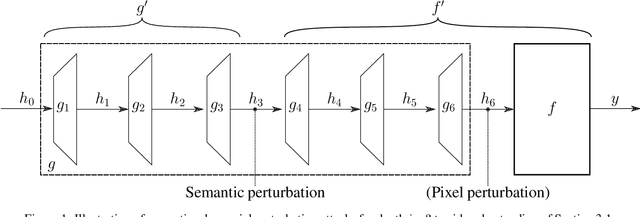

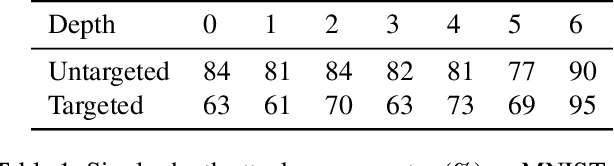

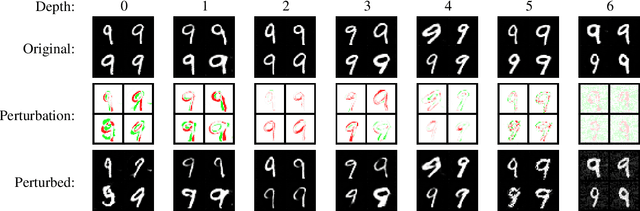

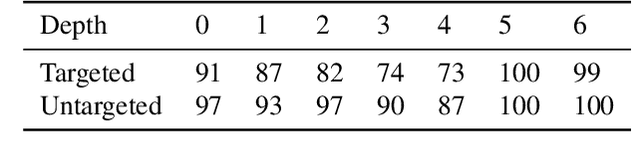

Adversarial examples for image classifiers are typically created by searching for a suitable norm-constrained perturbation to the pixels of an image. However, such perturbations represent only a small and rather contrived subset of possible adversarial inputs; robustness to norm-constrained pixel perturbations alone is insufficient. We introduce a novel method for the construction of a rich new class of semantic adversarial examples. Leveraging the hierarchical feature representations learnt by generative models, our procedure makes adversarial but realistic changes at different levels of semantic granularity. Unlike prior work, this is not an ad-hoc algorithm targeting a fixed category of semantic property. For instance, our approach perturbs the pose, location, size, shape, colour and texture of the objects in an image without manual encoding of these concepts. We demonstrate this new attack by creating semantic adversarial examples that fool state-of-the-art classifiers on the MNIST and ImageNet datasets.
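The core idea of perturbing a generative model's internal features, rather than the output pixels, can be illustrated with a toy sketch. This is not the authors' implementation: the two-layer "generator" (`W1`, `W2`), the linear classifier `C`, the function names, and the plain gradient-descent attack are all hypothetical stand-ins chosen so the example is small and self-contained. A real attack would use a trained generative model and an image classifier, and could perturb activations at several layers.

```python
import numpy as np

# Hypothetical toy "generator": latent z -> hidden features h -> image x.
W1 = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
W2 = np.array([[1.0, 0.0, 0.0],
               [0.0, 1.0, 0.0],
               [0.0, 0.0, 1.0],
               [1.0, 1.0, 1.0]])
# Hypothetical toy linear classifier with two classes.
C = np.array([[1.0, -1.0, 0.0, 0.0],
              [-1.0, 1.0, 0.0, 0.0]])

def softmax(v):
    e = np.exp(v - v.max())
    return e / e.sum()

def hidden(z):
    """Intermediate feature representation of the generator."""
    return np.tanh(W1 @ z)

def decode(h):
    """Map hidden features to a 4-pixel 'image'."""
    return W2 @ h

def classify(x):
    """Class probabilities for an image."""
    return softmax(C @ x)

def semantic_attack(z, target, max_steps=100):
    """Perturb the hidden features (not the pixels) until the
    classifier predicts `target`, or max_steps is reached."""
    h = hidden(z)
    delta = np.zeros_like(h)
    A = C @ W2  # in this toy, logits are linear in the hidden features
    lr = 1.0 / np.linalg.norm(A, 2) ** 2  # step size from the spectral norm
    for step in range(max_steps):
        p = classify(decode(h + delta))
        if p.argmax() == target:
            return delta, step
        onehot = np.eye(len(p))[target]
        # Gradient of the cross-entropy loss towards `target` w.r.t. delta.
        grad = A.T @ (p - onehot)
        delta -= lr * grad
    return delta, max_steps

z = np.array([0.5, -0.5])
original_class = int(classify(decode(hidden(z))).argmax())
delta, steps = semantic_attack(z, target=1)
adversarial_class = int(classify(decode(hidden(z) + delta)).argmax())
print(original_class, adversarial_class, steps)
```

Because the perturbation `delta` is applied before decoding, the adversarial image is still an output of the generator, which is the intuition behind keeping the changes realistic. The toy imposes no constraint on the size of `delta`; that choice is a simplification for the sketch.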
