Self-Supervised Deep Subspace Clustering with Entropy-norm


Jun 10, 2022

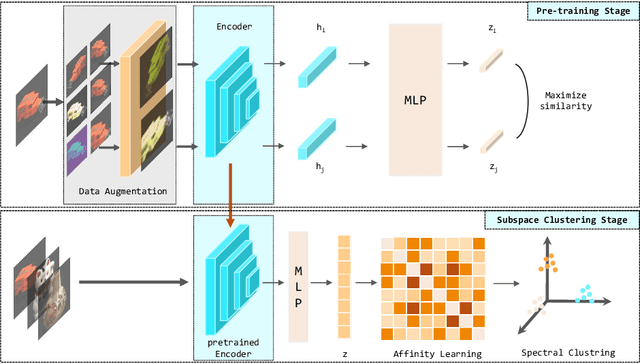

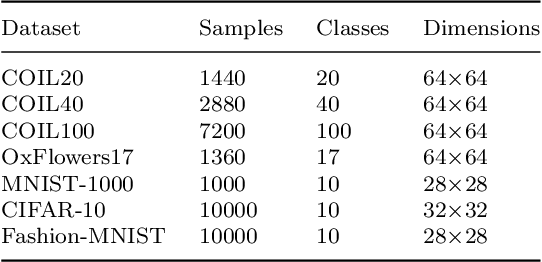

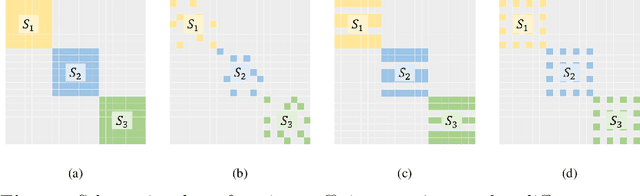

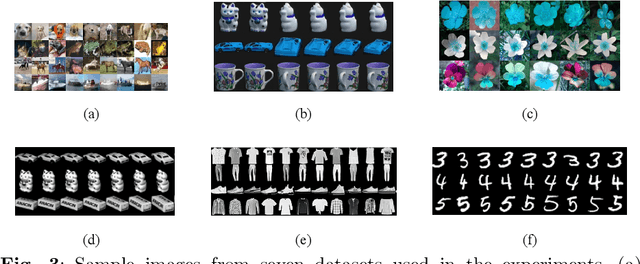

Auto-encoder-based deep subspace clustering (DSC) is widely used in computer vision, motion segmentation, and image processing. However, it suffers from three issues when learning the self-expressive matrix: first, a simple reconstruction loss provides little useful information for learning the self-expressive weights; second, the size of the self-expressive layer is tied to the sample size, so constructing it incurs a high computational cost; and third, existing regularization terms yield limited connectivity. To address these issues, we propose a novel model named Self-Supervised deep Subspace Clustering with Entropy-norm (S$^{3}$CE). Specifically, S$^{3}$CE exploits a self-supervised contrastive network to obtain a more effective feature vector. The local structure and dense connectivity of the original data benefit from the self-expressive layer and an additional entropy-norm constraint. Moreover, a new module with data enhancement is designed to help S$^{3}$CE focus on the key information in the data and to improve the clustering of positive and negative instances through spectral clustering. Extensive experimental results demonstrate the superior performance of S$^{3}$CE in comparison to state-of-the-art approaches.
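
The abstract does not state the objective, so the following is only a minimal sketch of how a self-expressive reconstruction term can be combined with an entropy-style regularizer. It assumes the common form of a squared reconstruction error plus `lam` times the sum of `c * log(c)` over non-negative off-diagonal coefficients; the function name `self_expressive_loss`, the parameter `lam`, and the plain-list data layout are hypothetical and are not taken from the paper.

```python
import math

def self_expressive_loss(Z, C, lam=1.0, eps=1e-12):
    """Illustrative loss: ||Z - C Z||_F^2 + lam * sum_{i != j} C_ij * log(C_ij).

    Z: n x d list of feature rows (e.g. encoder outputs).
    C: n x n list of non-negative coefficients; the diagonal is ignored
       so that no sample reconstructs itself.
    Returns (total, reconstruction_term, entropy_term).
    """
    n, d = len(Z), len(Z[0])

    # Reconstruction: each sample is approximated by a weighted sum of the others.
    recon = 0.0
    for i in range(n):
        for k in range(d):
            approx = sum(C[i][j] * Z[j][k] for j in range(n) if j != i)
            recon += (Z[i][k] - approx) ** 2

    # Entropy-style term (assumed form): entries at or below eps contribute 0.
    entropy = 0.0
    for i in range(n):
        for j in range(n):
            if i != j and C[i][j] > eps:
                entropy += C[i][j] * math.log(C[i][j])

    return recon + lam * entropy, recon, entropy


# Three samples: the first two lie on one axis, the third on another.
Z = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
C = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
total, recon, entropy = self_expressive_loss(Z, C)
```

Under this assumed form, a row that spreads its weight as (0.5, 0.5) scores lower on the regularizer than a row that concentrates it as (1, 0), which is one way such a term could encourage denser connections within a subspace.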
