Self-Repairing Neural Networks: Provable Safety for Deep Networks via Dynamic Repair

Paper and Code

Jul 23, 2021

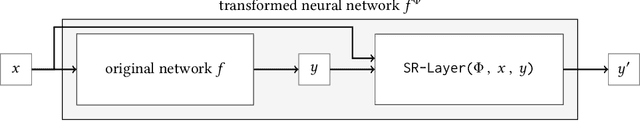

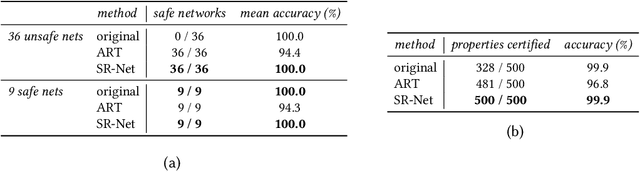

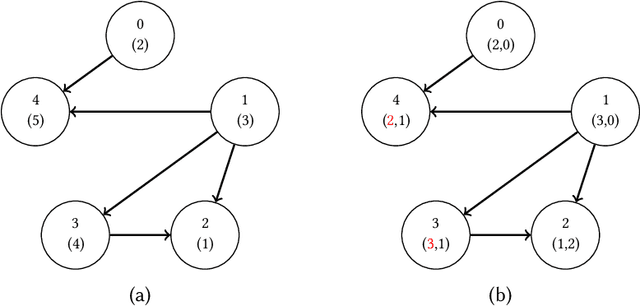

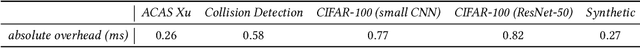

Neural networks are increasingly being deployed in contexts where safety is a critical concern. In this work, we propose a way to construct neural network classifiers that dynamically repair violations of non-relational safety constraints called safe ordering properties. Safe ordering properties relate requirements on the ordering of a network's output indices to conditions on their input, and are sufficient to express most useful notions of non-relational safety for classifiers. Our approach is based on a novel self-repairing layer, which provably yields safe outputs regardless of the characteristics of its input. We compose this layer with an existing network to construct a self-repairing network (SR-Net), and show that in addition to providing safe outputs, the SR-Net is guaranteed to preserve the accuracy of the original network. Notably, our approach is independent of the size and architecture of the network being repaired, depending only on the specified property and the dimension of the network's output; thus it is scalable to large state-of-the-art networks. We show that our approach can be implemented using vectorized computations that execute efficiently on a GPU, introducing run-time overhead of less than one millisecond on current hardware -- even on large, widely-used networks containing hundreds of thousands of neurons and millions of parameters.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge