Self-play for Data Efficient Language Acquisition

Paper and Code

Oct 10, 2020

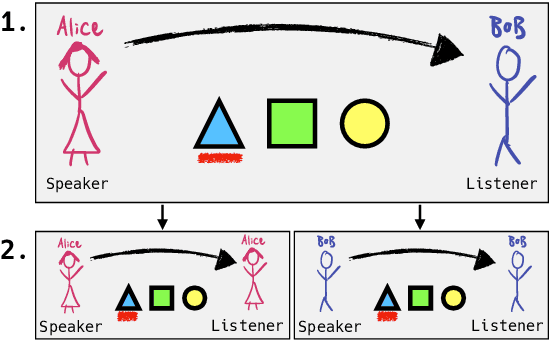

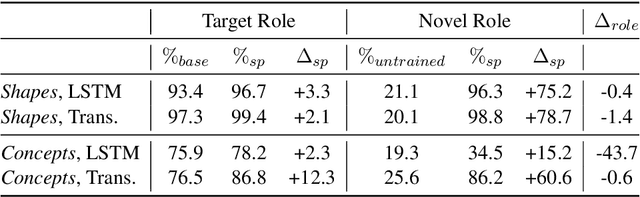

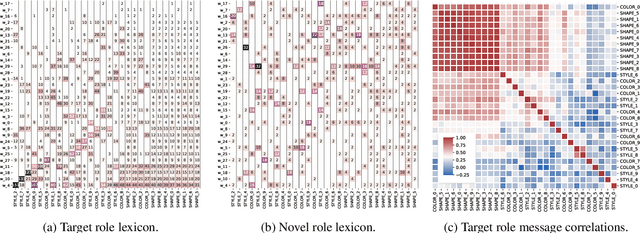

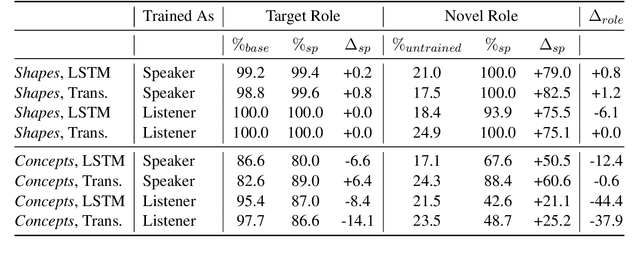

When communicating, people behave consistently across conversational roles: People understand the words they say and are able to produce the words they hear. To date, artificial agents developed for language tasks have lacked such symmetry, meaning agents trained to produce language are unable to understand it and vice-versa. In this work, we exploit the symmetric nature of communication in order to improve both the efficiency and quality of language acquisition in learning agents. Specifically, we consider the setting in which an agent must learn to both understand and generate words in an existing language, but with the assumption that access to interaction with "oracle" speakers of the language is very limited. We show that using self-play as a substitute for direct supervision enables the agent to transfer its knowledge across roles (e.g. training as a listener but testing as a speaker) and make better inferences about the ground truth lexicon using only a handful of interactions with the oracle.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge