Self-Interested Agents in Collaborative Learning: An Incentivized Adaptive Data-Centric Framework

Paper and Code

Dec 09, 2024

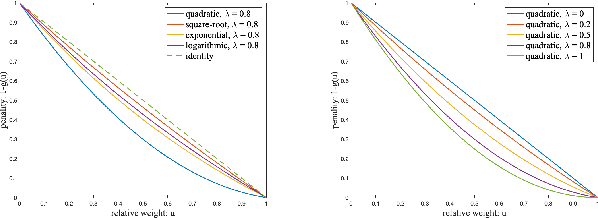

We propose a framework for adaptive data-centric collaborative learning among self-interested agents, coordinated by an arbiter. Designed to handle the incremental nature of real-world data, the framework operates in an online manner: at each step, the arbiter collects a batch of data from agents, trains a machine learning model, and provides each agent with a distinct model reflecting its data contributions. This setup establishes a feedback loop where shared data influence model updates, and the resulting models guide future data-sharing strategies. Agents evaluate and partition their data, selecting a partition to share using a stochastic parameterized policy optimized via policy gradient methods to optimize the utility of the received model as defined by agent-specific evaluation functions. On the arbiter side, the expected loss function over the true data distribution is optimized, incorporating agent-specific weights to account for distributional differences arising from diverse sources and selective sharing. A bilevel optimization algorithm jointly learns the model parameters and agent-specific weights. Mean-zero noise, computed using a distortion function that adjusts these agent-specific weights, is introduced to generate distinct agent-specific models, promoting valuable data sharing without requiring separate training. Our framework is underpinned by non-asymptotic analyses, ensuring convergence of the agent-side policy optimization to an approximate stationary point of the evaluation functions and convergence of the arbiter-side optimization to an approximate stationary point of the expected loss function.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge