Self-Attentive Constituency Parsing for UCCA-based Semantic Parsing

Oct 01, 2021

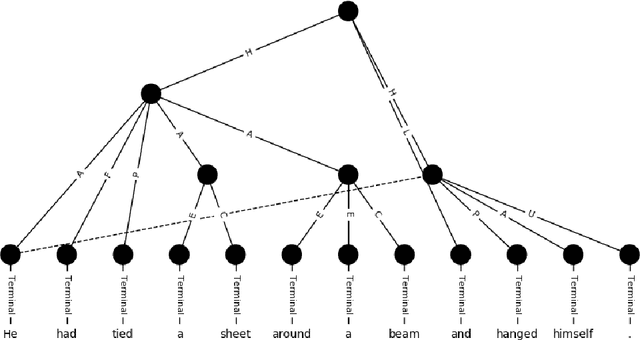

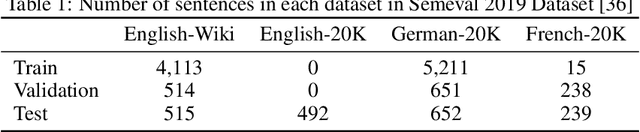

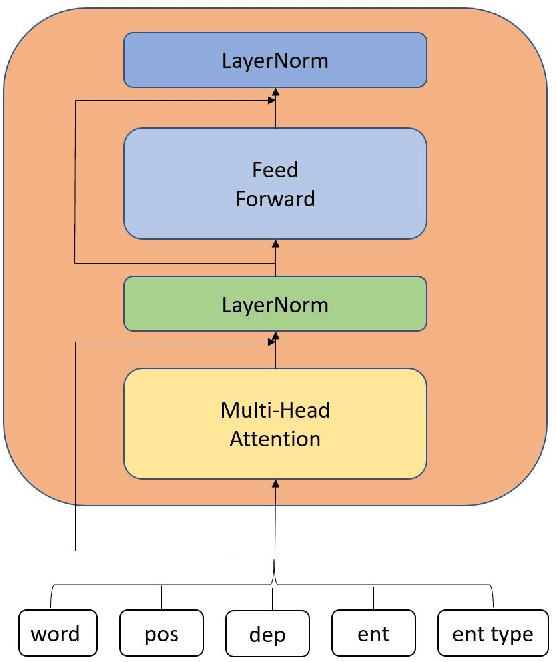

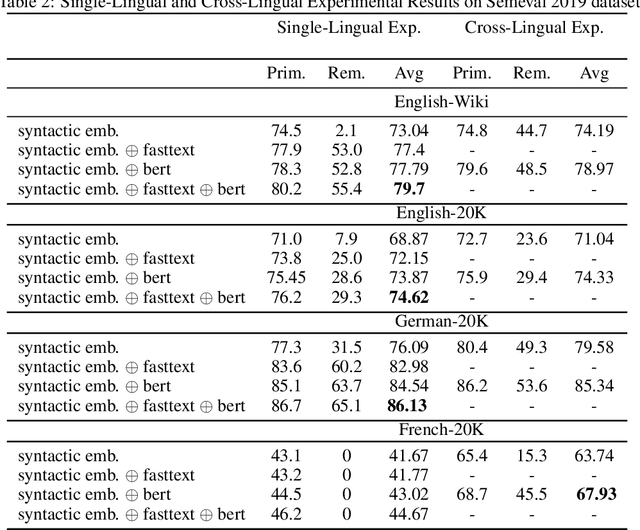

Semantic parsing extracts the semantic structure of a text in a form that machines can process. It is used in NLP applications that require text comprehension, such as summarization and question answering. Graph-based representation is one approach to expressing the semantic structure of a text, and it yields expressive and adequate target structures. In this paper, we focus on the graph-based UCCA semantic representation. We review the existing approaches proposed for the UCCA representation and propose a novel self-attentive neural parsing model for it. We report results for both single-lingual and cross-lingual tasks, using zero-shot and few-shot learning for low-resource languages.
